Merge branch 'master' of github.com:D4-project/d4-core

|

|

@ -0,0 +1,289 @@

|

|||

# -*- coding: utf-8; mode: python -*-

|

||||

##

|

||||

## Format

|

||||

##

|

||||

## ACTION: [AUDIENCE:] COMMIT_MSG [!TAG ...]

|

||||

##

|

||||

## Description

|

||||

##

|

||||

## ACTION is one of 'chg', 'fix', 'new'

|

||||

##

|

||||

## Is WHAT the change is about.

|

||||

##

|

||||

## 'chg' is for refactor, small improvement, cosmetic changes...

|

||||

## 'fix' is for bug fixes

|

||||

## 'new' is for new features, big improvement

|

||||

##

|

||||

## AUDIENCE is optional and one of 'dev', 'usr', 'pkg', 'test', 'doc'|'docs'

|

||||

##

|

||||

## Is WHO is concerned by the change.

|

||||

##

|

||||

## 'dev' is for developers (API changes, refactors...)

|

||||

## 'usr' is for final users (UI changes)

|

||||

## 'pkg' is for packagers (packaging changes)

|

||||

## 'test' is for testers (test only related changes)

|

||||

## 'doc' is for doc guys (doc only changes)

|

||||

##

|

||||

## COMMIT_MSG is ... well ... the commit message itself.

|

||||

##

|

||||

## TAGs are additional adjectives such as 'refactor', 'minor', 'cosmetic'

|

||||

##

|

||||

## They are preceded with a '!' or a '@' (prefer the former, as the

|

||||

## latter is wrongly interpreted in github.) Commonly used tags are:

|

||||

##

|

||||

## 'refactor' is obviously for refactoring code only

|

||||

## 'minor' is for a very meaningless change (a typo, adding a comment)

|

||||

## 'cosmetic' is for cosmetic driven change (re-indentation, 80-col...)

|

||||

## 'wip' is for partial functionality but complete subfunctionality.

|

||||

##

|

||||

## Example:

|

||||

##

|

||||

## new: usr: support of bazaar implemented

|

||||

## chg: re-indented some lines !cosmetic

|

||||

## new: dev: updated code to be compatible with last version of killer lib.

|

||||

## fix: pkg: updated year of licence coverage.

|

||||

## new: test: added a bunch of test around user usability of feature X.

|

||||

## fix: typo in spelling my name in comment. !minor

|

||||

##

|

||||

## Please note that multi-line commit messages are supported, and only the

|

||||

## first line will be considered as the "summary" of the commit message. So

|

||||

## tags, and other rules only apply to the summary. The body of the commit

|

||||

## message will be displayed in the changelog without reformatting.

|

||||

|

||||

|

||||

##

|

||||

## ``ignore_regexps`` is a list of regexps

|

||||

##

|

||||

## Any commit having its full commit message matching any regexp listed here

|

||||

## will be ignored and won't be reported in the changelog.

|

||||

##

|

||||

ignore_regexps = [

|

||||

r'@minor', r'!minor',

|

||||

r'@cosmetic', r'!cosmetic',

|

||||

r'@refactor', r'!refactor',

|

||||

r'@wip', r'!wip',

|

||||

r'^([cC]hg|[fF]ix|[nN]ew)\s*:\s*[p|P]kg:',

|

||||

r'^([cC]hg|[fF]ix|[nN]ew)\s*:\s*[d|D]ev:',

|

||||

r'^(.{3,3}\s*:)?\s*[fF]irst commit.?\s*$',

|

||||

]
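To see how these patterns behave, here is a minimal sketch that applies the list above with Python's `re` module. It assumes each pattern is tried with `re.search` against the commit message; the helper name `is_ignored` is hypothetical and is not part of gitchangelog.

```python
import re

# Patterns copied verbatim from the ignore_regexps list above.
IGNORE_REGEXPS = [
    r'@minor', r'!minor',
    r'@cosmetic', r'!cosmetic',
    r'@refactor', r'!refactor',
    r'@wip', r'!wip',
    r'^([cC]hg|[fF]ix|[nN]ew)\s*:\s*[p|P]kg:',
    r'^([cC]hg|[fF]ix|[nN]ew)\s*:\s*[d|D]ev:',
    r'^(.{3,3}\s*:)?\s*[fF]irst commit.?\s*$',
]


def is_ignored(message):
    """Return True if any ignore pattern is found in the message.

    Hypothetical helper for illustration only.
    """
    return any(re.search(pattern, message) for pattern in IGNORE_REGEXPS)


if __name__ == "__main__":
    for msg in ("new: usr: support of bazaar implemented",
                "fix: typo in spelling my name in comment. !minor",
                "new: dev: updated code",
                "First commit"):
        print(msg, "->", "ignored" if is_ignored(msg) else "kept")
```

With this configuration, anything tagged `!minor`, `!cosmetic`, `!refactor` or `!wip`, and anything addressed to `pkg` or `dev`, would be left out of the changelog.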

|

||||

|

||||

|

||||

## ``section_regexps`` is a list of 2-tuples associating a string label and a

|

||||

## list of regexp

|

||||

##

|

||||

## Commit messages will be classified in sections thanks to this. Section

|

||||

## titles are the label, and a commit is classified under this section if any

|

||||

## of the regexps associated is matching.

|

||||

##

|

||||

## Please note that ``section_regexps`` will only classify commits and won't

|

||||

## make any changes to the contents. So you'll probably want to go check

|

||||

## ``subject_process`` (or ``body_process``) to do some changes to the subject,

|

||||

## whenever you are tweaking this variable.

|

||||

##

|

||||

section_regexps = [

|

||||

('New', [

|

||||

r'^[nN]ew\s*:\s*((dev|use?r|pkg|test|doc|docs)\s*:\s*)?([^\n]*)$',

|

||||

]),

|

||||

('Changes', [

|

||||

r'^[cC]hg\s*:\s*((dev|use?r|pkg|test|doc|docs)\s*:\s*)?([^\n]*)$',

|

||||

]),

|

||||

('Fix', [

|

||||

r'^[fF]ix\s*:\s*((dev|use?r|pkg|test|doc|docs)\s*:\s*)?([^\n]*)$',

|

||||

]),

|

||||

|

||||

('Other', None ## Match all lines

|

||||

),

|

||||

|

||||

]
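The classification described above can be sketched as a first-match lookup. This is an illustrative reimplementation, not gitchangelog's own code: the function name `classify` is hypothetical, and it assumes sections are tried in list order with `None` acting as a catch-all.

```python
import re

AUDIENCE = r'((dev|use?r|pkg|test|doc|docs)\s*:\s*)?([^\n]*)$'

# Same labels and patterns as the section_regexps list above.
SECTION_REGEXPS = [
    ('New', [r'^[nN]ew\s*:\s*' + AUDIENCE]),
    ('Changes', [r'^[cC]hg\s*:\s*' + AUDIENCE]),
    ('Fix', [r'^[fF]ix\s*:\s*' + AUDIENCE]),
    ('Other', None),  # None matches every subject
]


def classify(subject):
    """Return the label of the first section whose patterns match."""
    for label, patterns in SECTION_REGEXPS:
        if patterns is None:
            return label
        if any(re.search(pattern, subject) for pattern in patterns):
            return label
    return None


if __name__ == "__main__":
    print(classify("new: usr: support of bazaar implemented"))
    print(classify("Merge branch 'master'"))
```

Note that classification leaves the subject untouched; stripping the `new:` prefix is the job of ``subject_process``.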

|

||||

|

||||

|

||||

## ``body_process`` is a callable

|

||||

##

|

||||

## This callable will be given the original body and result will

|

||||

## be used in the changelog.

|

||||

##

|

||||

## Available constructs are:

|

||||

##

|

||||

## - any python callable that takes one txt argument and returns a txt argument.

|

||||

##

|

||||

## - ReSub(pattern, replacement): will apply regexp substitution.

|

||||

##

|

||||

## - Indent(chars=" "): will indent the text with the prefix

|

||||

## Please remember that template engines also get to modify the text and

|

||||

## will usually indent the text themselves if needed.

|

||||

##

|

||||

## - Wrap(regexp=r"\n\n"): re-wrap text in separate paragraph to fill 80-Columns

|

||||

##

|

||||

## - noop: do nothing

|

||||

##

|

||||

## - ucfirst: ensure the first letter is uppercase.

|

||||

## (usually used in the ``subject_process`` pipeline)

|

||||

##

|

||||

## - final_dot: ensure text finishes with a dot

|

||||

## (usually used in the ``subject_process`` pipeline)

|

||||

##

|

||||

## - strip: remove any spaces before or after the content of the string

|

||||

##

|

||||

## - SetIfEmpty(msg="No commit message."): will set the text to

|

||||

## whatever given ``msg`` if the current text is empty.

|

||||

##

|

||||

## Additionally, you can `pipe` the provided filters, for instance:

|

||||

#body_process = Wrap(regexp=r'\n(?=\w+\s*:)') | Indent(chars=" ")

|

||||

#body_process = Wrap(regexp=r'\n(?=\w+\s*:)')

|

||||

#body_process = noop

|

||||

body_process = ReSub(r'((^|\n)[A-Z]\w+(-\w+)*: .*(\n\s+.*)*)+$', r'') | strip

|

||||

|

||||

|

||||

## ``subject_process`` is a callable

|

||||

##

|

||||

## This callable will be given the original subject and result will

|

||||

## be used in the changelog.

|

||||

##

|

||||

## Available constructs are those listed in ``body_process`` doc.

|

||||

subject_process = (strip |

|

||||

ReSub(r'^([cC]hg|[fF]ix|[nN]ew)\s*:\s*((dev|use?r|pkg|test|doc|docs)\s*:\s*)?([^\n@]*)(@[a-z]+\s+)*$', r'\4') |

|

||||

SetIfEmpty("No commit message.") | ucfirst | final_dot)
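As a rough illustration of what this pipeline does to a subject line, the sketch below re-creates each stage with plain Python. It is not gitchangelog's implementation: `process_subject` is a hypothetical name, and the behaviour of `final_dot` (append a dot only when the text ends with an alphanumeric character) is an assumption.

```python
import re

# Same pattern as the ReSub(...) stage above, split for readability.
SUBJECT_RE = (r'^([cC]hg|[fF]ix|[nN]ew)\s*:\s*'
              r'((dev|use?r|pkg|test|doc|docs)\s*:\s*)?'
              r'([^\n@]*)(@[a-z]+\s+)*$')


def process_subject(subject):
    """Mimic strip | ReSub | SetIfEmpty | ucfirst | final_dot."""
    text = subject.strip()                    # strip
    text = re.sub(SUBJECT_RE, r'\4', text)    # keep only the message part
    if not text:                              # SetIfEmpty
        text = "No commit message."
    text = text[0].upper() + text[1:]         # ucfirst
    if text[-1].isalnum():                    # final_dot (assumed behaviour)
        text += "."
    return text


if __name__ == "__main__":
    print(process_subject("new: usr: support of bazaar implemented"))
    print(process_subject(""))
```

The action and audience prefixes are dropped because the replacement keeps only the fourth capture group, which holds the commit message itself.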

|

||||

|

||||

|

||||

## ``tag_filter_regexp`` is a regexp

|

||||

##

|

||||

## Tags that will be used for the changelog must match this regexp.

|

||||

##

|

||||

tag_filter_regexp = r'^v[0-9]+\.[0-9]+$'
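A quick way to check which tags this pattern accepts is to run it over a few candidates. The sketch below is only an illustration; the tag names are made up and `release_tags` is a hypothetical helper.

```python
import re

TAG_FILTER_REGEXP = r'^v[0-9]+\.[0-9]+$'


def release_tags(tags):
    """Keep only the tags matching the two-component vMAJOR.MINOR form."""
    return [tag for tag in tags if re.search(TAG_FILTER_REGEXP, tag)]


if __name__ == "__main__":
    print(release_tags(["v0.1", "v1.2.3", "1.0", "v10.42", "v1.0-rc1"]))
```

Tags with a patch component (such as `v1.2.3`) or without the leading `v` would not appear as changelog versions under this setting.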

|

||||

|

||||

|

||||

|

||||

## ``unreleased_version_label`` is a string or a callable that outputs a string

|

||||

##

|

||||

## This label will be used as the changelog Title of the last set of changes

|

||||

## between last valid tag and HEAD if any.

|

||||

unreleased_version_label = "%%version%% (unreleased)"

|

||||

|

||||

|

||||

## ``output_engine`` is a callable

|

||||

##

|

||||

## This will change the output format of the generated changelog file

|

||||

##

|

||||

## Available choices are:

|

||||

##

|

||||

## - rest_py

|

||||

##

|

||||

## Legacy pure python engine, outputs ReSTructured text.

|

||||

## This is the default.

|

||||

##

|

||||

## - mustache(<template_name>)

|

||||

##

|

||||

## Template name could be any of the available templates in

|

||||

## ``templates/mustache/*.tpl``.

|

||||

## Requires python package ``pystache``.

|

||||

## Examples:

|

||||

## - mustache("markdown")

|

||||

## - mustache("restructuredtext")

|

||||

##

|

||||

## - makotemplate(<template_name>)

|

||||

##

|

||||

## Template name could be any of the available templates in

|

||||

## ``templates/mako/*.tpl``.

|

||||

## Requires python package ``mako``.

|

||||

## Examples:

|

||||

## - makotemplate("restructuredtext")

|

||||

##

|

||||

output_engine = rest_py

|

||||

#output_engine = mustache("restructuredtext")

|

||||

#output_engine = mustache("markdown")

|

||||

#output_engine = makotemplate("restructuredtext")

|

||||

|

||||

|

||||

## ``include_merge`` is a boolean

|

||||

##

|

||||

## This option tells git-log whether to include merge commits in the log.

|

||||

## The default is to include them.

|

||||

include_merge = True

|

||||

|

||||

|

||||

## ``log_encoding`` is a string identifier

|

||||

##

|

||||

## This option tells gitchangelog what encoding is output by ``git log``.

|

||||

## The default is to be clever about it: it checks ``git config`` for

|

||||

## ``i18n.logOutputEncoding``, and if not found will default to git's own

|

||||

## default: ``utf-8``.

|

||||

#log_encoding = 'utf-8'

|

||||

|

||||

|

||||

## ``publish`` is a callable

|

||||

##

|

||||

## Sets what ``gitchangelog`` should do with the output generated by

|

||||

## the output engine. ``publish`` is a callable taking one argument

|

||||

## that is an iterator on lines from the output engine.

|

||||

##

|

||||

## Some helper callables are provided:

|

||||

##

|

||||

## Available choices are:

|

||||

##

|

||||

## - stdout

|

||||

##

|

||||

## Outputs directly to standard output

|

||||

## (This is the default)

|

||||

##

|

||||

## - FileInsertAtFirstRegexMatch(file, pattern, idx=lambda m: m.start())

|

||||

##

|

||||

## Creates a callable that will parse given file for the given

|

||||

## regex pattern and will insert the output in the file.

|

||||

## ``idx`` is a callable that receives the matching object and

|

||||

## must return an integer index point where to insert

|

||||

## the output in the file. Default is to return the position of

|

||||

## the start of the matched string.

|

||||

##

|

||||

## - FileRegexSubst(file, pattern, replace, flags)

|

||||

##

|

||||

## Apply a replace inplace in the given file. Your regex pattern must

|

||||

## take care of everything and might be more complex. Check the README

|

||||

## for a complete copy-pastable example.

|

||||

##

|

||||

# publish = FileInsertAtFirstRegexMatch(

|

||||

# "CHANGELOG.rst",

|

||||

# r'/(?P<rev>[0-9]+\.[0-9]+(\.[0-9]+)?)\s+\([0-9]+-[0-9]{2}-[0-9]{2}\)\n--+\n/',

|

||||

# idx=lambda m: m.start(1)

|

||||

# )

|

||||

#publish = stdout

|

||||

|

||||

|

||||

## ``revs`` is a list of callables or a list of strings

|

||||

##

|

||||

## Callables will be called to resolve to strings, allowing dynamic

|

||||

## computation of these. The result will be used as revisions for

|

||||

## gitchangelog (as if directly stated on the command line). This allows

|

||||

## to filter exactly which commits will be read by gitchangelog.

|

||||

##

|

||||

## To get a full documentation on the format of these strings, please

|

||||

## refer to the ``git rev-list`` arguments. There are many examples.

|

||||

##

|

||||

## Using callables is especially useful, for instance, if you

|

||||

## are using gitchangelog to generate your changelog incrementally.

|

||||

##

|

||||

## Some helpers are provided, you can use them::

|

||||

##

|

||||

## - FileFirstRegexMatch(file, pattern): will return a callable that will

|

||||

## return the first string match for the given pattern in the given file.

|

||||

## If you use named sub-patterns in your regex pattern, it'll output only

|

||||

## the string matching the regex pattern named "rev".

|

||||

##

|

||||

## - Caret(rev): will return the rev prefixed by a "^", which is a

|

||||

## way to remove the given revision and all its ancestors.

|

||||

##

|

||||

## Please note that if you provide a rev-list on the command line, it'll

|

||||

## replace this value (which will then be ignored).

|

||||

##

|

||||

## If empty, then ``gitchangelog`` will act as if it had to generate a full

|

||||

## changelog.

|

||||

##

|

||||

## The default is to use all commits to make the changelog.

|

||||

#revs = ["^1.0.3", ]

|

||||

#revs = [

|

||||

# Caret(

|

||||

# FileFirstRegexMatch(

|

||||

# "CHANGELOG.rst",

|

||||

# r"(?P<rev>[0-9]+\.[0-9]+(\.[0-9]+)?)\s+\([0-9]+-[0-9]{2}-[0-9]{2}\)\n--+\n")),

|

||||

# "HEAD"

|

||||

#]

|

||||

revs = []

|

||||

|

|

@ -0,0 +1,8 @@

|

|||

# Temp files

|

||||

*.swp

|

||||

*.pyc

|

||||

*.swo

|

||||

*.o

|

||||

|

||||

# redis datas

|

||||

server/dump6380.rdb

|

||||

75

README.md

|

|

@ -1,17 +1,84 @@

|

|||

# D4 core

|

||||

|

||||

Software components used for the D4 project

|

||||

|

||||

|

||||

D4 core is the set of software components used in the D4 project. The software includes everything needed to create your own sensor network or connect

|

||||

to an existing sensor network using simple clients.

|

||||

|

||||

|

||||

|

||||

|

||||

## D4 core client

|

||||

|

||||

[D4 core client](https://github.com/D4-project/d4-core/tree/master/client) is a simple and minimal implementation of the [D4 encapsulation protocol](https://github.com/D4-project/architecture/tree/master/format). There is also a [portable D4 client](https://github.com/D4-project/d4-goclient) written in Go that supports SSL/TLS connectivity.

|

||||

|

||||

### Requirements

|

||||

|

||||

- Unix-like operating system

|

||||

- make

|

||||

- a recent C compiler

|

||||

|

||||

### Usage

|

||||

|

||||

The D4 client can be used to stream any byte stream towards a D4 server.

|

||||

|

||||

As an example, you can stream tcpdump output directly to a D4 server with the following

|

||||

script:

|

||||

|

||||

````

|

||||

tcpdump -n -s0 -w - | ./d4 -c ./conf | socat - OPENSSL-CONNECT:$D4-SERVER-IP-ADDRESS:$PORT,verify=0

|

||||

````

|

||||

|

||||

~~~~

|

||||

d4 - d4 client

|

||||

Read data from the configured <source> and send it to <destination>

|

||||

|

||||

Usage: d4 -c config_directory

|

||||

|

||||

Configuration

|

||||

|

||||

The configuration settings are stored in files in the configuration directory

|

||||

specified with the -c command line switch.

|

||||

|

||||

Files in the configuration directory

|

||||

|

||||

key - is the private HMAC-SHA-256-128 key.

|

||||

The HMAC is computed on the header with a HMAC value set to 0

|

||||

which is updated later.

|

||||

snaplen - the number of bytes read at a time from the <source>

|

||||

version - the version of the d4 client

|

||||

type - the type of data that is sent (pcap, netflow, ...)

|

||||

source - the source where the data is read from

|

||||

destination - the destination where the data is written to

|

||||

~~~~
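The note about the HMAC being computed with its own field set to 0 can be illustrated with a short Python sketch. Several details here are assumptions rather than statements about the C client: the 62-byte layout (version, type, UUID, timestamp, HMAC, size) is inferred from the offsets used in `server/server.py`, the little-endian byte order is assumed, the digest is taken over header plus payload, and the names `build_packet` and `verify_packet` are hypothetical.

```python
import hashlib
import hmac
import struct
import time
import uuid

# Assumed header layout, 62 bytes, little-endian, no padding:
# version (1) | type (1) | uuid (16) | timestamp (8) | hmac (32) | size (4)
HEADER_FORMAT = '<BB16sQ32sI'
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)
HMAC_OFFSET = 26
HMAC_SIZE = 32


def build_packet(key, sensor_uuid, data_type, payload, version=1, timestamp=None):
    """Pack a header with a zeroed HMAC field, then fill in the digest."""
    if timestamp is None:
        timestamp = int(time.time())
    header = struct.pack(HEADER_FORMAT, version, data_type, sensor_uuid.bytes,
                         timestamp, bytes(HMAC_SIZE), len(payload))
    # The digest covers the zeroed header followed by the payload (assumption).
    digest = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header[:HMAC_OFFSET] + digest + header[HMAC_OFFSET + HMAC_SIZE:] + payload


def verify_packet(key, packet):
    """Zero the HMAC field again and compare digests in constant time."""
    received = packet[HMAC_OFFSET:HMAC_OFFSET + HMAC_SIZE]
    zeroed = (packet[:HMAC_OFFSET] + bytes(HMAC_SIZE)
              + packet[HMAC_OFFSET + HMAC_SIZE:])
    expected = hmac.new(key, zeroed, hashlib.sha256).digest()
    return hmac.compare_digest(received, expected)


if __name__ == "__main__":
    packet = build_packet(b'private key to change', uuid.uuid4(), 1, b'hello')
    print(len(packet), verify_packet(b'private key to change', packet))
```

Zeroing the field before hashing lets sender and receiver compute the digest over exactly the same bytes, since the digest cannot be part of its own input.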

|

||||

|

||||

### Installation

|

||||

|

||||

~~~~

|

||||

cd client

|

||||

git submodule init

|

||||

git submodule update

|

||||

~~~~

|

||||

|

||||

## D4 core server

|

||||

|

||||

D4 core server is a complete server to handle clients (sensors) including the decapsulation of the [D4 protocol](https://github.com/D4-project/architecture/tree/master/format), control of

|

||||

sensor registrations, management of decoding protocols and dispatching to adequate decoders/analysers.

|

||||

|

||||

### Requirements

|

||||

|

||||

- uuid-dev

|

||||

- make

|

||||

- a recent C compiler

|

||||

- Python 3.6

|

||||

- GNU/Linux distribution

|

||||

|

||||

### Installation

|

||||

|

||||

|

||||

- [Install D4 Server](https://github.com/D4-project/d4-core/tree/master/server)

|

||||

|

||||

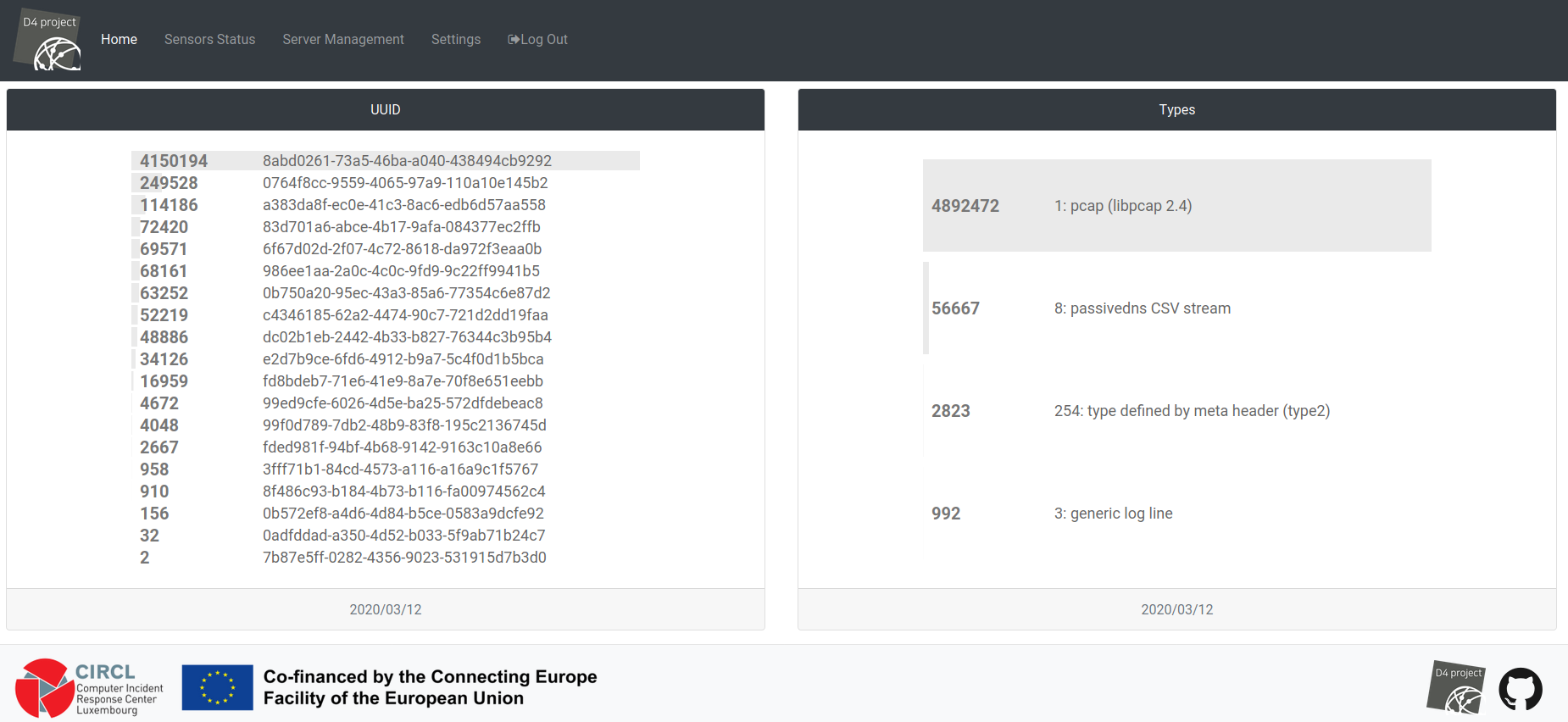

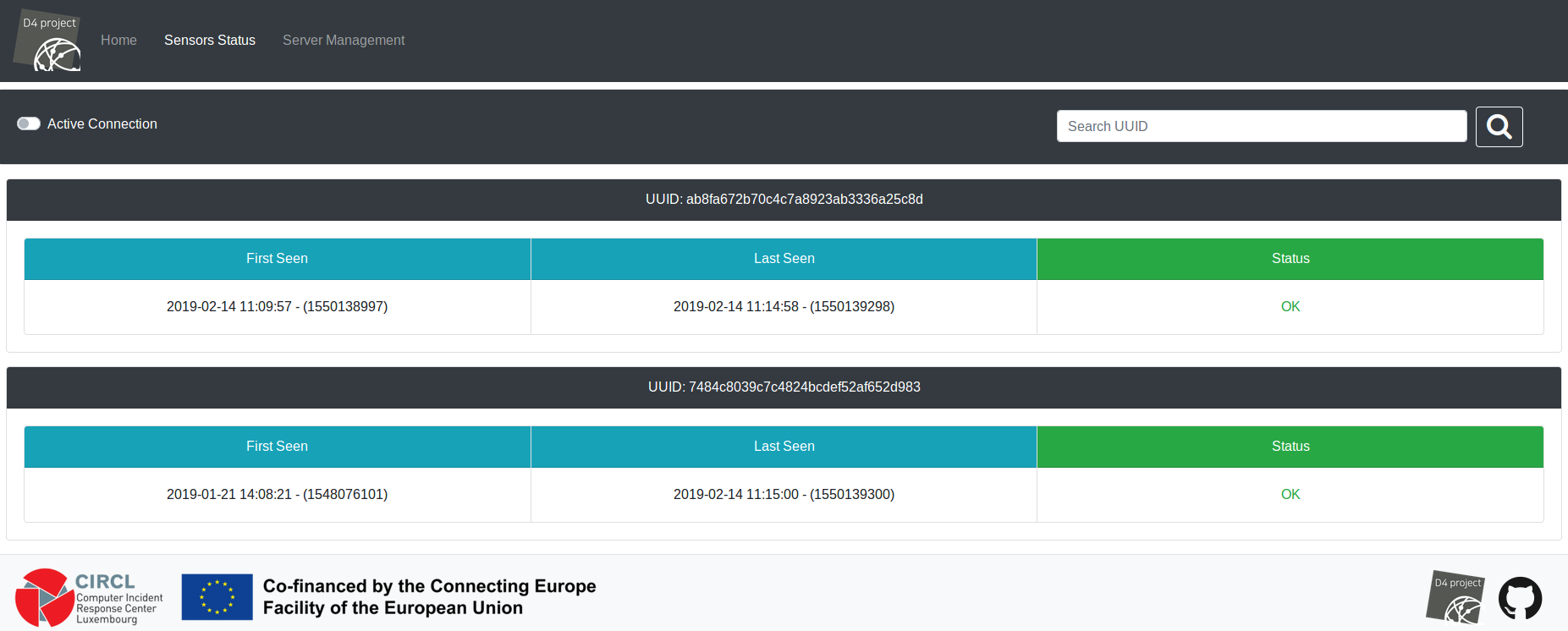

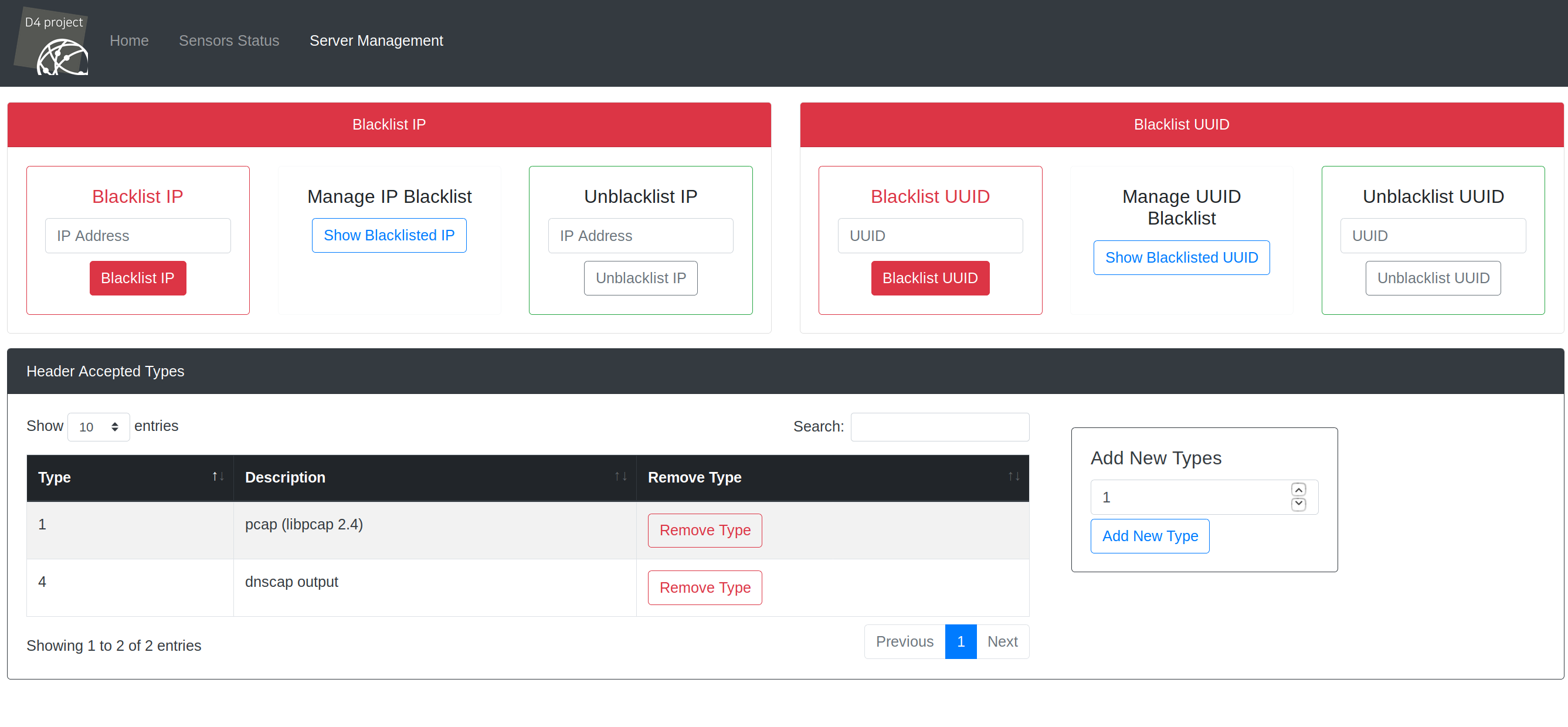

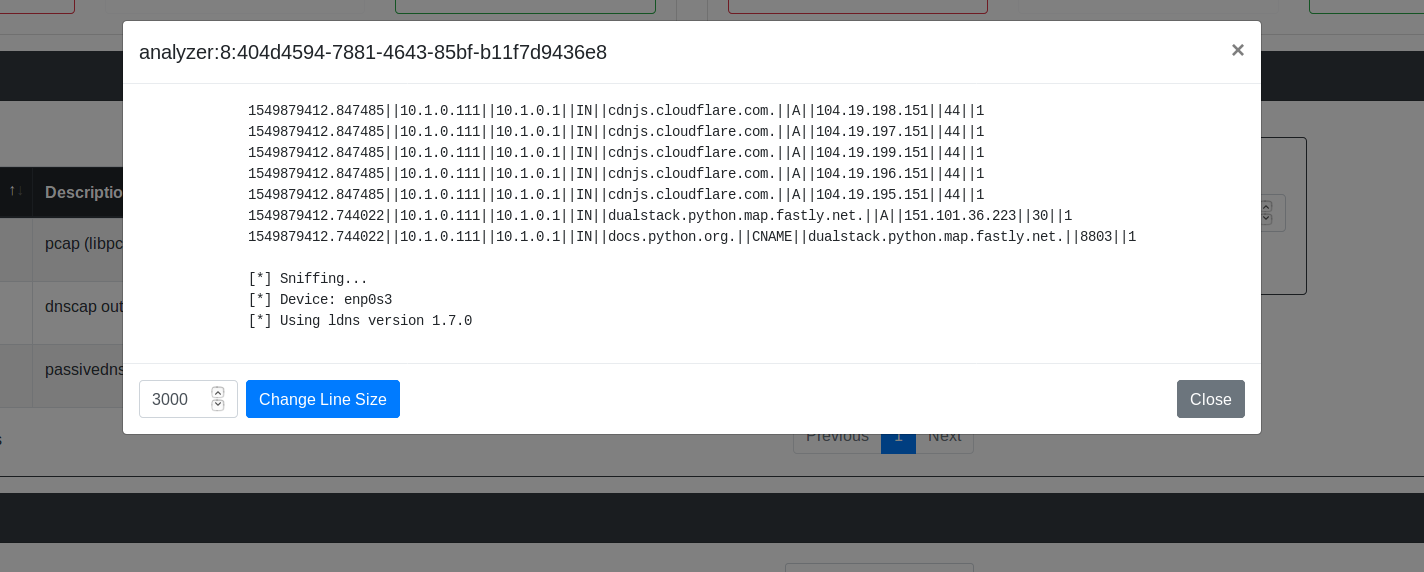

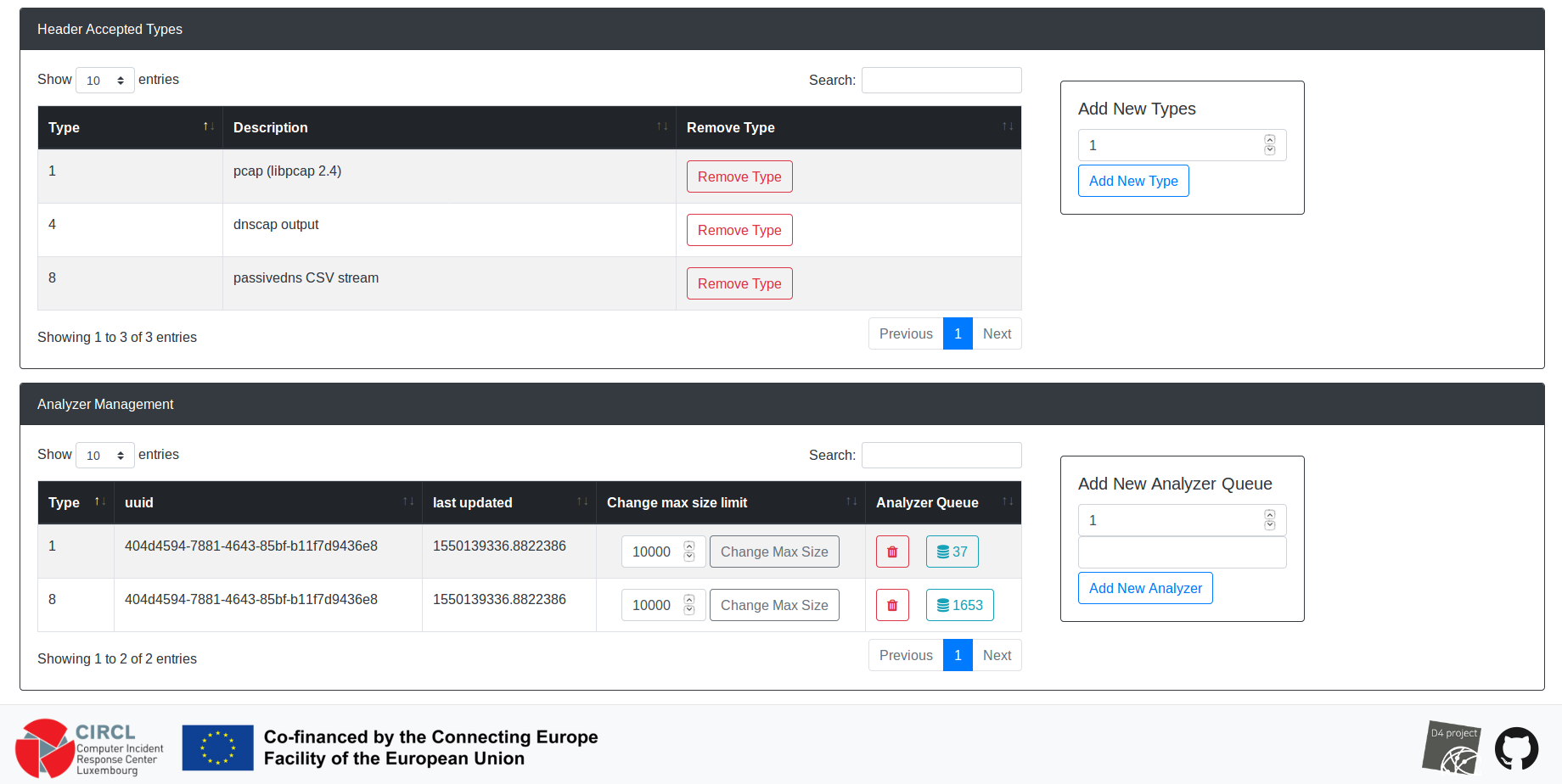

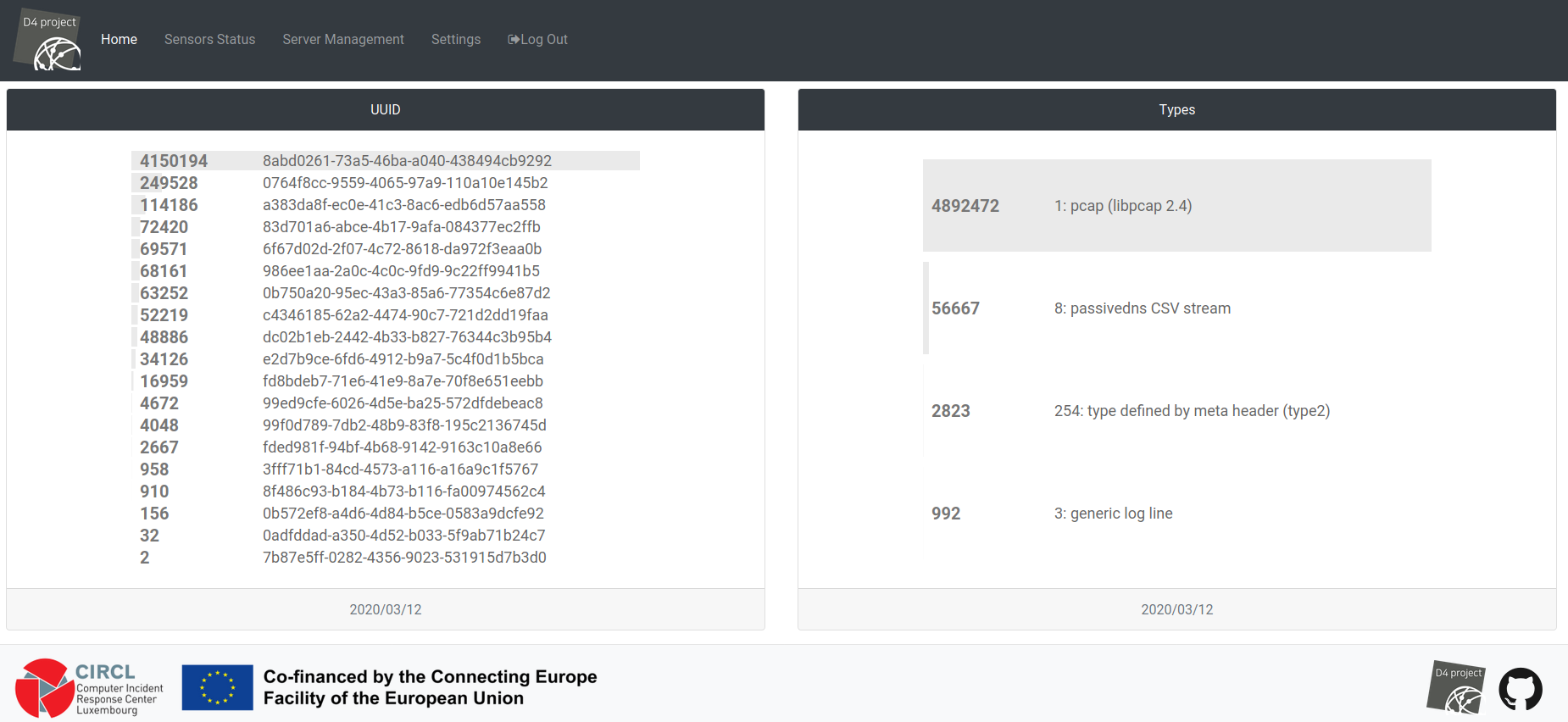

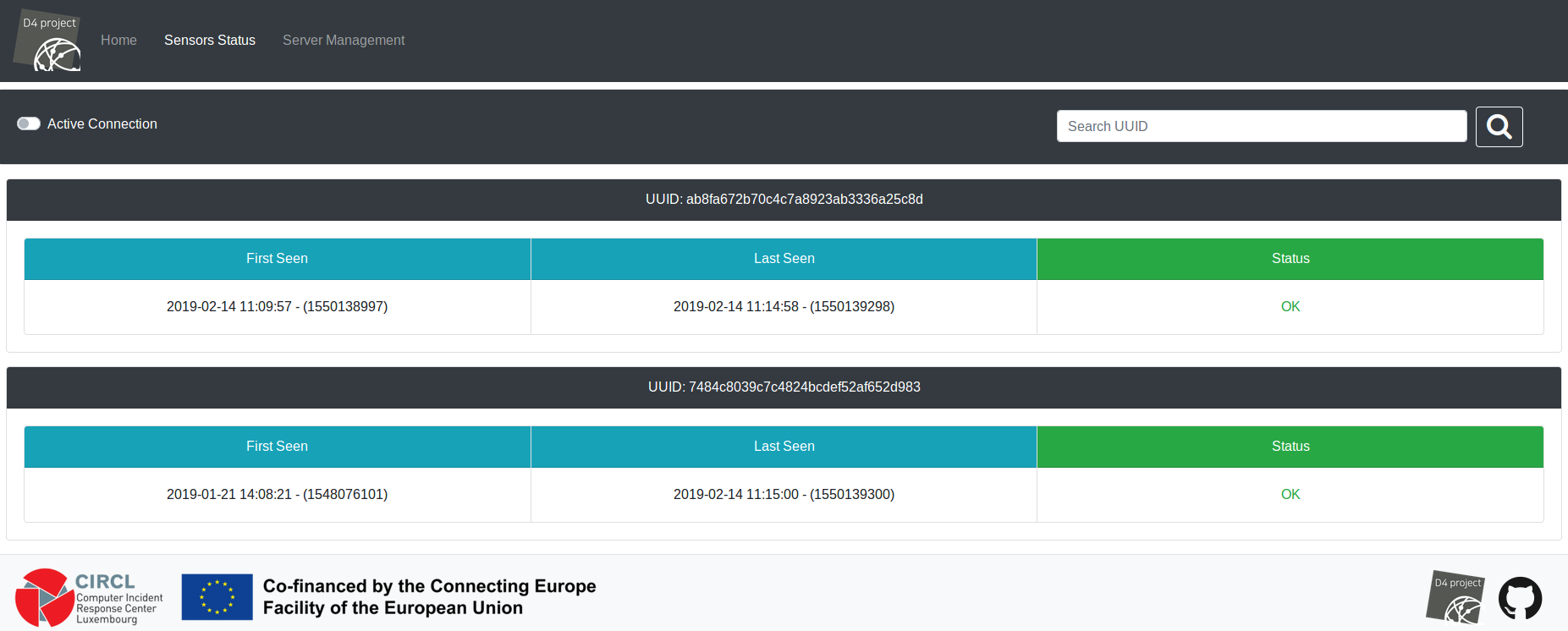

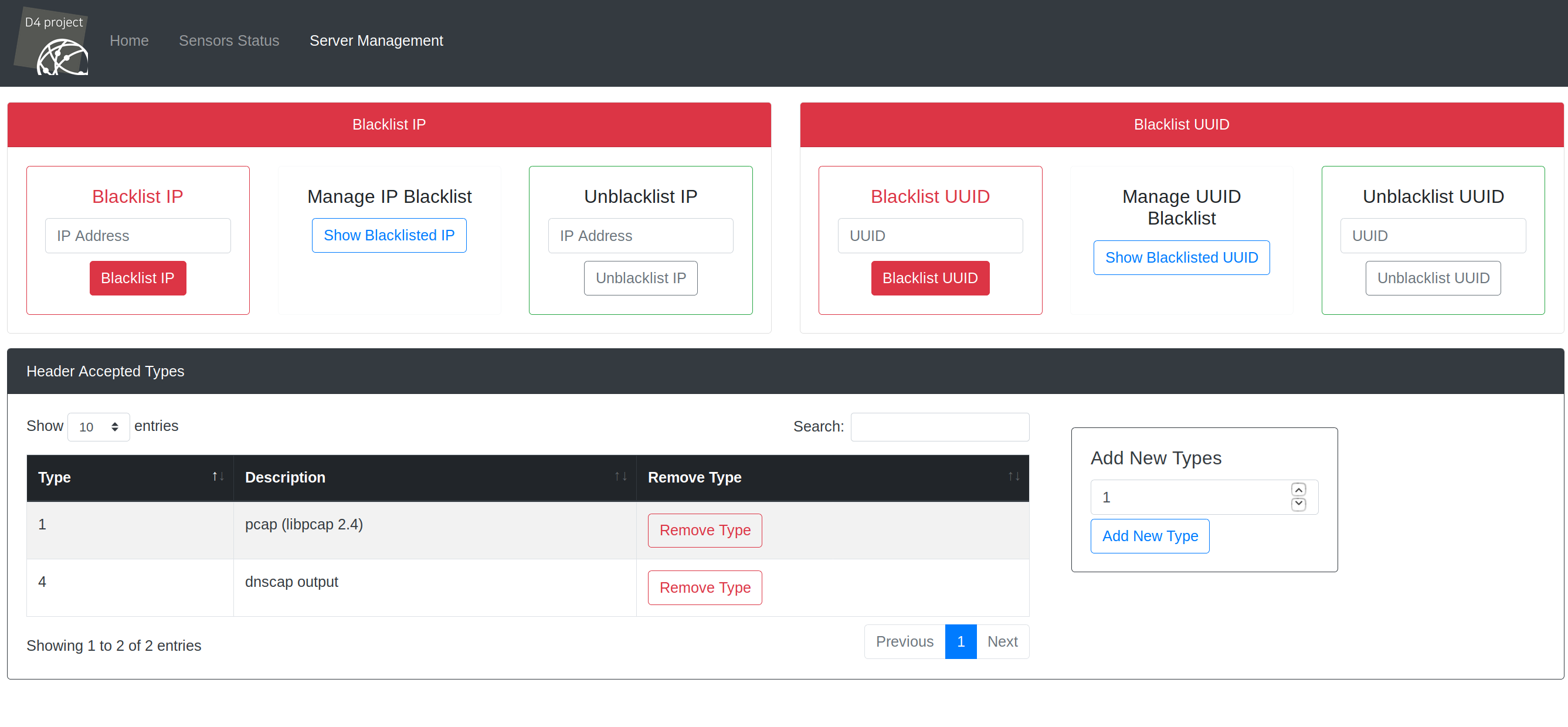

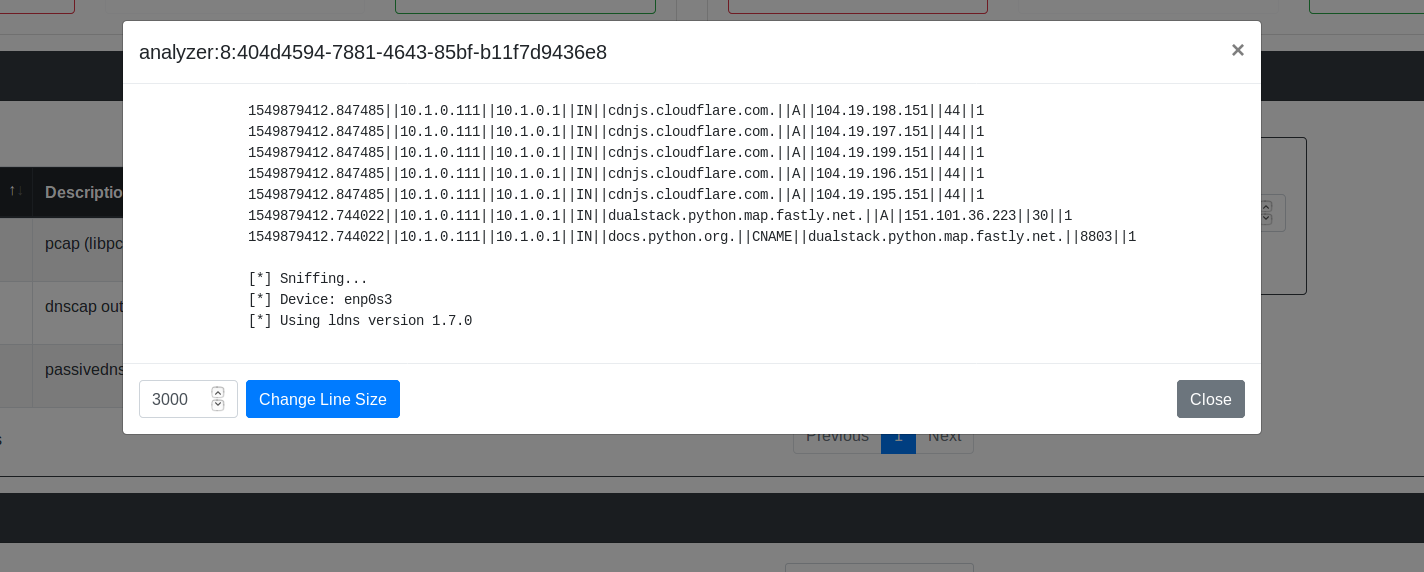

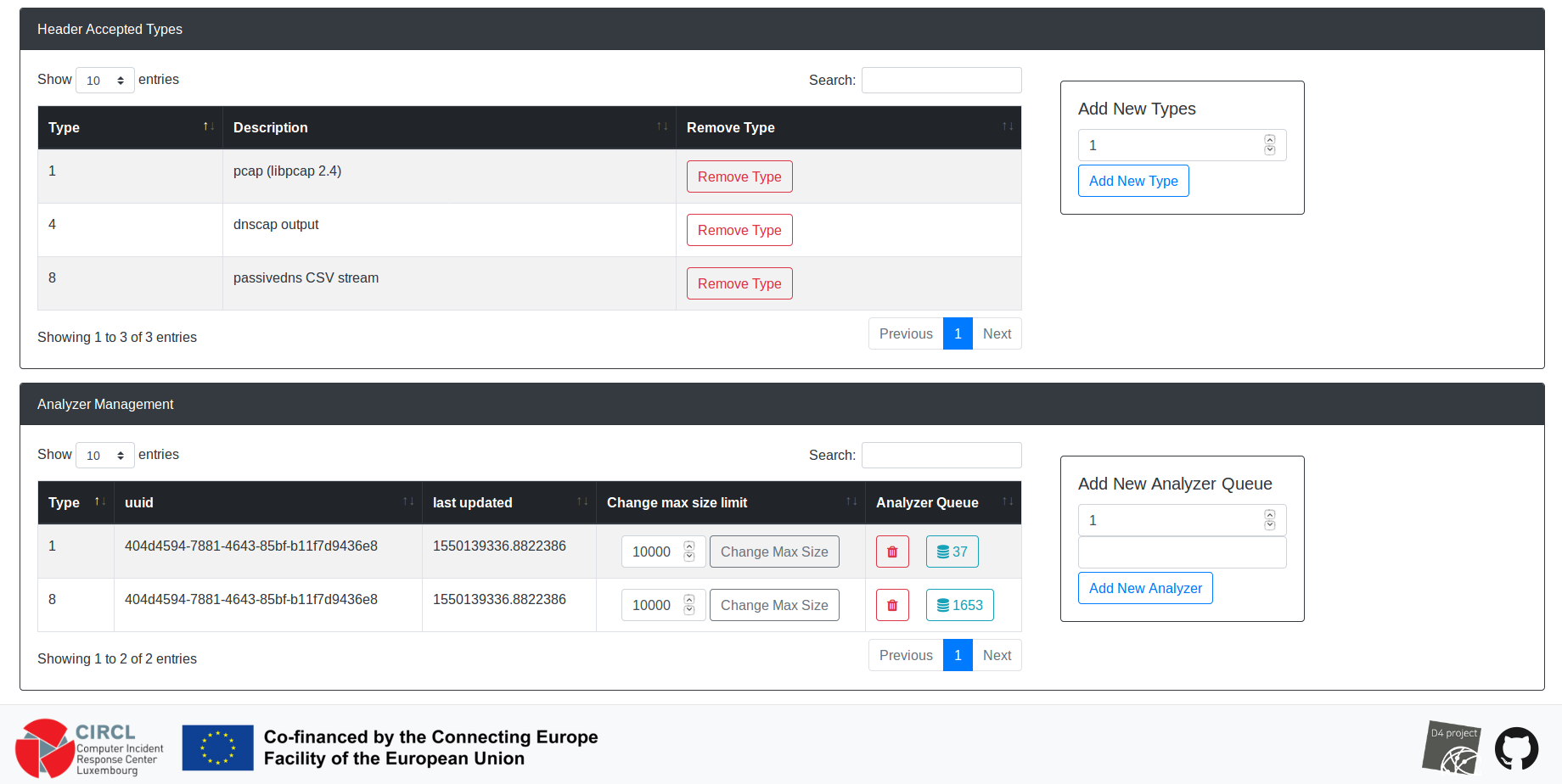

### Screenshots of D4 core server management

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

|

|

|||

|


|

|

@ -2,6 +2,7 @@

|

|||

*.csr

|

||||

*.pem

|

||||

*.key

|

||||

configs/server.conf

|

||||

data/

|

||||

logs/

|

||||

redis/

|

||||

|

|

|

|||

|

|

@ -36,7 +36,7 @@ function helptext {

|

|||

- D4 Twisted server.

|

||||

- All workers manager.

|

||||

- All Redis in memory servers.

|

||||

- Flak server.

|

||||

- Flask server.

|

||||

|

||||

Usage: LAUNCH.sh

|

||||

[-l | --launchAuto]

|

||||

|

|

@ -51,7 +51,7 @@ function launching_redis {

|

|||

|

||||

screen -dmS "Redis_D4"

|

||||

sleep 0.1

|

||||

echo -e $GREEN"\t* Launching D4 Redis ervers"$DEFAULT

|

||||

echo -e $GREEN"\t* Launching D4 Redis Servers"$DEFAULT

|

||||

screen -S "Redis_D4" -X screen -t "6379" bash -c $redis_dir'redis-server '$conf_dir'6379.conf ; read x'

|

||||

sleep 0.1

|

||||

screen -S "Redis_D4" -X screen -t "6380" bash -c $redis_dir'redis-server '$conf_dir'6380.conf ; read x'

|

||||

|

|

@ -72,9 +72,13 @@ function launching_workers {

|

|||

sleep 0.1

|

||||

echo -e $GREEN"\t* Launching D4 Workers"$DEFAULT

|

||||

|

||||

screen -S "Workers_D4" -X screen -t "1_workers_manager" bash -c "cd ${D4_HOME}/workers/workers_1; ./workers_manager.py; read x"

|

||||

screen -S "Workers_D4" -X screen -t "1_workers" bash -c "cd ${D4_HOME}/workers/workers_1; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "4_workers_manager" bash -c "cd ${D4_HOME}/workers/workers_4; ./workers_manager.py; read x"

|

||||

screen -S "Workers_D4" -X screen -t "2_workers" bash -c "cd ${D4_HOME}/workers/workers_2; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "4_workers" bash -c "cd ${D4_HOME}/workers/workers_4; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "8_workers" bash -c "cd ${D4_HOME}/workers/workers_8; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

}

|

||||

|

||||

|

|

@ -159,6 +163,7 @@ function launch_flask {

|

|||

screen -dmS "Flask_D4"

|

||||

sleep 0.1

|

||||

echo -e $GREEN"\t* Launching Flask server"$DEFAULT

|

||||

# screen -S "Flask_D4" -X screen -t "Flask_server" bash -c "cd $flask_dir; export FLASK_DEBUG=1;export FLASK_APP=Flask_server.py; python -m flask run --port 7000; read x"

|

||||

screen -S "Flask_D4" -X screen -t "Flask_server" bash -c "cd $flask_dir; ls; ./Flask_server.py; read x"

|

||||

else

|

||||

echo -e $RED"\t* A Flask_D4 screen is already launched"$DEFAULT

|

||||

|

|

@ -200,9 +205,15 @@ function update_web {

|

|||

fi

|

||||

}

|

||||

|

||||

function update_config {

|

||||

echo -e $GREEN"\t* Updating Config File"$DEFAULT

|

||||

bash -c "(cd ${D4_HOME}/configs; ./update_conf.py -v 0)"

|

||||

}

|

||||

|

||||

function launch_all {

|

||||

helptext;

|

||||

launch_redis;

|

||||

update_config;

|

||||

launch_d4_server;

|

||||

launch_workers;

|

||||

launch_flask;

|

||||

|

|

|

|||

|

|

@ -0,0 +1,68 @@

|

|||

# D4 core

|

||||

|

||||

|

||||

|

||||

## D4 core server

|

||||

|

||||

D4 core server is a complete server to handle clients (sensors) including the decapsulation of the [D4 protocol](https://github.com/D4-project/architecture/tree/master/format), control of

|

||||

sensor registrations, management of decoding protocols and dispatching to adequate decoders/analysers.

|

||||

|

||||

### Requirements

|

||||

|

||||

- Python 3.6

|

||||

- GNU/Linux distribution

|

||||

|

||||

### Installation

|

||||

|

||||

###### Install D4 server

|

||||

~~~~

|

||||

cd server

|

||||

./install_server.sh

|

||||

~~~~

|

||||

Create or add a PEM certificate in [d4-core/server](https://github.com/D4-project/d4-core/tree/master/server):

|

||||

~~~~

|

||||

cd gen_cert

|

||||

./gen_root.sh

|

||||

./gen_cert.sh

|

||||

cd ..

|

||||

~~~~

|

||||

|

||||

|

||||

###### Launch D4 server

|

||||

~~~~

|

||||

./LAUNCH.sh -l

|

||||

~~~~

|

||||

|

||||

The web interface is accessible via `http://127.0.0.1:7000/`

|

||||

|

||||

### Updating web assets

|

||||

To update the JavaScript libraries, run:

|

||||

~~~~

|

||||

cd web

|

||||

./update_web.sh

|

||||

~~~~

|

||||

|

||||

### Notes

|

||||

|

||||

- All server logs are located in ``d4-core/server/logs/``

|

||||

- Close D4 Server: ``./LAUNCH.sh -k``

|

||||

|

||||

### Screenshots of D4 core server management

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Troubleshooting

|

||||

|

||||

###### Worker 1, tcpdump: Permission denied

|

||||

Could be related to AppArmor:

|

||||

~~~~

|

||||

sudo cat /var/log/syslog | grep denied

|

||||

~~~~

|

||||

Run the following command as root:

|

||||

~~~~

|

||||

aa-complain /usr/sbin/tcpdump

|

||||

~~~~

|

||||

|

|

@ -0,0 +1,5 @@

|

|||

[Save_Directories]

|

||||

# By default all data is saved in $D4_HOME/data/

|

||||

use_default_save_directory = yes

|

||||

save_directory = None
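For illustration, a consumer could read these two options with Python's `configparser` along the following lines. This is a sketch under assumptions: the helper name `resolve_save_directory` is hypothetical, and the fallback to `$D4_HOME/data/` simply follows the comment in the sample file rather than the server's actual code.

```python
import configparser

SAMPLE = """
[Save_Directories]
use_default_save_directory = yes
save_directory = None
"""


def resolve_save_directory(config_text, d4_home):
    """Return the directory where data would be saved (hypothetical helper)."""
    config = configparser.ConfigParser()
    config.read_string(config_text)
    section = config['Save_Directories']
    # getboolean() accepts yes/no, true/false, on/off and 1/0.
    if section.getboolean('use_default_save_directory'):
        return d4_home.rstrip('/') + '/data/'
    return section['save_directory']


if __name__ == "__main__":
    print(resolve_save_directory(SAMPLE, '/opt/d4-core/server'))
```

Note that `save_directory = None` is read as the literal string `"None"`, so it is only meaningful when the default directory is in use.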

|

||||

|

||||

|

|

@ -0,0 +1,77 @@

|

|||

#!/usr/bin/env python3

|

||||

|

||||

import os

|

||||

import argparse

|

||||

import configparser

|

||||

|

||||

def print_message(message_to_print, verbose):

|

||||

if verbose:

|

||||

print(message_to_print)

|

||||

|

||||

if __name__ == "__main__":

|

||||

|

||||

# parse parameters

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument('-v', '--verbose',help='Display Info Messages', type=int, default=1, choices=[0, 1])

|

||||

parser.add_argument('-b', '--backup',help='Create Config Backup', type=int, default=1, choices=[0, 1])

|

||||

args = parser.parse_args()

|

||||

if args.verbose == 1:

|

||||

verbose = True

|

||||

else:

|

||||

verbose = False

|

||||

if args.backup == 1:

|

||||

backup = True

|

||||

else:

|

||||

backup = False

|

||||

|

||||

config_file_server = os.path.join(os.environ['D4_HOME'], 'configs/server.conf')

|

||||

config_file_sample = os.path.join(os.environ['D4_HOME'], 'configs/server.conf.sample')

|

||||

config_file_backup = os.path.join(os.environ['D4_HOME'], 'configs/server.conf.backup')

|

||||

|

||||

# Check if config file exists

|

||||

if not os.path.isfile(config_file_server):

|

||||

# create config file

|

||||

with open(config_file_server, 'w') as configfile:

|

||||

with open(config_file_sample, 'r') as config_file_sample:

|

||||

configfile.write(config_file_sample.read())

|

||||

print_message('Config File Created', verbose)

|

||||

else:

|

||||

config_server = configparser.ConfigParser()

|

||||

config_server.read(config_file_server)

|

||||

config_sections = config_server.sections()

|

||||

|

||||

config_sample = configparser.ConfigParser()

|

||||

config_sample.read(config_file_sample)

|

||||

sample_sections = config_sample.sections()

|

||||

|

||||

mew_content_added = False

|

||||

for section in sample_sections:

|

||||

new_key_added = False

|

||||

if section not in config_sections:

|

||||

# add new section

|

||||

config_server.add_section(section)

|

||||

mew_content_added = True

|

||||

for key in config_sample[section]:

|

||||

if key not in config_server[section]:

|

||||

# add new section key

|

||||

config_server.set(section, key, config_sample[section][key])

|

||||

if not new_key_added:

|

||||

print_message('[{}]'.format(section), verbose)

|

||||

new_key_added = True

|

||||

mew_content_added = True

|

||||

print_message(' {} = {}'.format(key, config_sample[section][key]), verbose)

|

||||

|

||||

# new keys have been added to config file

|

||||

if mew_content_added:

|

||||

# backup config file

|

||||

if backup:

|

||||

with open(config_file_backup, 'w') as configfile:

|

||||

with open(config_file_server, 'r') as configfile_origin:

|

||||

configfile.write(configfile_origin.read())

|

||||

print_message('New Backup Created', verbose)

|

||||

# create new config file

|

||||

with open(config_file_server, 'w') as configfile:

|

||||

config_server.write(configfile)

|

||||

print_message('Config file updated', verbose)

|

||||

else:

|

||||

print_message('Nothing to update', verbose)
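The merge step of this script can be summarised in a compact, file-free sketch. It is an illustration rather than a drop-in replacement: `merge_missing` is a hypothetical name, the configs are passed as strings instead of being read from `$D4_HOME/configs/`, and interpolation is disabled here so that values containing `%` are copied as-is.

```python
import configparser


def merge_missing(server_text, sample_text):
    """Copy sections and keys present in the sample but absent from the server config.

    Existing server values are never overwritten. Returns the merged parser
    and the list of (section, key) pairs that were added.
    """
    server = configparser.ConfigParser(interpolation=None)
    server.read_string(server_text)
    sample = configparser.ConfigParser(interpolation=None)
    sample.read_string(sample_text)

    added = []
    for section in sample.sections():
        if not server.has_section(section):
            server.add_section(section)
        for key, value in sample.items(section):
            if not server.has_option(section, key):
                server.set(section, key, value)
                added.append((section, key))
    return server, added


if __name__ == "__main__":
    current = "[Save_Directories]\nuse_default_save_directory = no\n"
    sample = ("[Save_Directories]\nuse_default_save_directory = yes\n"
              "save_directory = None\n")
    merged, added = merge_missing(current, sample)
    print(added)
```

Because only missing keys are copied, local settings survive an update while new options introduced by the sample file become available with their default values.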

|

||||

|

|

@ -2,4 +2,4 @@

|

|||

# Create Root key

|

||||

openssl genrsa -out rootCA.key 4096

|

||||

# Create and Sign the Root CA Certificate

|

||||

openssl req -x509 -new -nodes -key rootCA.key -sha256 -days 1024 -out rootCA.crt

|

||||

openssl req -x509 -new -nodes -key rootCA.key -sha256 -days 1024 -out rootCA.crt -config san.cnf

|

||||

|

|

|

|||

|

|

@ -3,7 +3,7 @@

|

|||

set -e

|

||||

set -x

|

||||

|

||||

sudo apt-get install python3-pip virtualenv screen -y

|

||||

sudo apt-get install python3-pip virtualenv screen whois unzip libffi-dev gcc -y

|

||||

|

||||

if [ -z "$VIRTUAL_ENV" ]; then

|

||||

virtualenv -p python3 D4ENV

|

||||

|

|

|

|||

238

server/server.py

|

|

@ -23,7 +23,8 @@ from twisted.protocols.policies import TimeoutMixin

|

|||

hmac_reset = bytearray(32)

|

||||

hmac_key = b'private key to change'

|

||||

|

||||

accepted_type = [1, 4]

|

||||

accepted_type = [1, 2, 4, 8, 254]

|

||||

accepted_extended_type = ['ja3-jl']

|

||||

|

||||

timeout_time = 30

|

||||

|

||||

|

|

@ -60,40 +61,86 @@ except redis.exceptions.ConnectionError:

|

|||

print('Error: Redis server {}:{}, ConnectionError'.format(host_redis_metadata, port_redis_metadata))

|

||||

sys.exit(1)

|

||||

|

||||

# set hmac default key

|

||||

redis_server_metadata.set('server:hmac_default_key', hmac_key)

|

||||

|

||||

# init redis_server_metadata

|

||||

redis_server_metadata.delete('server:accepted_type')

|

||||

for type in accepted_type:

|

||||

redis_server_metadata.sadd('server:accepted_type', type)

|

||||

redis_server_metadata.delete('server:accepted_extended_type')

|

||||

for type in accepted_extended_type:

|

||||

redis_server_metadata.sadd('server:accepted_extended_type', type)

|

||||

|

||||

class Echo(Protocol, TimeoutMixin):

|

||||

dict_all_connection = {}

|

||||

|

||||

class D4_Server(Protocol, TimeoutMixin):

|

||||

|

||||

def __init__(self):

|

||||

self.buffer = b''

|

||||

self.setTimeout(timeout_time)

|

||||

self.session_uuid = str(uuid.uuid4())

|

||||

self.data_saved = False

|

||||

self.update_stream_type = True

|

||||

self.first_connection = True

|

||||

self.ip = None

|

||||

self.source_port = None

|

||||

self.stream_max_size = None

|

||||

self.hmac_key = None

|

||||

#self.version = None

|

||||

self.type = None

|

||||

self.uuid = None

|

||||

logger.debug('New session: session_uuid={}'.format(self.session_uuid))

|

||||

dict_all_connection[self.session_uuid] = self

|

||||

|

||||

def dataReceived(self, data):

|

||||

self.resetTimeout()

|

||||

ip, source_port = self.transport.client

|

||||

# check blacklisted_ip

|

||||

if redis_server_metadata.sismember('blacklist_ip', ip):

|

||||

self.transport.abortConnection()

|

||||

logger.warning('Blacklisted IP={}, connection closed'.format(ip))

|

||||

# check and kick sensor by uuid

|

||||

for client_uuid in redis_server_stream.smembers('server:sensor_to_kick'):

|

||||

client_uuid = client_uuid.decode()

|

||||

for session_uuid in redis_server_stream.smembers('map:active_connection-uuid-session_uuid:{}'.format(client_uuid)):

|

||||

session_uuid = session_uuid.decode()

|

||||

logger.warning('Sensor kicked uuid={}, session_uuid={}'.format(client_uuid, session_uuid))

|

||||

redis_server_stream.set('temp_blacklist_uuid:{}'.format(client_uuid), 'some random string')

|

||||

redis_server_stream.expire('temp_blacklist_uuid:{}'.format(client_uuid), 30)

|

||||

dict_all_connection[session_uuid].transport.abortConnection()

|

||||

redis_server_stream.srem('server:sensor_to_kick', client_uuid)

|

||||

|

||||

self.process_header(data, ip, source_port)

|

||||

self.resetTimeout()

|

||||

if self.first_connection or self.ip is None:

|

||||

client_info = self.transport.client

|

||||

self.ip = self.extract_ip(client_info[0])

|

||||

self.source_port = client_info[1]

|

||||

logger.debug('New connection, ip={}, port={} session_uuid={}'.format(self.ip, self.source_port, self.session_uuid))

|

||||

# check blacklisted_ip

|

||||

if redis_server_metadata.sismember('blacklist_ip', self.ip):

|

||||

self.transport.abortConnection()

|

||||

logger.warning('Blacklisted IP={}, connection closed'.format(self.ip))

|

||||

else:

|

||||

# process data

|

||||

self.process_header(data, self.ip, self.source_port)

|

||||

|

||||

def timeoutConnection(self):

|

||||

self.resetTimeout()

|

||||

self.buffer = b''

|

||||

logger.debug('buffer timeout, session_uuid={}'.format(self.session_uuid))

|

||||

if self.uuid is None:

|

||||

# # TODO: ban auto

|

||||

logger.warning('Timeout, no D4 header sent, session_uuid={}, connection closed'.format(self.session_uuid))

|

||||

self.transport.abortConnection()

|

||||

else:

|

||||

self.resetTimeout()

|

||||

self.buffer = b''

|

||||

logger.debug('buffer timeout, session_uuid={}'.format(self.session_uuid))

|

||||

|

||||

def connectionMade(self):

|

||||

self.transport.setTcpKeepAlive(1)

|

||||

|

||||

def connectionLost(self, reason):

|

||||

redis_server_stream.sadd('ended_session', self.session_uuid)

|

||||

self.setTimeout(None)

|

||||

redis_server_stream.srem('active_connection:{}'.format(self.type), '{}:{}'.format(self.ip, self.uuid))

|

||||

redis_server_stream.srem('active_connection', '{}'.format(self.uuid))

|

||||

if self.uuid:

|

||||

redis_server_stream.srem('map:active_connection-uuid-session_uuid:{}'.format(self.uuid), self.session_uuid)

|

||||

logger.debug('Connection closed: session_uuid={}'.format(self.session_uuid))

|

||||

dict_all_connection.pop(self.session_uuid)

|

||||

|

||||

def unpack_header(self, data):

|

||||

data_header = {}

|

||||

|

|

@ -104,25 +151,18 @@ class Echo(Protocol, TimeoutMixin):

|

|||

data_header['timestamp'] = struct.unpack('Q', data[18:26])[0]

|

||||

data_header['hmac_header'] = data[26:58]

|

||||

data_header['size'] = struct.unpack('I', data[58:62])[0]

|

||||

return data_header

|

||||

|

||||

# uuid blacklist

|

||||

if redis_server_metadata.sismember('blacklist_uuid', data_header['uuid_header']):

|

||||

self.transport.abortConnection()

|

||||

logger.warning('Blacklisted UUID={}, connection closed'.format(data_header['uuid_header']))

|

||||

|

||||

# check default size limit

|

||||

if data_header['size'] > data_default_size_limit:

|

||||

self.transport.abortConnection()

|

||||

logger.warning('Incorrect header data size: the server received more data than expected by default, expected={}, received={} , uuid={}, session_uuid={}'.format(data_default_size_limit, data_header['size'] ,data_header['uuid_header'], self.session_uuid))

|

||||

|

||||

# Worker: Incorrect type

|

||||

if redis_server_stream.sismember('Error:IncorrectType:{}'.format(data_header['type']), self.session_uuid):

|

||||

self.transport.abortConnection()

|

||||

redis_server_stream.delete(stream_name)

|

||||

redis_server_stream.srem('Error:IncorrectType:{}'.format(data_header['type']), self.session_uuid)

|

||||

logger.warning('Incorrect type={} detected by worker, uuid={}, session_uuid={}'.format(data_header['type'] ,data_header['uuid_header'], self.session_uuid))

|

||||

|

||||

return data_header

|

||||

    def extract_ip(self, ip_string):
        #remove interface
        ip_string = ip_string.split('%')[0]
        # IPv4
        #extract ipv4
        if '.' in ip_string:
            return ip_string.split(':')[-1]
        # IPv6
        else:
            return ip_string
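Review note: because the server now listens on `'::'`, IPv4 peers can show up as IPv4-mapped IPv6 addresses such as `::ffff:192.0.2.10`, which is the case the string splitting in `extract_ip` handles. The following is a sketch of an alternative based on the standard `ipaddress` module. The name `extract_ip_strict` is hypothetical and not part of the commit. Unlike the original, it raises `ValueError` on malformed input and normalises the IPv6 text form.

```python
import ipaddress


def extract_ip_strict(ip_string):
    """Strip the zone id, then unwrap IPv4-mapped IPv6 addresses."""
    addr = ipaddress.ip_address(ip_string.split('%')[0])
    # Only IPv6Address objects carry ipv4_mapped; it is None when the
    # address is not of the form ::ffff:a.b.c.d.
    mapped = getattr(addr, 'ipv4_mapped', None)
    return str(mapped) if mapped is not None else str(addr)


examples = {
    '::ffff:192.0.2.10': extract_ip_strict('::ffff:192.0.2.10'),
    'fe80::1%eth0': extract_ip_strict('fe80::1%eth0'),
    '198.51.100.7': extract_ip_strict('198.51.100.7'),
}
```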

|

||||

|

||||

def is_valid_uuid_v4(self, header_uuid):

|

||||

try:

|

||||

|

|

@ -143,14 +183,103 @@ class Echo(Protocol, TimeoutMixin):

|

|||

logger.info('Invalid Header, uuid={}, session_uuid={}'.format(uuid_to_check, self.session_uuid))

|

||||

return False

|

||||

|

||||

    def check_connection_validity(self, data_header):
        # blacklist ip by uuid
        if redis_server_metadata.sismember('blacklist_ip_by_uuid', data_header['uuid_header']):
            redis_server_metadata.sadd('blacklist_ip', self.ip)
            self.transport.abortConnection()
            logger.warning('Blacklisted IP by UUID={}, connection closed'.format(data_header['uuid_header']))
            return False

        # uuid blacklist
        if redis_server_metadata.sismember('blacklist_uuid', data_header['uuid_header']):
            logger.warning('Blacklisted UUID={}, connection closed'.format(data_header['uuid_header']))
            self.transport.abortConnection()
            return False

        # check temp blacklist
        if redis_server_stream.exists('temp_blacklist_uuid:{}'.format(data_header['uuid_header'])):
            logger.warning('Temporarily Blacklisted UUID={}, connection closed'.format(data_header['uuid_header']))
            redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: This UUID is temporarily blacklisted')
            self.transport.abortConnection()
            return False

        # check default size limit
        if data_header['size'] > data_default_size_limit:
            self.transport.abortConnection()
            logger.warning('Incorrect header data size: the server received more data than expected by default, expected={}, received={} , uuid={}, session_uuid={}'.format(data_default_size_limit, data_header['size'] ,data_header['uuid_header'], self.session_uuid))
            return False

        # Worker: Incorrect type
        if redis_server_stream.sismember('Error:IncorrectType', self.session_uuid):
            self.transport.abortConnection()
            redis_server_stream.delete('stream:{}:{}'.format(data_header['type'], self.session_uuid))
            redis_server_stream.srem('Error:IncorrectType', self.session_uuid)
            logger.warning('Incorrect type={} detected by worker, uuid={}, session_uuid={}'.format(data_header['type'] ,data_header['uuid_header'], self.session_uuid))
            return False

        return True

|

||||

|

||||

def process_header(self, data, ip, source_port):

|

||||

if not self.buffer:

|

||||

data_header = self.unpack_header(data)

|

||||

if data_header:

|

||||

if not self.check_connection_validity(data_header):

|

||||

return 1

|

||||

if self.is_valid_header(data_header['uuid_header'], data_header['type']):

|

||||

|

||||

# auto kill connection # TODO: map type

|

||||

if self.first_connection:

|

||||

self.first_connection = False

|

||||

if redis_server_stream.sismember('active_connection:{}'.format(data_header['type']), '{}:{}'.format(ip, data_header['uuid_header'])):

|

||||

# same IP-type for an UUID

|

||||

logger.warning('is using the same UUID for one type, ip={} uuid={} type={} session_uuid={}'.format(ip, data_header['uuid_header'], data_header['type'], self.session_uuid))

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: This UUID is using the same UUID for one type={}'.format(data_header['type']))

|

||||

self.transport.abortConnection()

|

||||

return 1

|

||||

else:

|

||||

#self.version = None

|

||||

# check if type change

|

||||

if self.data_saved:

|

||||

# type change detected

|

||||

if self.type != data_header['type']:

|

||||

# Meta types

|

||||

if self.type == 2 and data_header['type'] == 254:

|

||||

self.update_stream_type = True

|

||||

# Type Error

|

||||

else:

|

||||

logger.warning('Unexpected type change, type={} new type={}, ip={} uuid={} session_uuid={}'.format(self.type, data_header['type'], ip, data_header['uuid_header'], self.session_uuid))

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: Unexpected type change type={}, new type={}'.format(self.type, data_header['type']))

|

||||

self.transport.abortConnection()

|

||||

return 1

|

||||

# type 254, check if previous type 2 saved

|

||||

elif data_header['type'] == 254:

|

||||

logger.warning('a type 2 packet must be sent, ip={} uuid={} type={} session_uuid={}'.format(ip, data_header['uuid_header'], data_header['type'], self.session_uuid))

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: a type 2 packet must be sent, type={}'.format(data_header['type']))

|

||||

self.transport.abortConnection()

|

||||

return 1

|

||||

self.type = data_header['type']

|

||||

self.uuid = data_header['uuid_header']

|

||||

#active Connection

|

||||

redis_server_stream.sadd('active_connection:{}'.format(self.type), '{}:{}'.format(ip, self.uuid))

|

||||

redis_server_stream.sadd('active_connection', '{}'.format(self.uuid))

|

||||

# map session_uuid/uuid

|

||||

redis_server_stream.sadd('map:active_connection-uuid-session_uuid:{}'.format(self.uuid), self.session_uuid)

|

||||

|

||||

# check if the uuid is the same

|

||||

if self.uuid != data_header['uuid_header']:

|

||||

logger.warning('The uuid change during the connection, ip={} uuid={} type={} session_uuid={} new_uuid={}'.format(ip, self.uuid, data_header['type'], self.session_uuid, data_header['uuid_header']))

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: The uuid change, new_uuid={}'.format(data_header['uuid_header']))

|

||||

self.transport.abortConnection()

|

||||

return 1

|

||||

## TODO: ban ?

|

||||

|

||||

# check data size

|

||||

if data_header['size'] == (len(data) - header_size):

|

||||

self.process_d4_data(data, data_header, ip)

|

||||

res = self.process_d4_data(data, data_header, ip)

|

||||

# Error detected, kill connection

|

||||

if res == 1:

|

||||

return 1

|

||||

# multiple d4 headers

|

||||

elif data_header['size'] < (len(data) - header_size):

|

||||

next_data = data[data_header['size'] + header_size:]

|

||||

|

|

@ -159,7 +288,10 @@ class Echo(Protocol, TimeoutMixin):

|

|||

#print(data)

|

||||

#print()

|

||||

#print(next_data)

|

||||

self.process_d4_data(data, data_header, ip)

|

||||

res = self.process_d4_data(data, data_header, ip)

|

||||

# Error detected, kill connection

|

||||

if res == 1:

|

||||

return 1

|

||||

# process next d4 header

|

||||

self.process_header(next_data, ip, source_port)

|

||||

# data_header['size'] > (len(data) - header_size)

|

||||

|

|

@ -210,7 +342,12 @@ class Echo(Protocol, TimeoutMixin):

|

|||

self.buffer = b''

|

||||

# set hmac_header to 0

|

||||

data = data.replace(data_header['hmac_header'], hmac_reset, 1)

|

||||

HMAC = hmac.new(hmac_key, msg=data, digestmod='sha256')

|

||||

if self.hmac_key is None:

|

||||

self.hmac_key = redis_server_metadata.hget('metadata_uuid:{}'.format(data_header['uuid_header']), 'hmac_key')

|

||||

if self.hmac_key is None:

|

||||

self.hmac_key = redis_server_metadata.get('server:hmac_default_key')

|

||||

|

||||

HMAC = hmac.new(self.hmac_key, msg=data, digestmod='sha256')

|

||||

data_header['hmac_header'] = data_header['hmac_header'].hex()

|

||||

|

||||

### Debug ###
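Review note: the hunk above zeroes the HMAC field before recomputing the digest with a per-UUID key (falling back to a server default). A minimal standalone sketch of that check follows. It is an illustration under stated assumptions, not the committed code: `sign_packet` and `verify_packet` are hypothetical helpers, the field offsets come from the `data[26:58]` slice in `unpack_header`, and the field is zeroed by slicing rather than by `data.replace(..., 1)`, which would also match an identical byte run that happens to occur earlier in the packet. The key is assumed to be `bytes`.

```python
import hmac

HMAC_START, HMAC_END = 26, 58      # position of hmac_header in the packet
HMAC_RESET = bytes(HMAC_END - HMAC_START)


def _zero_hmac_field(packet):
    """Return a copy of the packet with the HMAC field set to zero bytes."""
    return packet[:HMAC_START] + HMAC_RESET + packet[HMAC_END:]


def sign_packet(key, packet):
    """Compute the SHA-256 HMAC over the zeroed packet and store it in place."""
    digest = hmac.new(key, msg=_zero_hmac_field(packet), digestmod='sha256').digest()
    return packet[:HMAC_START] + digest + packet[HMAC_END:]


def verify_packet(key, packet):
    """Recompute the HMAC and compare it in constant time."""
    received = packet[HMAC_START:HMAC_END]
    expected = hmac.new(key, msg=_zero_hmac_field(packet), digestmod='sha256').digest()
    return hmac.compare_digest(received, expected)


key = b'example-key'
signed = sign_packet(key, bytes(range(62)) + b'payload')
```

`hmac.compare_digest` is used here instead of `==` so that the comparison time does not depend on how many leading bytes match.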

|

||||

|

|

@ -234,6 +371,8 @@ class Echo(Protocol, TimeoutMixin):

|

|||

|

||||

date = datetime.datetime.now().strftime("%Y%m%d")

|

||||

if redis_server_stream.xlen('stream:{}:{}'.format(data_header['type'], self.session_uuid)) < self.stream_max_size:

|

||||

# Clean Error Message

|

||||

redis_server_metadata.hdel('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error')

|

||||

|

||||

redis_server_stream.xadd('stream:{}:{}'.format(data_header['type'], self.session_uuid), {'message': data[header_size:], 'uuid': data_header['uuid_header'], 'timestamp': data_header['timestamp'], 'version': data_header['version']})

|

||||

|

||||

|

|

@ -244,24 +383,44 @@ class Echo(Protocol, TimeoutMixin):

|

|||

redis_server_metadata.zincrby('daily_ip:{}'.format(date), 1, ip)

|

||||

redis_server_metadata.zincrby('daily_type:{}'.format(date), 1, data_header['type'])

|

||||

redis_server_metadata.zincrby('stat_type_uuid:{}:{}'.format(date, data_header['type']), 1, data_header['uuid_header'])

|

||||

redis_server_metadata.zincrby('stat_uuid_type:{}:{}'.format(date, data_header['uuid_header']), 1, data_header['type'])

|

||||

|

||||

#

|

||||

if not redis_server_metadata.hexists('metadata_uuid:{}'.format(data_header['uuid_header']), 'first_seen'):

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'first_seen', data_header['timestamp'])

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'last_seen', data_header['timestamp'])

|

||||

redis_server_metadata.hset('metadata_type_by_uuid:{}:{}'.format(data_header['uuid_header'], data_header['type']), 'last_seen', data_header['timestamp'])

|

||||

|

||||

if not self.data_saved:

|

||||

#UUID IP: ## TODO: use d4 timestamp ?

|

||||

redis_server_metadata.lpush('list_uuid_ip:{}'.format(data_header['uuid_header']), '{}-{}'.format(ip, datetime.datetime.now().strftime("%Y%m%d%H%M%S")))

|

||||

redis_server_metadata.ltrim('list_uuid_ip:{}'.format(data_header['uuid_header']), 0, 15)

|

||||

|

||||

self.data_saved = True

|

||||

if self.update_stream_type:

|

||||

redis_server_stream.sadd('session_uuid:{}'.format(data_header['type']), self.session_uuid.encode())

|

||||

redis_server_stream.hset('map-type:session_uuid-uuid:{}'.format(data_header['type']), self.session_uuid, data_header['uuid_header'])

|

||||

self.data_saved = True

|

||||

redis_server_metadata.sadd('all_types_by_uuid:{}'.format(data_header['uuid_header']), data_header['type'])

|

||||

|

||||

if not redis_server_metadata.hexists('metadata_type_by_uuid:{}:{}'.format(data_header['uuid_header'], data_header['type']), 'first_seen'):

|

||||

redis_server_metadata.hset('metadata_type_by_uuid:{}:{}'.format(data_header['uuid_header'], data_header['type']), 'first_seen', data_header['timestamp'])

|

||||

self.update_stream_type = False

|

||||

return 0

|

||||

else:

|

||||

logger.warning("stream exceed max entries limit, uuid={}, session_uuid={}, type={}".format(data_header['uuid_header'], self.session_uuid, data_header['type']))

|

||||

## TODO: FIXME

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: stream exceed max entries limit')

|

||||

|

||||

self.transport.abortConnection()

|

||||

return 1

|

||||

else:

|

||||

print('hmac do not match')

|

||||

print(data)

|

||||

logger.debug("HMAC don't match, uuid={}, session_uuid={}".format(data_header['uuid_header'], self.session_uuid))

|

||||

|

||||

## TODO: FIXME

|

||||

redis_server_metadata.hset('metadata_uuid:{}'.format(data_header['uuid_header']), 'Error', 'Error: HMAC don\'t match')

|

||||

self.transport.abortConnection()

|

||||

return 1

|

||||

|

||||

|

||||

def main(reactor):

|

||||

|

|

@ -273,8 +432,9 @@ def main(reactor):

|

|||

print(e)

|

||||

sys.exit(1)

|

||||

certificate = ssl.PrivateCertificate.loadPEM(certData)

|

||||

factory = protocol.Factory.forProtocol(Echo)

|

||||

reactor.listenSSL(4443, factory, certificate.options())

|

||||

factory = protocol.Factory.forProtocol(D4_Server)

|

||||

# use interface to support both IPv4 and IPv6

|

||||

reactor.listenSSL(4443, factory, certificate.options(), interface='::')

|

||||

return defer.Deferred()

|

||||

|

||||

|

||||

|

|

@ -283,6 +443,9 @@ if __name__ == "__main__":

|

|||

parser.add_argument('-v', '--verbose',help='dddd' , type=int, default=30)

|

||||

args = parser.parse_args()

|

||||

|

||||

if not redis_server_metadata.exists('first_date'):

|

||||

redis_server_metadata.set('first_date', datetime.datetime.now().strftime("%Y%m%d"))

|

||||

|

||||

logs_dir = 'logs'

|

||||

if not os.path.isdir(logs_dir):

|

||||

os.makedirs(logs_dir)

|

||||

|

|

@ -298,4 +461,5 @@ if __name__ == "__main__":

|

|||

logger.setLevel(args.verbose)

|

||||

|

||||

logger.info('Launching Server ...')

|

||||

|

||||

task.react(main)

|

||||

|

|

|

|||

|

|

@ -2,11 +2,17 @@

|

|||

# -*-coding:UTF-8 -*

|

||||

|

||||

import os

|

||||

import re

|

||||

import sys

|

||||

import uuid

|

||||

import time

|

||||

import json

|

||||

import redis

|

||||

import flask

|

||||

import datetime

|

||||

import ipaddress

|

||||

|

||||

import subprocess

|

||||

|

||||

from flask import Flask, render_template, jsonify, request, Blueprint, redirect, url_for

|

||||

|

||||

|

|

@ -14,6 +20,22 @@ baseUrl = ''

|

|||

if baseUrl != '':

|

||||

baseUrl = '/'+baseUrl

|

||||

|

||||

host_redis_stream = "localhost"

|

||||

port_redis_stream = 6379

|

||||

|

||||

default_max_entries_by_stream = 10000

|

||||

analyzer_list_max_default_size = 10000

|

||||

|

||||

default_analyzer_max_line_len = 3000

|

||||

|

||||

json_type_description_path = os.path.join(os.environ['D4_HOME'], 'web/static/json/type.json')

|

||||

|

||||

redis_server_stream = redis.StrictRedis(

|

||||

host=host_redis_stream,

|

||||

port=port_redis_stream,

|

||||

db=0,

|

||||

decode_responses=True)

|

||||

|

||||

host_redis_metadata = "localhost"

|

||||

port_redis_metadata= 6380

|

||||

|

||||

|

|

@ -23,13 +45,89 @@ redis_server_metadata = redis.StrictRedis(

|

|||

db=0,

|

||||

decode_responses=True)

|

||||

|

||||

redis_server_analyzer = redis.StrictRedis(

|

||||

host=host_redis_metadata,

|

||||

port=port_redis_metadata,

|

||||

db=2,

|

||||

decode_responses=True)

|

||||

|

||||

with open(json_type_description_path, 'r') as f:

|

||||

json_type = json.loads(f.read())

|

||||

json_type_description = {}

|

||||

for type_info in json_type:

|

||||

json_type_description[type_info['type']] = type_info

|

||||

|

||||

app = Flask(__name__, static_url_path=baseUrl+'/static/')

|

||||

app.config['MAX_CONTENT_LENGTH'] = 900 * 1024 * 1024

|

||||

|

||||

# ========== FUNCTIONS ============
def is_valid_uuid_v4(header_uuid):
    try:
        header_uuid=header_uuid.replace('-', '')
        uuid_test = uuid.UUID(hex=header_uuid, version=4)
        return uuid_test.hex == header_uuid
    except:
        return False

def is_valid_ip(ip):
    try:
        ipaddress.ip_address(ip)
        return True
    except ValueError:
        return False

def is_valid_network(ip_network):
    try:
        ipaddress.ip_network(ip_network)
        return True
    except ValueError:
        return False

|

||||

|

||||

# server_management input handler
def get_server_management_input_handler_value(value):
    if value is not None:
        if value !="0":
            try:
                value=int(value)
            except:
                value=0
        else:
            value=0
    return value

def get_json_type_description():
    return json_type_description

def get_whois_ouput(ip):
    if is_valid_ip(ip):
        process = subprocess.run(["whois", ip], stdout=subprocess.PIPE)
        return re.sub(r"#.*\n?", '', process.stdout.decode()).lstrip('\n').rstrip('\n')
    else:
        return ''

|

||||

|

||||

def get_substract_date_range(num_day, date_from=None):
    if date_from is None:
        date_from = datetime.datetime.now()
    else:
        date_from = datetime.date(int(date_from[0:4]), int(date_from[4:6]), int(date_from[6:8]))

    l_date = []
    for i in range(num_day):
        date = date_from - datetime.timedelta(days=i)
        l_date.append( date.strftime('%Y%m%d') )
    return list(reversed(l_date))
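Review note: `get_substract_date_range` returns the `num_day` days ending at `date_from`, oldest first, as `YYYYMMDD` strings, which matches the `daily_*:{date}` key suffixes used elsewhere in this change. The sketch below reproduces that behaviour in a standalone form so it can be checked without Flask or Redis. The name `date_range_ending` is hypothetical, and parsing with `strptime` is a substitution for the manual slicing in the original.

```python
import datetime


def date_range_ending(num_day, date_from=None):
    """Return num_day consecutive YYYYMMDD strings ending at date_from."""
    if date_from is None:
        end = datetime.date.today()
    else:
        end = datetime.datetime.strptime(date_from, '%Y%m%d').date()
    # Walk backwards from the end date, then emit oldest first.
    return [(end - datetime.timedelta(days=i)).strftime('%Y%m%d')
            for i in reversed(range(num_day))]


days = date_range_ending(3, '20190302')
print(days)  # ['20190228', '20190301', '20190302']
```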

|

||||

|

||||

# ========== ERRORS ============

|

||||

|

||||

@app.errorhandler(404)

|

||||

def page_not_found(e):

|

||||

return render_template('404.html'), 404

|

||||

|

||||

# ========== ROUTES ============

|

||||

@app.route('/')

|

||||

def index():

|

||||

return render_template("index.html")

|

||||

date = datetime.datetime.now().strftime("%Y/%m/%d")

|

||||

return render_template("index.html", date=date)

|

||||

|

||||

@app.route('/_json_daily_uuid_stats')

|

||||

def _json_daily_uuid_stats():

|

||||

|

|

@ -42,5 +140,632 @@ def _json_daily_uuid_stats():

|

|||

|

||||

return jsonify(data_daily_uuid)

|

||||

|

||||

@app.route('/_json_daily_type_stats')
def _json_daily_type_stats():
    date = datetime.datetime.now().strftime("%Y%m%d")
    daily_uuid = redis_server_metadata.zrange('daily_type:{}'.format(date), 0, -1, withscores=True)
    json_type_description = get_json_type_description()

    data_daily_uuid = []
    for result in daily_uuid:
        try:
            type_description = json_type_description[int(result[0])]['description']
        except:
            type_description = 'Please update your web server'
        data_daily_uuid.append({"key": '{}: {}'.format(result[0], type_description), "value": int(result[1])})

    return jsonify(data_daily_uuid)

|

||||

|

||||

@app.route('/sensors_status')
def sensors_status():
    active_connection_filter = request.args.get('active_connection_filter')
    if active_connection_filter is None:
        active_connection_filter = False
    else:
        if active_connection_filter=='True':
            active_connection_filter = True
        else:
            active_connection_filter = False

    date = datetime.datetime.now().strftime("%Y%m%d")

    if not active_connection_filter:
        daily_uuid = redis_server_metadata.zrange('daily_uuid:{}'.format(date), 0, -1)
    else:
        daily_uuid = redis_server_stream.smembers('active_connection')

    status_daily_uuid = []
    for result in daily_uuid:
        first_seen = redis_server_metadata.hget('metadata_uuid:{}'.format(result), 'first_seen')
        first_seen_gmt = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(int(first_seen)))
        last_seen = redis_server_metadata.hget('metadata_uuid:{}'.format(result), 'last_seen')
        last_seen_gmt = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(int(last_seen)))
        if redis_server_metadata.sismember('blacklist_ip_by_uuid', result):
            Error = "All IP using this UUID are Blacklisted"
        elif redis_server_metadata.sismember('blacklist_uuid', result):
            Error = "Blacklisted UUID"
        else:
            Error = redis_server_metadata.hget('metadata_uuid:{}'.format(result), 'Error')
        if redis_server_stream.sismember('active_connection', result):
            active_connection = True
        else:
            active_connection = False

        if first_seen is not None and last_seen is not None:
            status_daily_uuid.append({"uuid": result,"first_seen": first_seen, "last_seen": last_seen,
                                        "active_connection": active_connection,
                                        "first_seen_gmt": first_seen_gmt, "last_seen_gmt": last_seen_gmt, "Error": Error})

    return render_template("sensors_status.html", status_daily_uuid=status_daily_uuid,
                                active_connection_filter=active_connection_filter)

|

||||

|

||||

@app.route('/show_active_uuid')
def show_active_uuid():
    #swap switch value
    active_connection_filter = request.args.get('show_active_connection')
    if active_connection_filter is None:
        active_connection_filter = True
    else:
        if active_connection_filter=='True':
            active_connection_filter = False
        else:
            active_connection_filter = True

    return redirect(url_for('sensors_status', active_connection_filter=active_connection_filter))

|

||||

|

||||

@app.route('/server_management')
def server_management():
    blacklisted_ip = request.args.get('blacklisted_ip')
    unblacklisted_ip = request.args.get('unblacklisted_ip')
    blacklisted_uuid = request.args.get('blacklisted_uuid')
    unblacklisted_uuid = request.args.get('unblacklisted_uuid')

    blacklisted_ip = get_server_management_input_handler_value(blacklisted_ip)
    unblacklisted_ip = get_server_management_input_handler_value(unblacklisted_ip)
    blacklisted_uuid = get_server_management_input_handler_value(blacklisted_uuid)
    unblacklisted_uuid = get_server_management_input_handler_value(unblacklisted_uuid)

    json_type_description = get_json_type_description()

    list_accepted_types = []
    list_analyzer_types = []
    for type in redis_server_metadata.smembers('server:accepted_type'):
        try:
            description = json_type_description[int(type)]['description']
        except:
            description = 'Please update your web server'

        list_analyzer_uuid = []
        for analyzer_uuid in redis_server_metadata.smembers('analyzer:{}'.format(type)):
            size_limit = redis_server_metadata.hget('analyzer:{}'.format(analyzer_uuid), 'max_size')
            if size_limit is None:
                size_limit = analyzer_list_max_default_size
            last_updated = redis_server_metadata.hget('analyzer:{}'.format(analyzer_uuid), 'last_updated')
            if last_updated is None:
                last_updated = 'Never'
            else:
                last_updated = datetime.datetime.fromtimestamp(float(last_updated)).strftime('%Y-%m-%d %H:%M:%S')
            description_analyzer = redis_server_metadata.hget('analyzer:{}'.format(analyzer_uuid), 'description')
            if description_analyzer is None:
                description_analyzer = ''
            len_queue = redis_server_analyzer.llen('analyzer:{}:{}'.format(type, analyzer_uuid))
            if len_queue is None:
                len_queue = 0
            list_analyzer_uuid.append({'uuid': analyzer_uuid, 'description': description_analyzer, 'size_limit': size_limit,'last_updated': last_updated, 'length': len_queue})

        list_accepted_types.append({"id": int(type), "description": description, 'list_analyzer_uuid': list_analyzer_uuid})

    list_accepted_extended_types = []
    for extended_type in redis_server_metadata.smembers('server:accepted_extended_type'):

        list_analyzer_uuid = []
        for analyzer_uuid in redis_server_metadata.smembers('analyzer:254:{}'.format(extended_type)):
            size_limit = redis_server_metadata.hget('analyzer:{}'.format(analyzer_uuid), 'max_size')
            if size_limit is None:
                size_limit = analyzer_list_max_default_size
            last_updated = redis_server_metadata.hget('analyzer:{}'.format(analyzer_uuid), 'last_updated')
            if last_updated is None:
                last_updated = 'Never'
            else:
                last_updated = datetime.datetime.fromtimestamp(float(last_updated)).strftime('%Y-%m-%d %H:%M:%S')
            description_analyzer = redis_server_metadata.hget('analyzer:{}'.format(analyzer_uuid), 'description')
            if description_analyzer is None:
                description_analyzer = ''
            len_queue = redis_server_analyzer.llen('analyzer:{}:{}'.format(extended_type, analyzer_uuid))
            if len_queue is None:
                len_queue = 0
            list_analyzer_uuid.append({'uuid': analyzer_uuid, 'description': description_analyzer, 'size_limit': size_limit,'last_updated': last_updated, 'length': len_queue})

        list_accepted_extended_types.append({"name": extended_type, 'list_analyzer_uuid': list_analyzer_uuid})

    return render_template("server_management.html", list_accepted_types=list_accepted_types, list_accepted_extended_types=list_accepted_extended_types,
                            default_analyzer_max_line_len=default_analyzer_max_line_len,
                            blacklisted_ip=blacklisted_ip, unblacklisted_ip=unblacklisted_ip,
                            blacklisted_uuid=blacklisted_uuid, unblacklisted_uuid=unblacklisted_uuid)

|

||||

|

||||

@app.route('/uuid_management')

|

||||

def uuid_management():

|

||||

uuid_sensor = request.args.get('uuid')

|

||||

if is_valid_uuid_v4(uuid_sensor):

|

||||

|

||||

first_seen = redis_server_metadata.hget('metadata_uuid:{}'.format(uuid_sensor), 'first_seen')

|

||||

first_seen_gmt = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(int(first_seen)))

|

||||

last_seen = redis_server_metadata.hget('metadata_uuid:{}'.format(uuid_sensor), 'last_seen')

|

||||

last_seen_gmt = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(int(last_seen)))

|

||||

Error = redis_server_metadata.hget('metadata_uuid:{}'.format(uuid_sensor), 'Error')

|

||||

if redis_server_stream.exists('temp_blacklist_uuid:{}'.format(uuid_sensor)):

|

||||

temp_blacklist_uuid = True

|

||||

else:

|

||||

temp_blacklist_uuid = False

|

||||

if redis_server_metadata.sismember('blacklist_uuid', uuid_sensor):

|

||||

blacklisted_uuid = True

|

||||

Error = "Blacklisted UUID"

|

||||

else:

|

||||

blacklisted_uuid = False

|

||||

if redis_server_metadata.sismember('blacklist_ip_by_uuid', uuid_sensor):

|

||||

blacklisted_ip_by_uuid = True

|

||||

Error = "All IP using this UUID are Blacklisted"

|

||||

else:

|

||||

blacklisted_ip_by_uuid = False

|

||||

data_uuid= {"first_seen": first_seen, "last_seen": last_seen,

|

||||

"temp_blacklist_uuid": temp_blacklist_uuid,

|

||||

"blacklisted_uuid": blacklisted_uuid, "blacklisted_ip_by_uuid": blacklisted_ip_by_uuid,

|

||||

"first_seen_gmt": first_seen_gmt, "last_seen_gmt": last_seen_gmt, "Error": Error}

|

||||

|

||||

if redis_server_stream.sismember('active_connection', uuid_sensor):

|

||||

active_connection = True

|

||||

else:

|

||||

active_connection = False

|

||||

|

||||

max_uuid_stream = redis_server_metadata.hget('stream_max_size_by_uuid', uuid_sensor)

|

||||