Compare commits

120 Commits

| Author | SHA1 | Date |
|---|---|---|
| | cb3d618ee1 | |
| | 27aa5b1df9 | |
| | 2e8ddd490f | |
| | 090d0f66bb | |
| | dfd53c126b | |
| | 6273a220b2 | |
| | 81686aa022 | |
| | 399e659d8f | |
| | b2f463e8f1 | |
| | adf0f6008b | |
| | 39d593364d | |
| | cbb90c057a | |
| | b7998d5601 | |
| | dc3cdcbc1c | |
| | 6c3c9f9954 | |
| | a6d5a3d22c | |
| | 36a771ea2d | |
| | ef6e87f3c5 | |
| | 5a3e299332 | |
| | d74d2fb71a | |
| | cf64529929 | |
| | 893631e003 | |
| | ac301b5360 | |
| | 04fab82f5e | |
| | a297cef179 | |
| | 3edf227cc1 | |
| | 2d358918c9 | |
| | 47f82c8879 | |
| | 6fee7df9fe | |
| | 4b30072880 | |
| | 7ce265e477 | |
| | 168c31a5bf | |
| | adda78faad | |
| | df482d6ee3 | |
| | 609402ebf2 | |
| | 98e562bd47 | |
| | f17f80b21c | |
| | 00e3ce3437 | |
| | 82b2944119 | |
| | 7078f341ae | |
| | cb1c8c4d65 | |
| | 091994a34d | |
| | 209cd0500f | |
| | 99656658f2 | |
| | cdc72e7998 | |
| | 8a792fe4ba | |
| | ab261a6bd2 | |
| | 14d3a650e5 | |
| | b48ad52845 | |
| | 10430135d1 | |
| | 4d55d601a1 | |
| | aabf74f2f3 | |
| | d3087662a7 | |
| | 8fa83dd248 | |
| | 56e7657253 | |
| | bb3c1b2676 | |
| | 1c61e1d1fe | |
| | f5770b6e60 | |
| | e39ef2c551 | |
| | d01f686514 | |
| | a800e8c8f1 | |
| | 4dc2d1abef | |
| | 5b0b5a6f68 | |
| | 8f5a084d32 | |
| | 8bf0fe4590 | |
| | 6f58e862cc | |
| | 8db01c389b | |
| | 9a71a7a892 | |
| | d870819080 | |
| | 0bd02f21d6 | |
| | 3ce8557cff | |
| | 336fc7655a | |
| | b530c67825 | |
| | 16d9eb2561 | |
| | c54575ae77 | |
| | 5b320a9470 | |
| | 648e406c54 | |
| | f5af770516 | |
| | 61043d81aa | |
| | 2bc20333a9 | |
| | e9ef2d529f | |
| | 4ce9888f5d | |
| | ff256984a3 | |
| | 96cfebd0ea | |
| | f6b6137937 | |
| | eb6ff228e8 | |
| | 450f5860e4 | |
| | 3630ec0460 | |
| | e5720087de | |
| | 15bb67a086 | |
| | d722390f89 | |
| | c8d2b8cb95 | |
| | 113159f820 | |
| | 67bf0c3cf0 | |
| | 8bf6bdc1fe | |
| | 85f2964c6c | |
| | c19e43c931 | |
| | 489ce2c955 | |
| | 868777eba5 | |
| | acb20a769b | |
| | 91500ba460 | |
| | c6f21f0b5f | |
| | 6b5ec52e28 | |
| | 3650637ce8 | |
| | b6df534a72 | |
| | fb15487773 | |
| | bfc75e0db8 | |
| | e6d98d2dbc | |
| | c26e95ce50 | |
| | bf2fce284f | |
| | 1dd57366c2 | |
| | 3a22c250ee | |
| | ae2adfe4d6 | |
| | 40ff019e2f | |
| | 0816a93efe | |
| | e4e4d8d57e | |
| | 7d96e76690 | |
| | 87a68494c1 | |
| | c0e441ee6b | |
| | 4086b462b7 | |

50

README.md

|

|

@ -12,6 +12,10 @@ to an existing sensor network using simple clients.

|

|||

|

||||

[D4 core client](https://github.com/D4-project/d4-core/tree/master/client) is a simple and minimal implementation of the [D4 encapsulation protocol](https://github.com/D4-project/architecture/tree/master/format). There is also a [portable D4 client](https://github.com/D4-project/d4-goclient) written in Go, which includes support for SSL/TLS connectivity.

|

||||

|

||||

<p align="center">

|

||||

<img alt="d4-cclient" src="https://raw.githubusercontent.com/D4-project/d4-core/master/client/media/d4c-client.png" height="140" />

|

||||

</p>

|

||||

|

||||

### Requirements

|

||||

|

||||

- Unix-like operating system

|

||||

|

|

@ -60,10 +64,31 @@ git submodule init

|

|||

git submodule update

|

||||

~~~~

|

||||

|

||||

Build the d4 client. This will create the `d4` binary.

|

||||

|

||||

~~~~

|

||||

make

|

||||

~~~~

|

||||

|

||||

Then register the sensor with the server. Replace `API_TOKEN`, `VALID_UUID4` (create a random UUID via [UUIDgenerator](https://www.uuidgenerator.net/)) and `VALID_HMAC_KEY`.

|

||||

|

||||

~~~~

|

||||

curl -k https://127.0.0.1:7000/api/v1/add/sensor/register --header "Authorization: API_TOKEN" -H "Content-Type: application/json" --data '{"uuid":"VALID_UUID4","hmac_key":"VALID_HMAC_KEY"}' -X POST

|

||||

~~~~

|

||||

|

||||

If the registration succeeds, the UUID is returned. Do not forget to approve the registration in the D4 server web interface.
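The registration call above can also be scripted. The following is a minimal sketch, assuming the endpoint, headers and JSON body shown in the `curl` command; the helper name `build_register_request` is hypothetical and not part of D4. It only builds the request, so it can be inspected before anything is sent.

```python
import json
import urllib.request


def build_register_request(base_url, api_token, sensor_uuid, hmac_key):
    """Build (but do not send) the POST request that registers a sensor.

    Hypothetical helper: it mirrors the curl command from the README.
    """
    payload = json.dumps({"uuid": sensor_uuid, "hmac_key": hmac_key}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/v1/add/sensor/register",
        data=payload,
        headers={"Authorization": api_token, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values, to be replaced exactly as in the curl example.
    request = build_register_request(
        "https://127.0.0.1:7000", "API_TOKEN", "VALID_UUID4", "VALID_HMAC_KEY"
    )
    print(request.get_method(), request.full_url)
```

To send it, pass the request to `urllib.request.urlopen`. Note that `curl -k` skips certificate verification; the Python equivalent would need an explicitly relaxed `ssl.SSLContext`, which is only reasonable for a local test server with a self-signed certificate.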

|

||||

|

||||

Update the configuration files:

|

||||

|

||||

~~~~

|

||||

cp -r conf.sample conf

|

||||

echo VALID_UUID4 > conf/uuid

|

||||

echo VALID_HMAC_KEY > conf/key

|

||||

~~~~
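The same configuration step can be sketched in Python. This is an illustration only: the helper name `write_sensor_config` is hypothetical, and it assumes that a random hex string is an acceptable HMAC key, which should be checked against the server's requirements. The UUID and key written here must match the values used when registering the sensor.

```python
import secrets
import uuid
from pathlib import Path


def write_sensor_config(conf_dir, sensor_uuid=None, hmac_key=None):
    """Write conf/uuid and conf/key, generating values when none are given.

    Hypothetical helper; the key format (64 hex characters) is an assumption.
    """
    conf = Path(conf_dir)
    conf.mkdir(parents=True, exist_ok=True)
    sensor_uuid = sensor_uuid or str(uuid.uuid4())   # random version 4 UUID
    hmac_key = hmac_key or secrets.token_hex(32)     # 32 random bytes as hex
    # A trailing newline matches what `echo VALUE > file` produces.
    (conf / "uuid").write_text(sensor_uuid + "\n")
    (conf / "key").write_text(hmac_key + "\n")
    return sensor_uuid, hmac_key
```

Generating the UUID locally with `uuid.uuid4()` avoids relying on an external website for a value that identifies the sensor.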

|

||||

|

||||

## D4 core server

|

||||

|

||||

D4 core server is a complete server to handle clients (sensors) including the decapsulation of the [D4 protocol](https://github.com/D4-project/architecture/tree/master/format), control of

|

||||

sensor registrations, management of decoding protocols and dispatching to adequate decoders/analysers.

|

||||

D4 core server is a complete server to handle clients (sensors) including the decapsulation of the [D4 protocol](https://github.com/D4-project/architecture/tree/master/format), control of sensor registrations, management of decoding protocols and dispatching to adequate decoders/analysers.

|

||||

|

||||

### Requirements

|

||||

|

||||

|

|

@ -72,13 +97,26 @@ sensor registrations, management of decoding protocols and dispatching to adequa

|

|||

|

||||

### Installation

|

||||

|

||||

|

||||

- [Install D4 Server](https://github.com/D4-project/d4-core/tree/master/server)

|

||||

|

||||

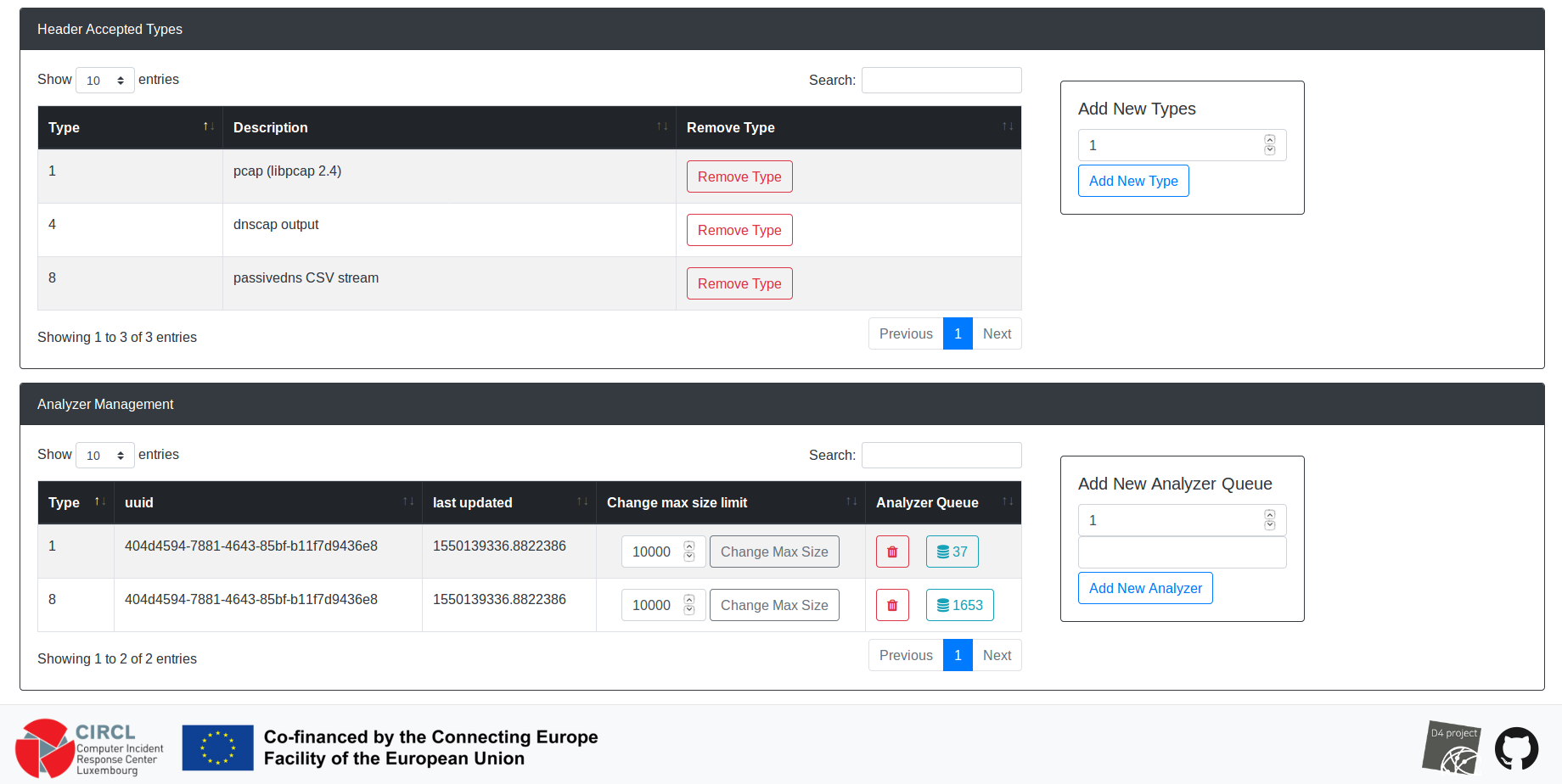

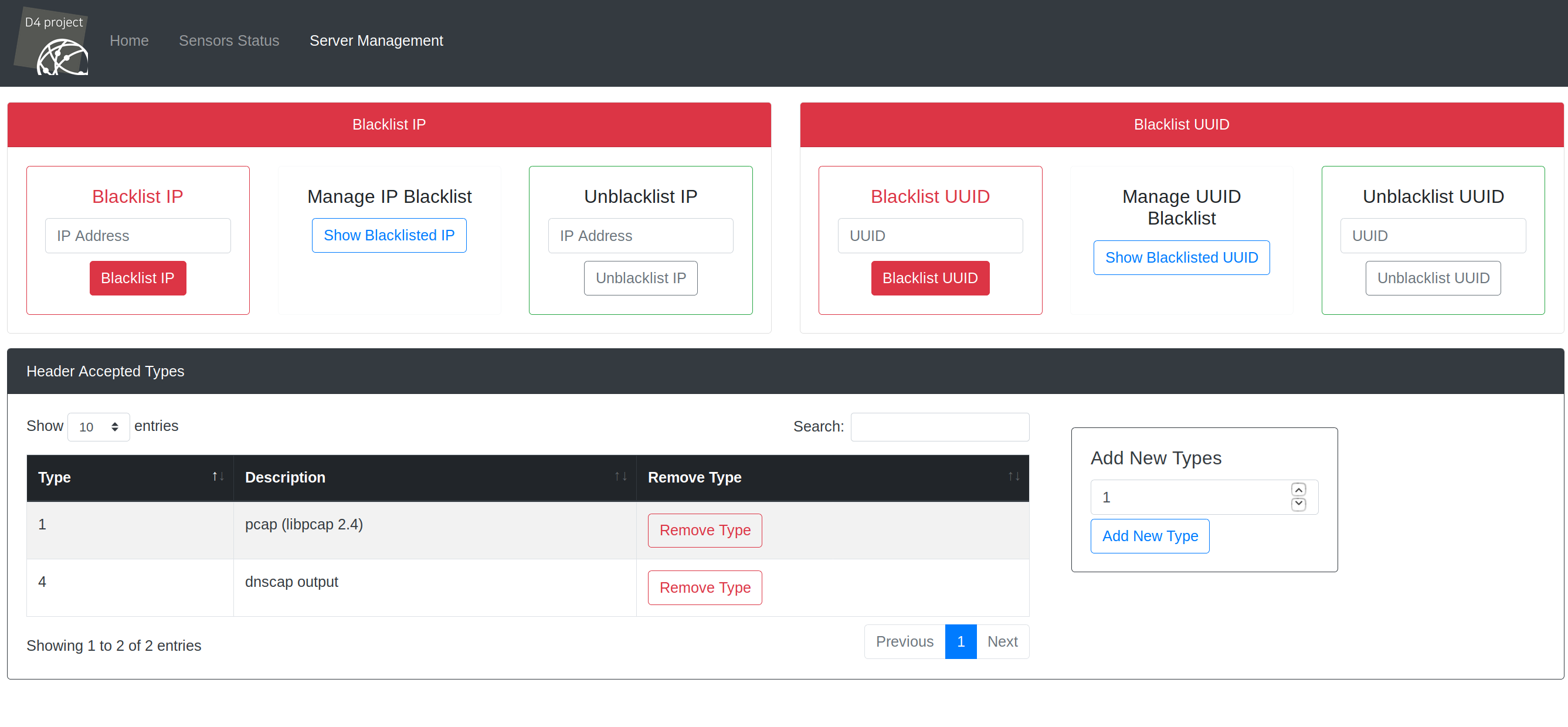

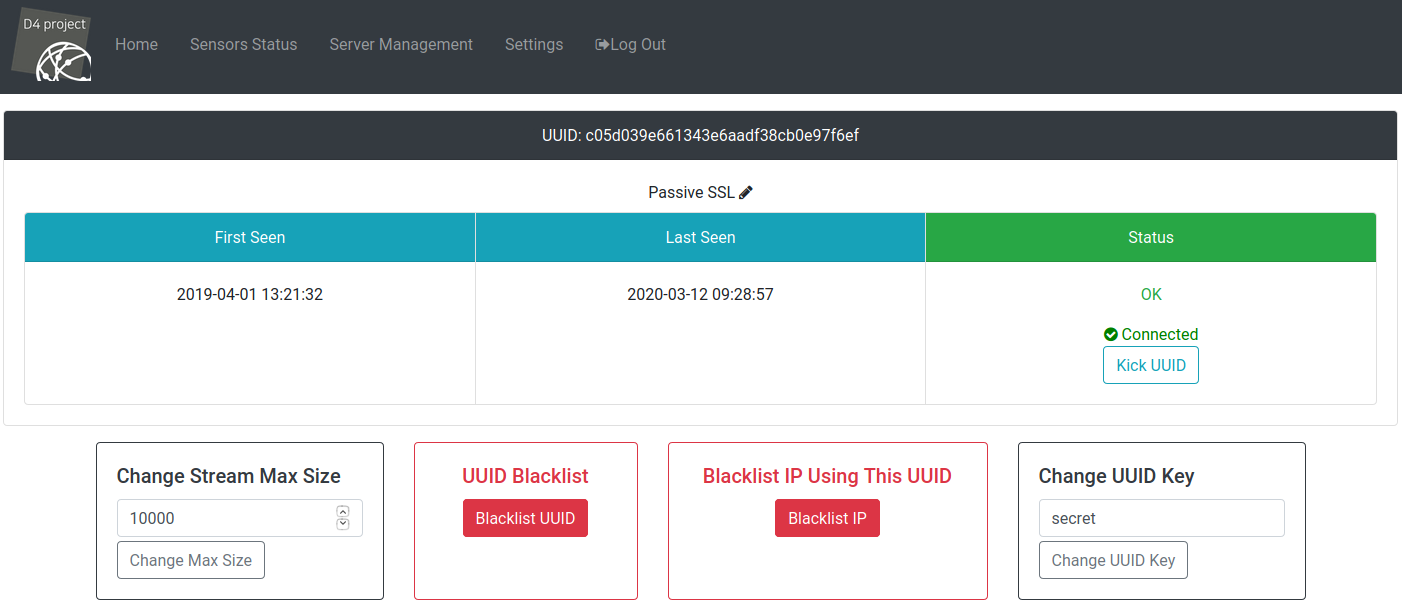

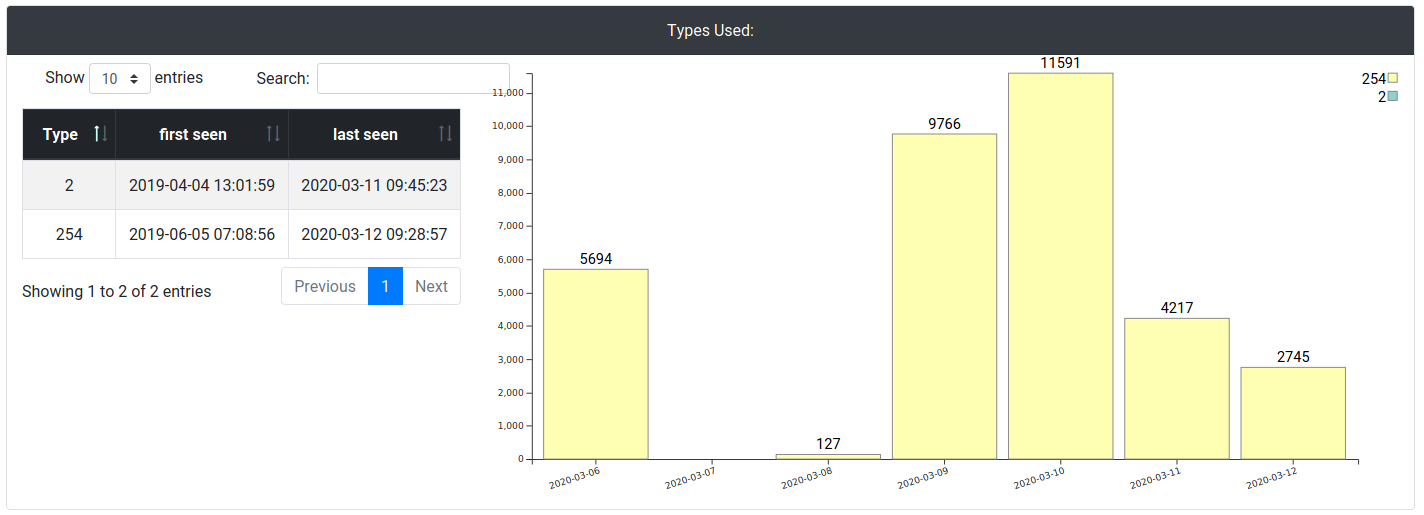

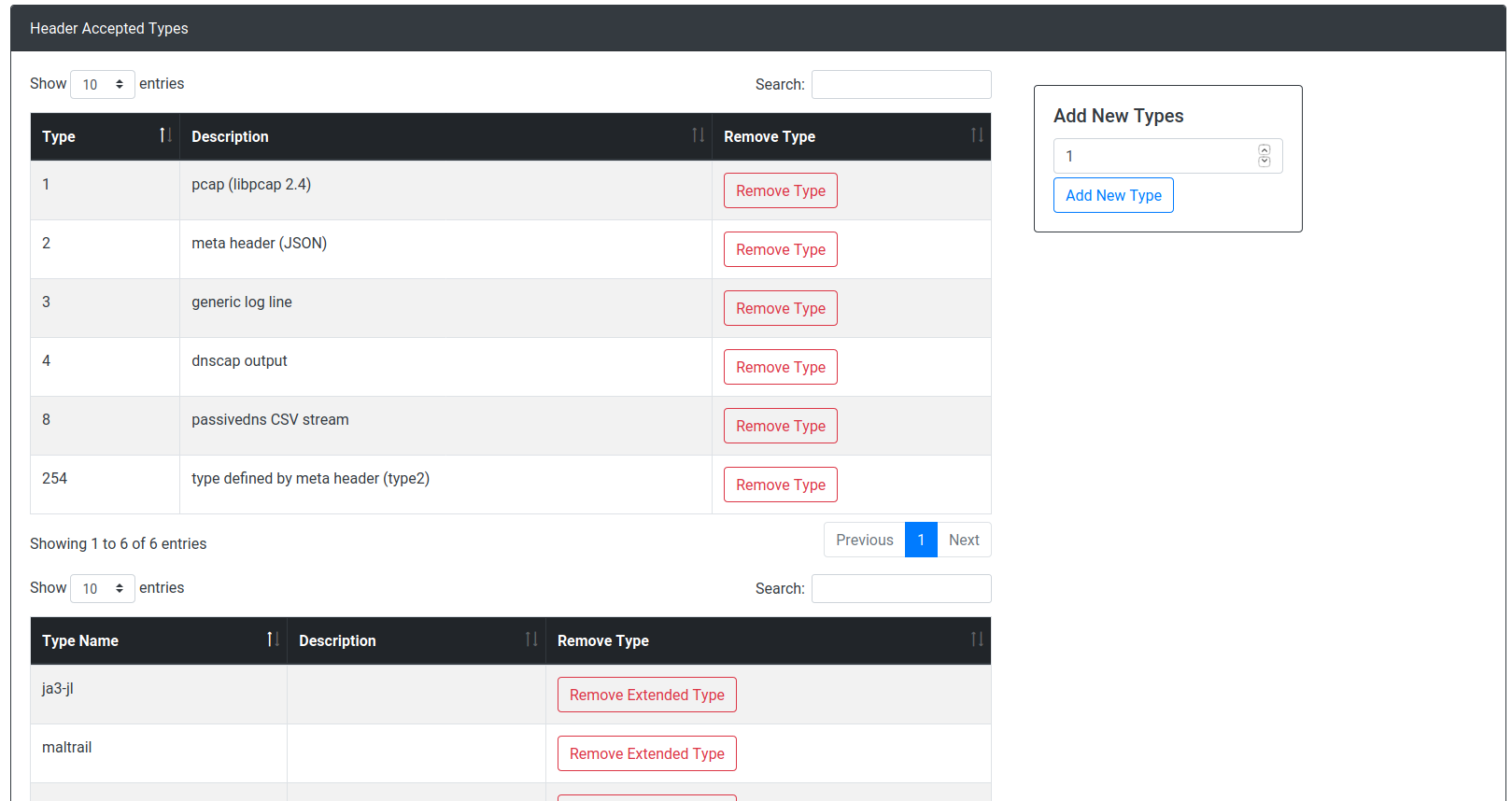

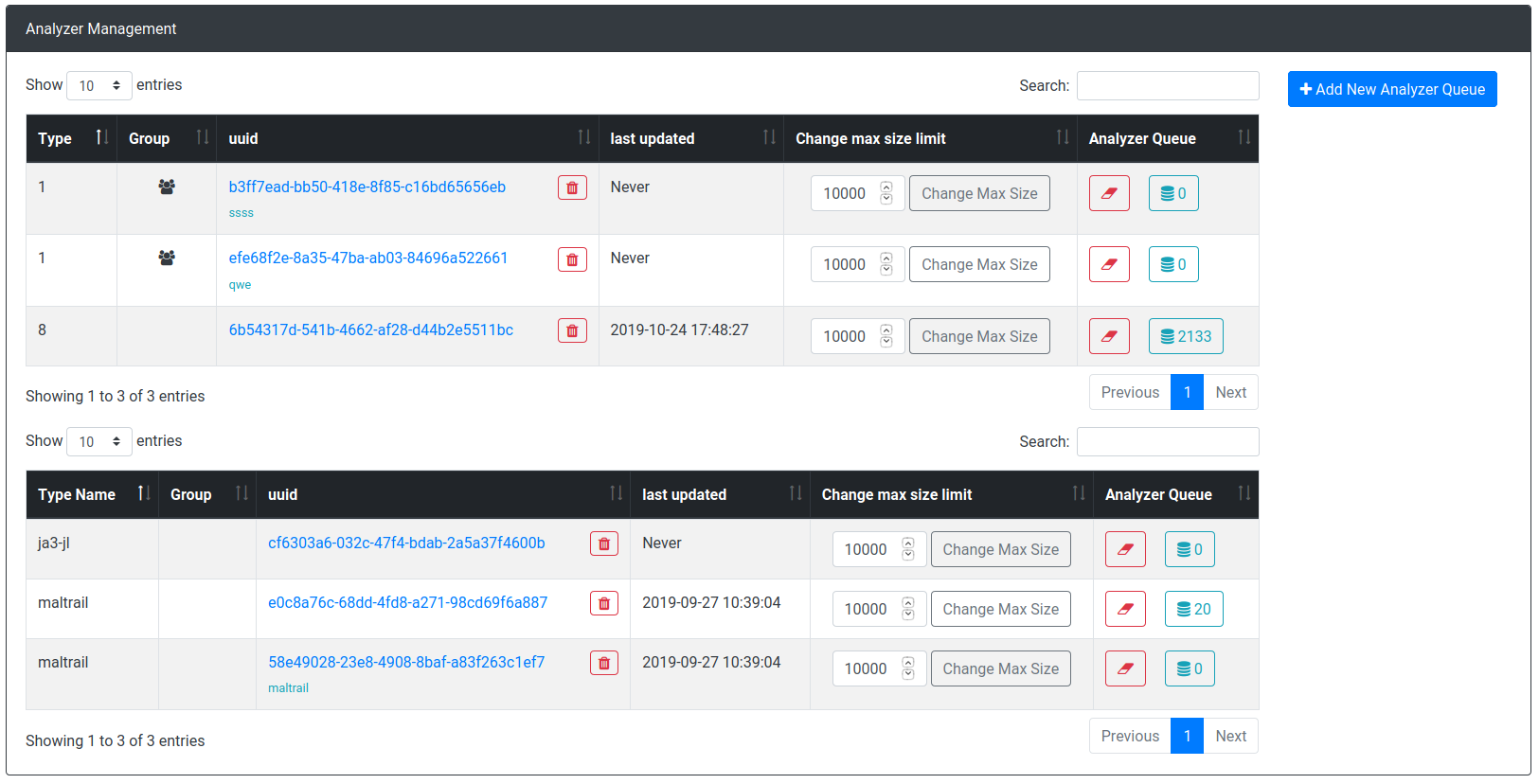

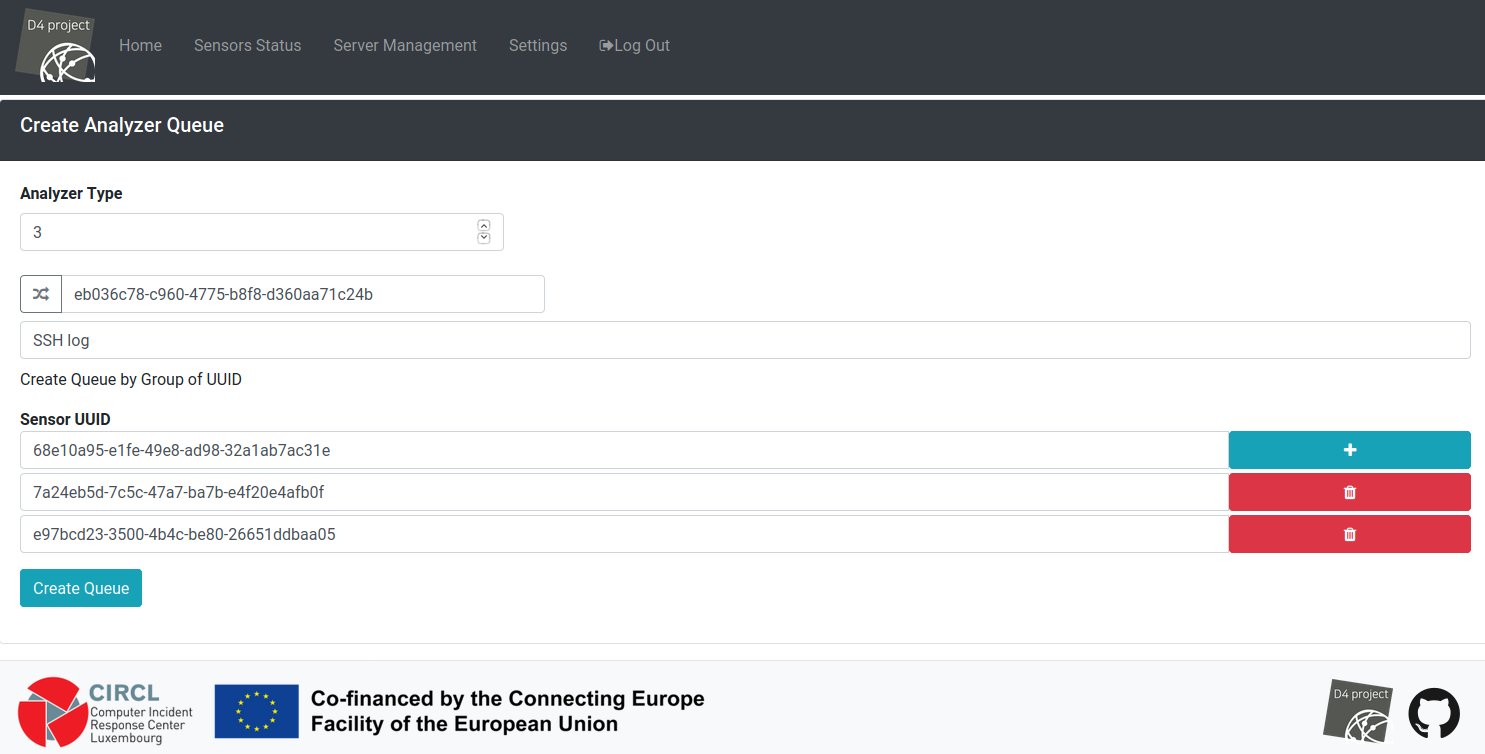

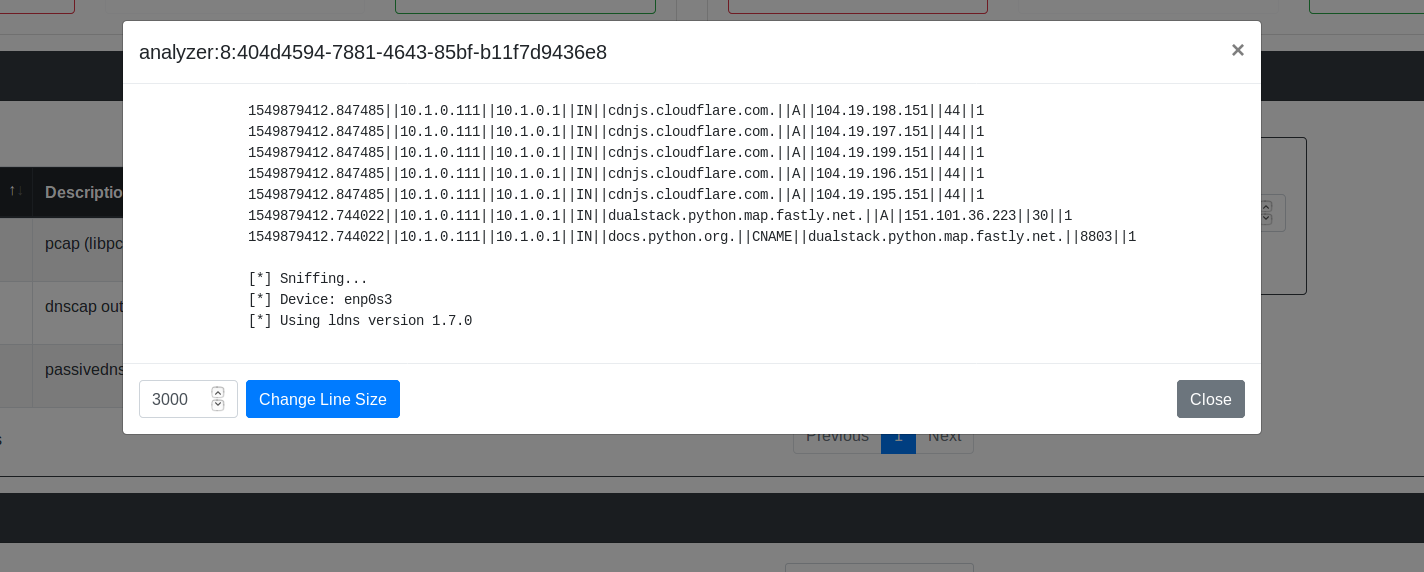

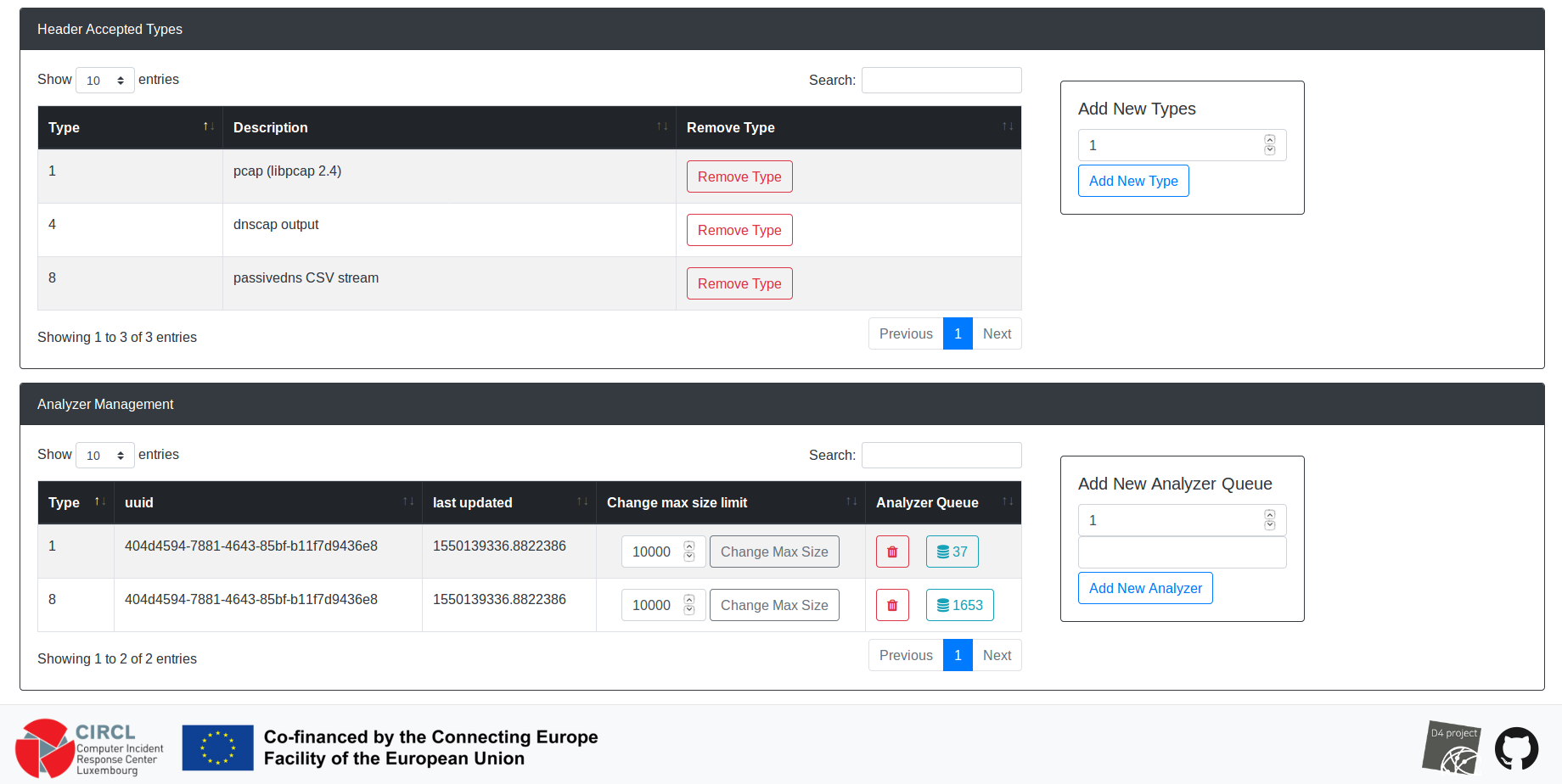

### Screenshots of D4 core server management

|

||||

### D4 core server Screenshots

|

||||

|

||||

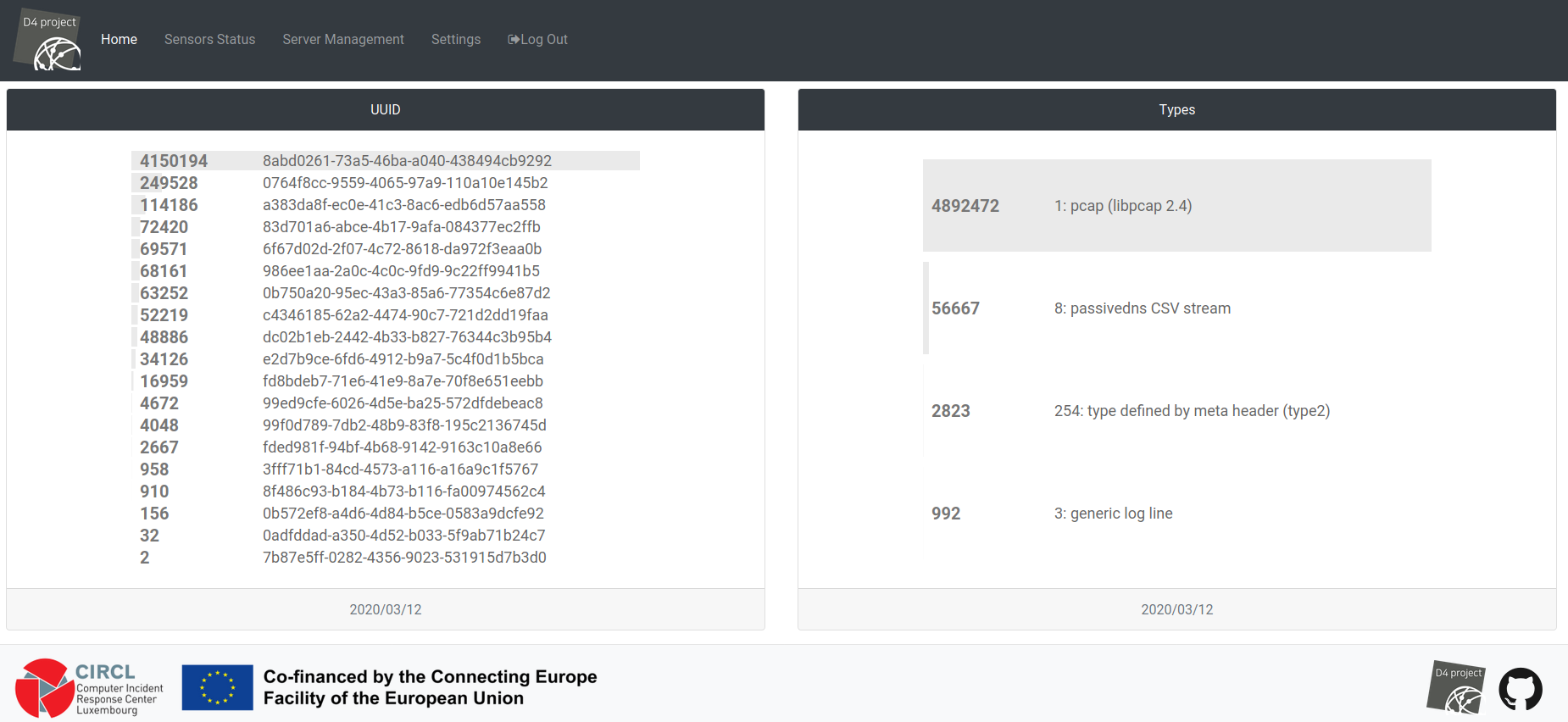

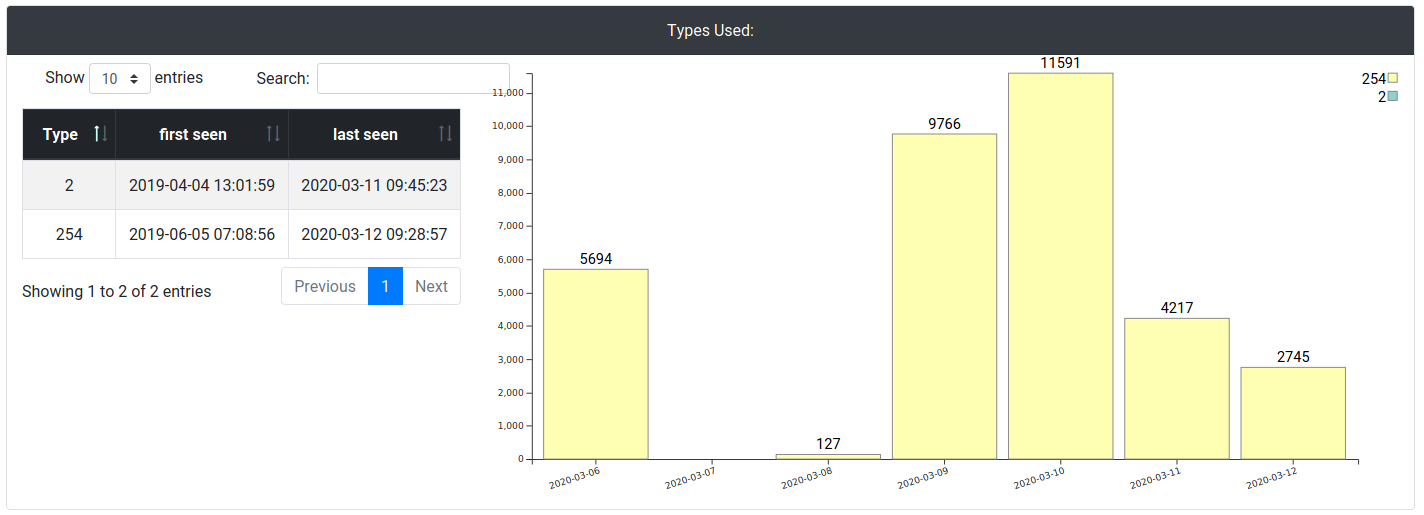

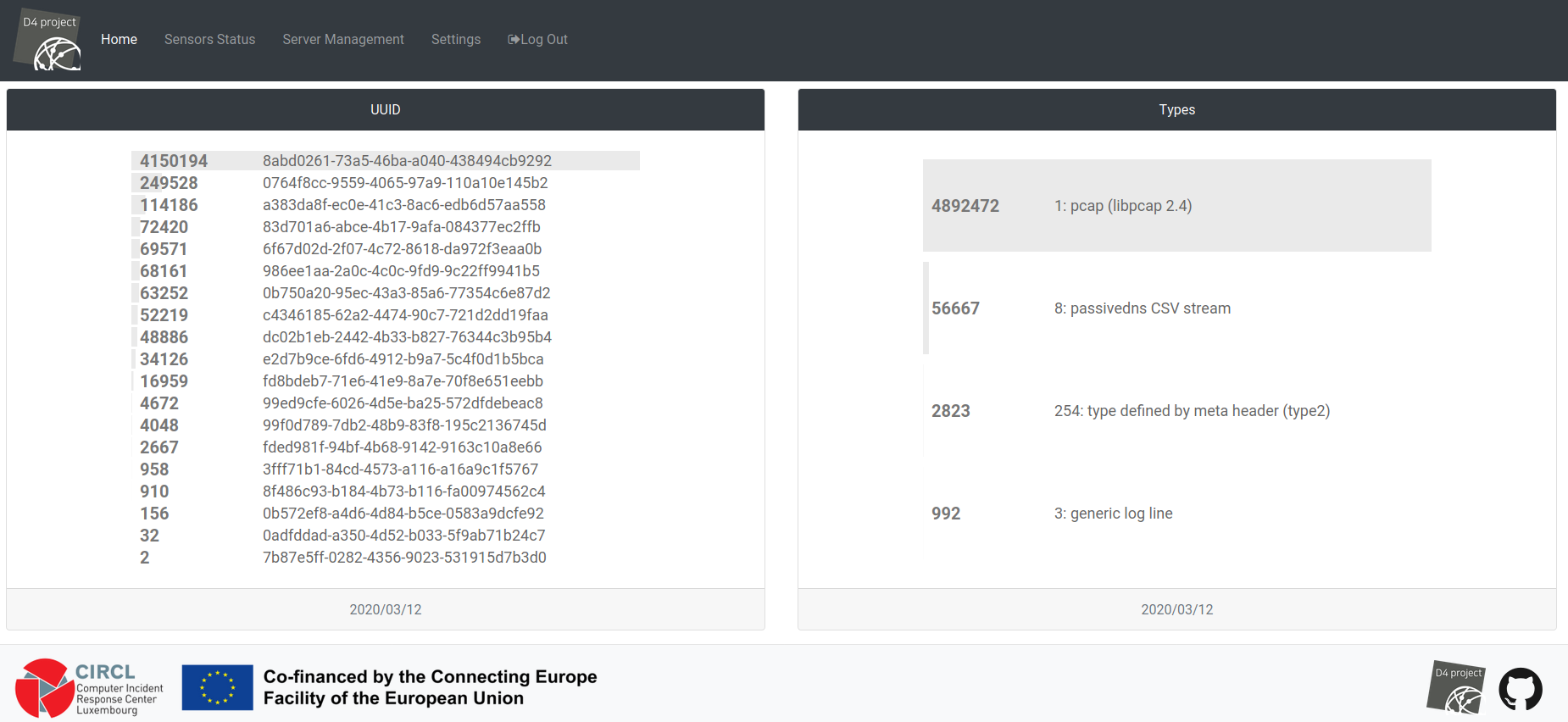

#### Dashboard:

|

||||

|

||||

|

||||

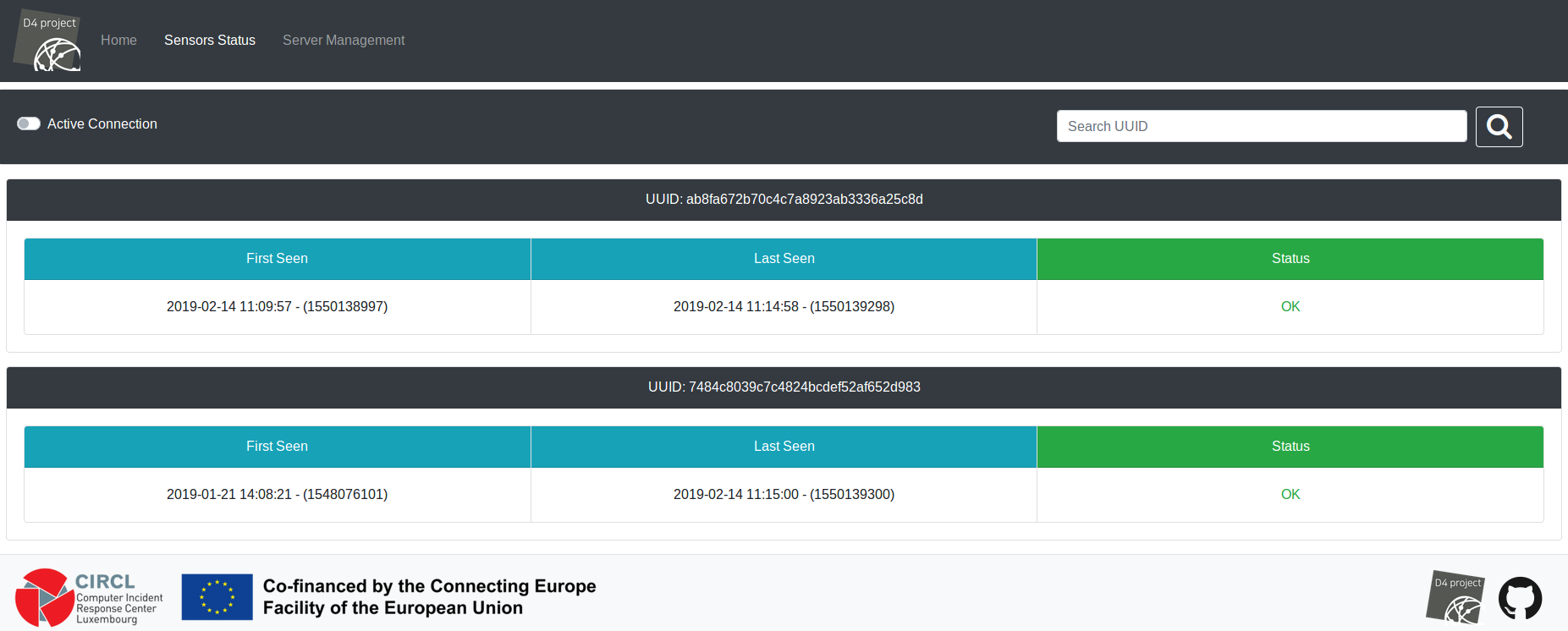

#### Connected Sensors:

|

||||

|

||||

|

||||

|

||||

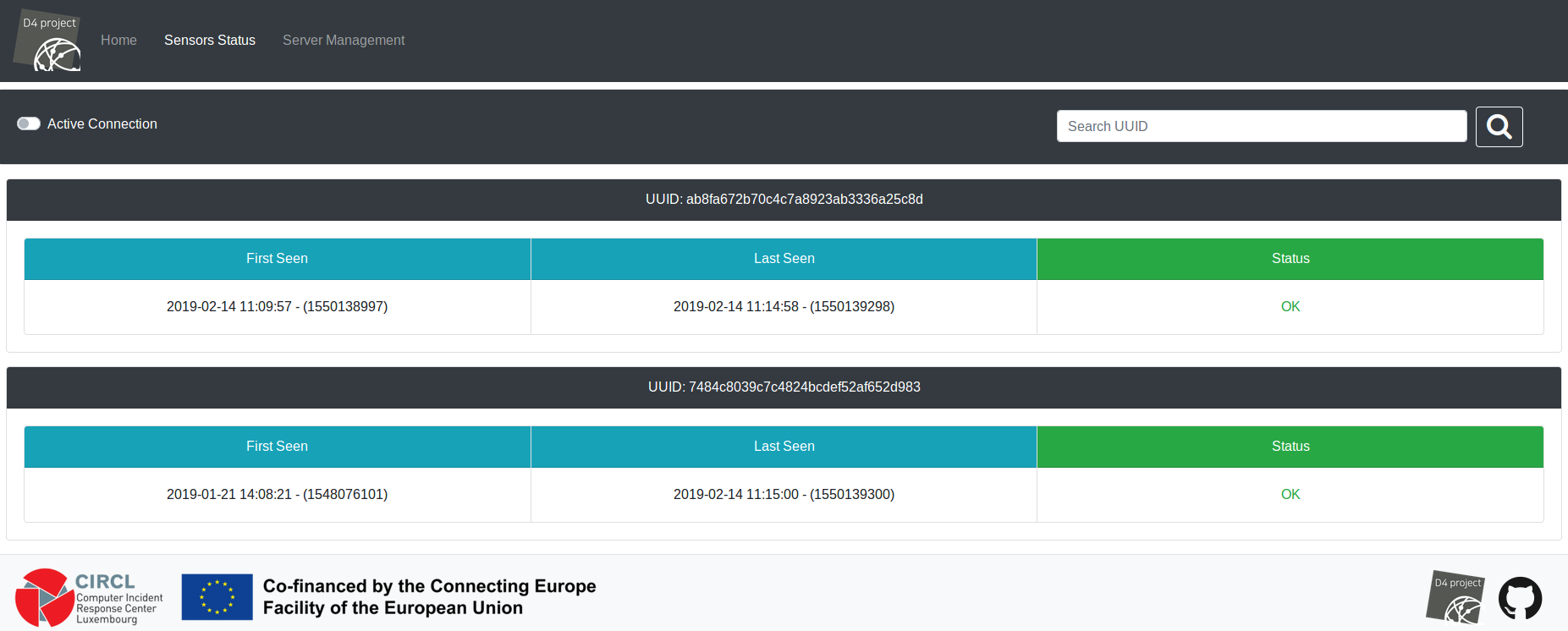

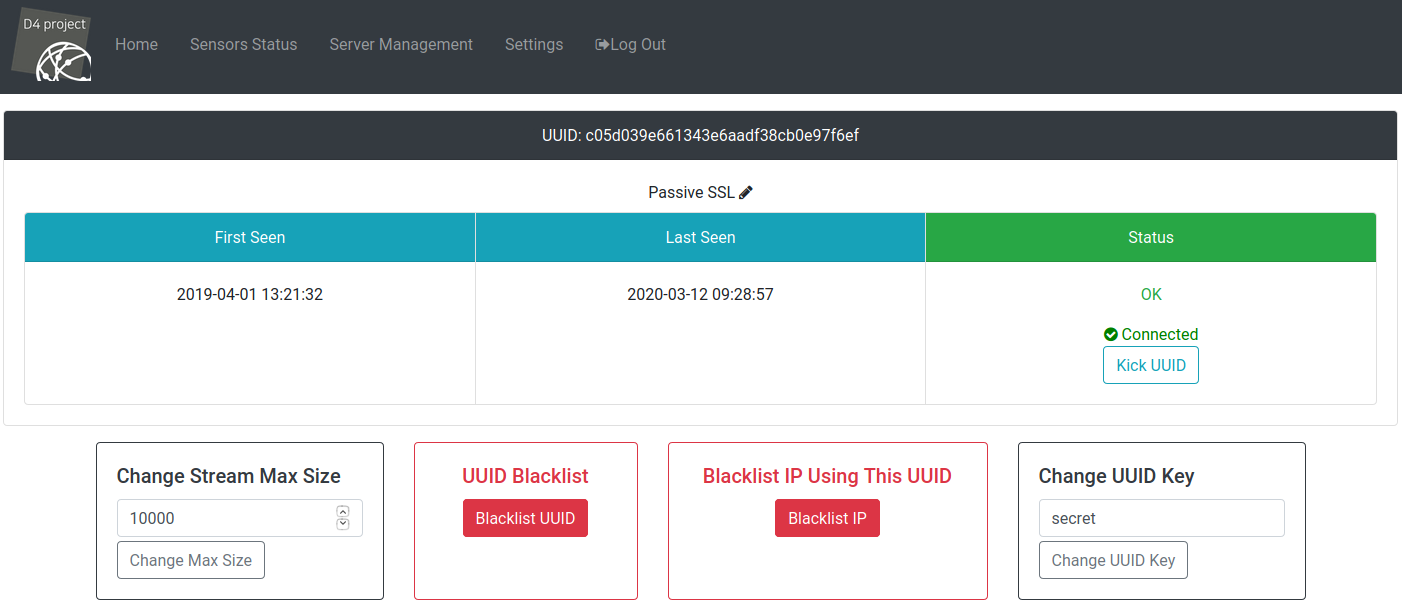

#### Sensors Status:

|

||||

|

||||

|

||||

|

||||

|

||||

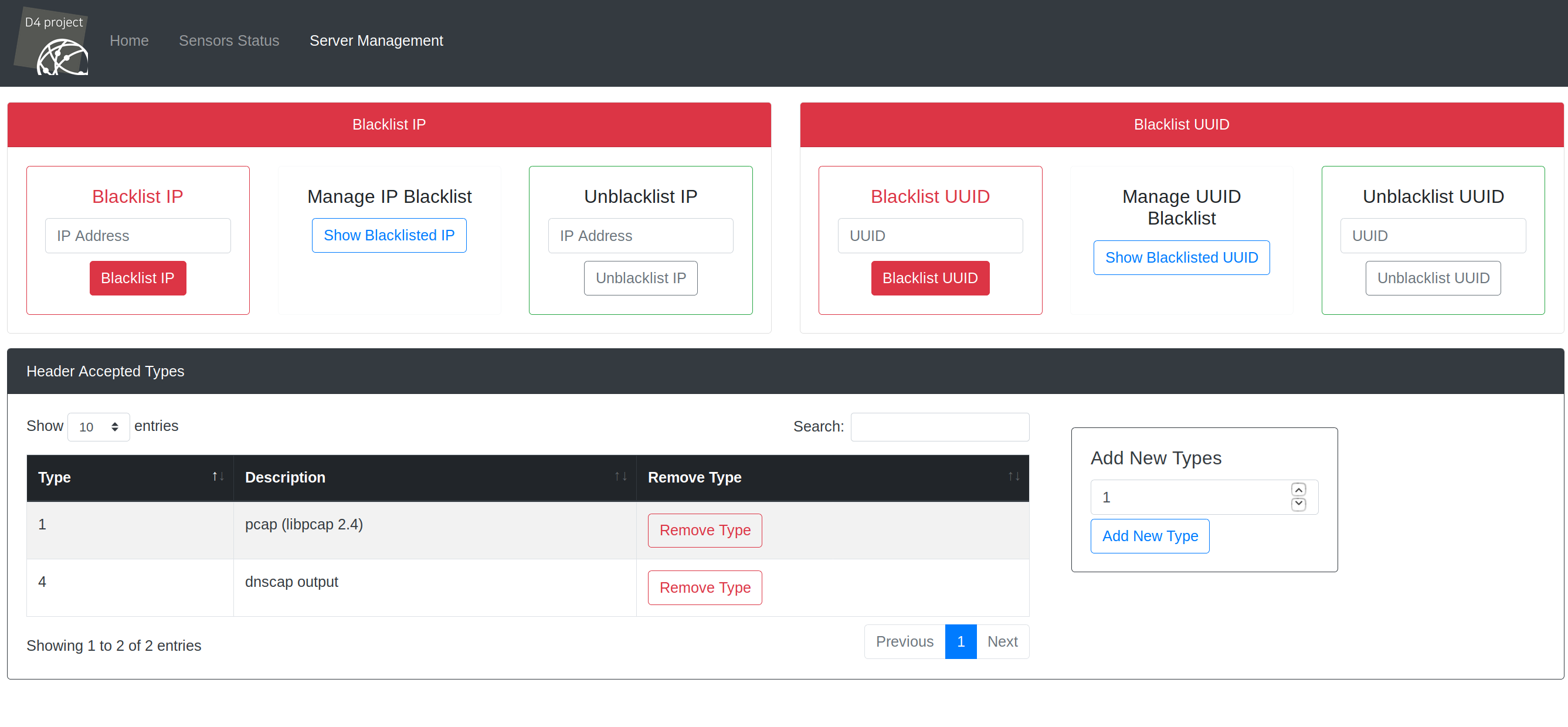

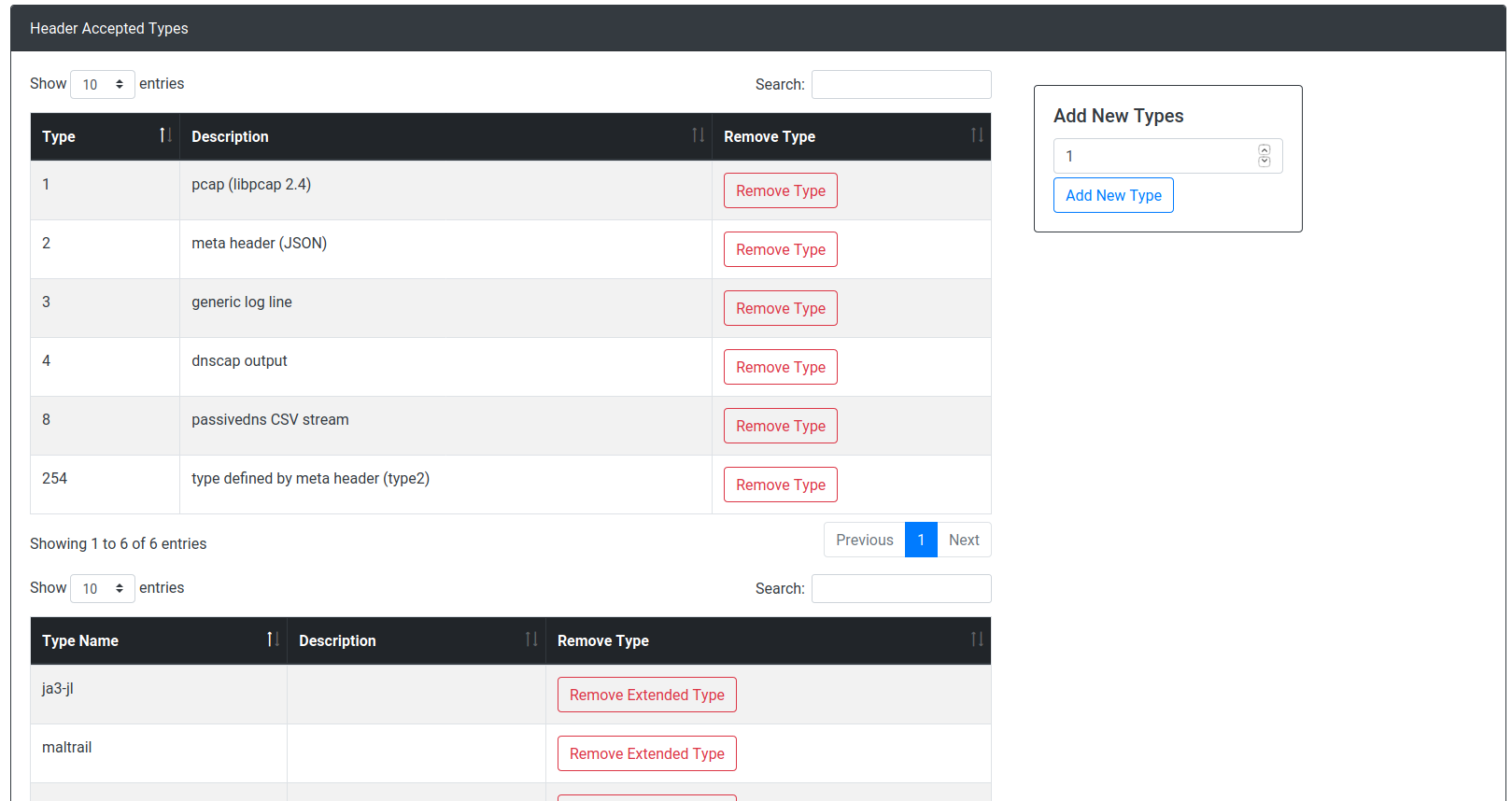

#### Server Management:

|

||||

|

||||

|

||||

|

||||

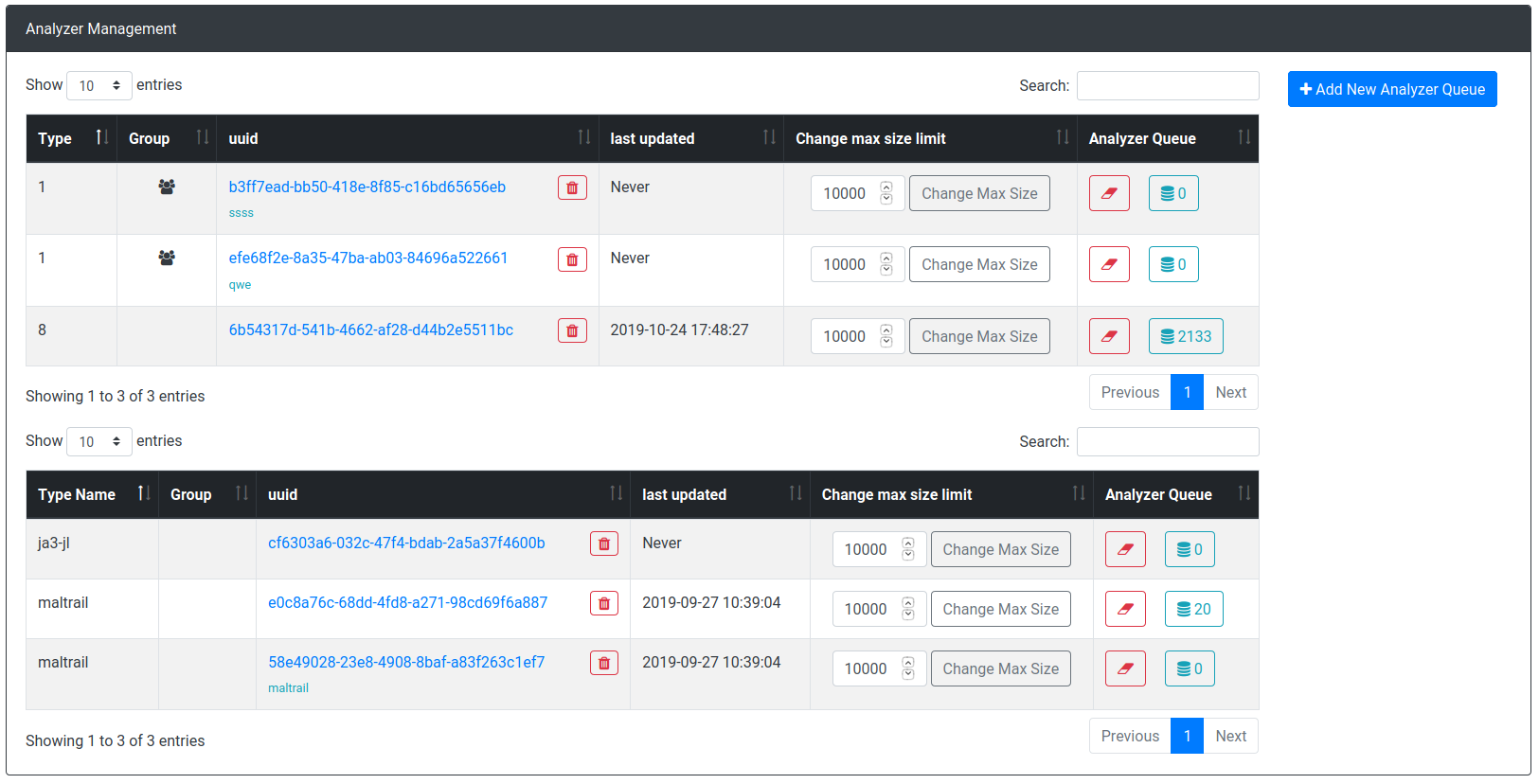

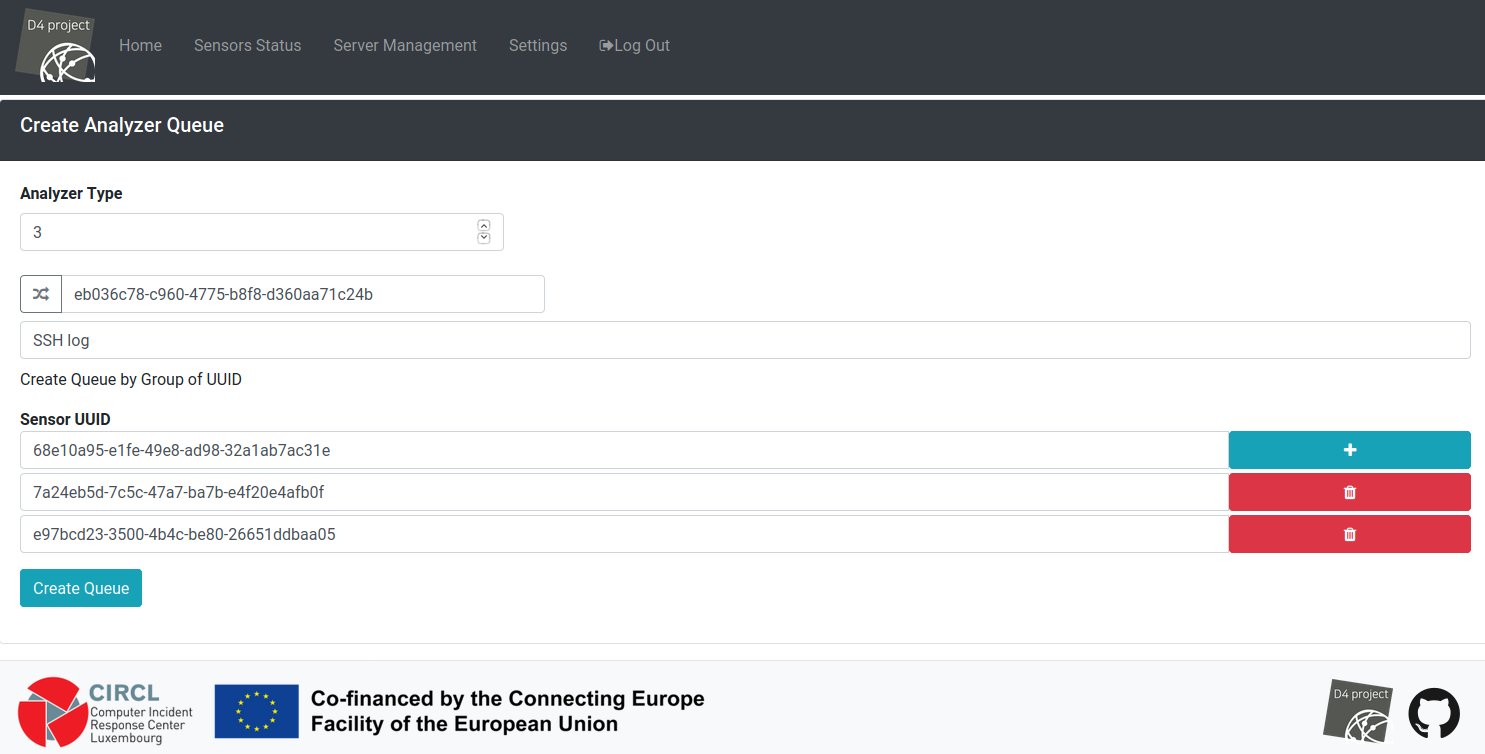

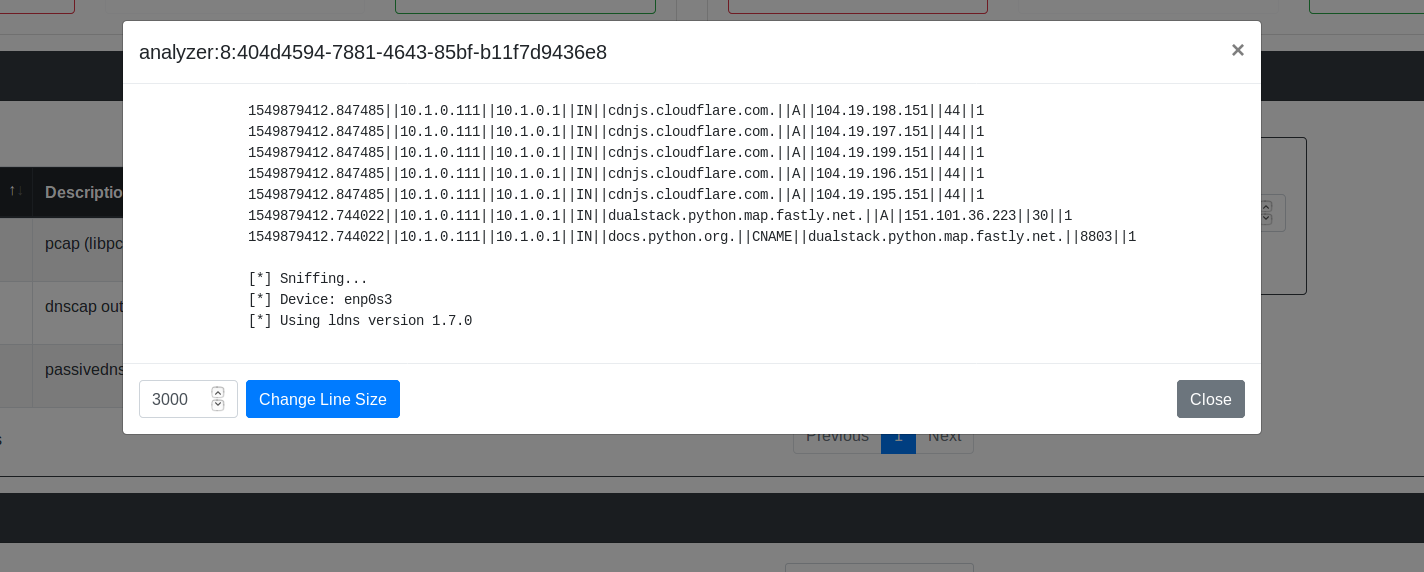

#### analyzer Queues:

|

||||

|

||||

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -32,7 +32,7 @@ clean:

|

|||

- rm -rf *.o hmac

|

||||

|

||||

d4: d4.o sha2.o hmac.o unpack.o unparse.o pack.o gen_uuid.o randutils.o parse.o

|

||||

gcc -Wall -o d4 d4.o hmac.o sha2.o unpack.o pack.o unparse.o gen_uuid.o randutils.o parse.o

|

||||

$(CC) -Wall -o d4 d4.o hmac.o sha2.o unpack.o pack.o unparse.o gen_uuid.o randutils.o parse.o

|

||||

|

||||

d4.o: d4.c

|

||||

gcc -Wall -c d4.c

|

||||

$(CC) -Wall -c d4.c

|

||||

|

|

|

|||

|

|

@ -210,7 +210,7 @@ void d4_transfert(d4_t* d4)

|

|||

//In case of errors see block of 0 bytes

|

||||

bzero(buf, d4->snaplen);

|

||||

nread = read(d4->source.fd, buf, d4->snaplen);

|

||||

if ( nread > 0 ) {

|

||||

if ( nread >= 0 ) {

|

||||

d4_update_header(d4, nread);

|

||||

//Do HMAC on header and payload. HMAC field is 0 during computation

|

||||

if (d4->ctx) {

|

||||

|

|

@ -238,6 +238,11 @@ void d4_transfert(d4_t* d4)

|

|||

fprintf(stderr,"Incomplete header written. Abort to let the consumer know that the packet is corrupted\n");

|

||||

abort();

|

||||

}

|

||||

// no data - create empty D4 packet

|

||||

if ( nread == 0 ) {

|

||||

//FIXME no data available, sleep, abort, retry

|

||||

break;

|

||||

}

|

||||

} else{

|

||||

//FIXME no data available, sleep, abort, retry

|

||||

break;

|

||||

|

|

|

|||

|


|

|

@ -0,0 +1,15 @@

|

|||

FROM python:3

|

||||

|

||||

WORKDIR /usr/src/

|

||||

RUN git clone https://github.com/D4-project/analyzer-d4-passivedns.git

|

||||

# RUN git clone https://github.com/trolldbois/analyzer-d4-passivedns.git

|

||||

WORKDIR /usr/src/analyzer-d4-passivedns

|

||||

|

||||

# FIXME typo in requirements.txt filename

|

||||

RUN pip install --no-cache-dir -r requirements

|

||||

WORKDIR /usr/src/analyzer-d4-passivedns/bin

|

||||

|

||||

# should be a config

|

||||

# RUN cat /usr/src/analyzer-d4-passivedns/etc/analyzer.conf.sample | sed "s/127.0.0.1/redis-metadata/g" > /usr/src/analyzer-d4-passivedns/etc/analyzer.conf

|

||||

# ignore the config and use ENV variables.

|

||||

RUN cp ../etc/analyzer.conf.sample ../etc/analyzer.conf

|

||||

|

|

@ -0,0 +1,37 @@

|

|||

FROM python:3

|

||||

|

||||

|

||||

# that doesn't work on windows docker due to linefeeds

|

||||

# WORKDIR /usr/src/d4-server

|

||||

# COPY . .

|

||||

|

||||

## alternate solution

|

||||

WORKDIR /usr/src/tmp

|

||||

# RUN git clone https://github.com/trolldbois/d4-core.git

|

||||

RUN git clone https://github.com/D4-project/d4-core.git

|

||||

RUN mv d4-core/server/ /usr/src/d4-server

|

||||

WORKDIR /usr/src/d4-server

|

||||

|

||||

ENV D4_HOME=/usr/src/d4-server

|

||||

RUN pip install --no-cache-dir -r requirement.txt

|

||||

|

||||

# move to tls proxy ?

|

||||

WORKDIR /usr/src/d4-server/gen_cert

|

||||

RUN ./gen_root.sh

|

||||

RUN ./gen_cert.sh

|

||||

|

||||

# set up a lot of files

|

||||

WORKDIR /usr/src/d4-server/web

|

||||

RUN ./update_web.sh

|

||||

|

||||

WORKDIR /usr/src/d4-server

|

||||

|

||||

# Should be using configs instead. but not supported until docker 17.06+

|

||||

RUN cp configs/server.conf.sample configs/server.conf

|

||||

|

||||

# workers need tcpdump

|

||||

RUN apt-get update && apt-get install -y tcpdump

|

||||

|

||||

ENTRYPOINT ["python", "server.py", "-v", "10"]

|

||||

|

||||

# CMD bash -l

|

||||

|

|

@ -11,10 +11,10 @@ CYAN="\\033[1;36m"

|

|||

|

||||

. ./D4ENV/bin/activate

|

||||

|

||||

isredis=`screen -ls | egrep '[0-9]+.Redis_D4' | cut -d. -f1`

|

||||

isd4server=`screen -ls | egrep '[0-9]+.Server_D4' | cut -d. -f1`

|

||||

isworker=`screen -ls | egrep '[0-9]+.Workers_D4' | cut -d. -f1`

|

||||

isflask=`screen -ls | egrep '[0-9]+.Flask_D4' | cut -d. -f1`

|

||||

isredis=`screen -ls | egrep '[0-9]+.Redis_D4 ' | cut -d. -f1`

|

||||

isd4server=`screen -ls | egrep '[0-9]+.Server_D4 ' | cut -d. -f1`

|

||||

isworker=`screen -ls | egrep '[0-9]+.Workers_D4 ' | cut -d. -f1`

|

||||

isflask=`screen -ls | egrep '[0-9]+.Flask_D4 ' | cut -d. -f1`

|

||||

|

||||

function helptext {

|

||||

echo -e $YELLOW"

|

||||

|

|

@ -45,6 +45,10 @@ function helptext {

|

|||

"

|

||||

}

|

||||

|

||||

CONFIG=$D4_HOME/configs/server.conf

|

||||

redis_stream=`sed -nr '/\[Redis_STREAM\]/,/\[/{/port/p}' ${CONFIG} | awk -F= '/port/{print $2}' | sed 's/ //g'`

|

||||

redis_metadata=`sed -nr '/\[Redis_METADATA\]/,/\[/{/port/p}' ${CONFIG} | awk -F= '/port/{print $2}' | sed 's/ //g'`

|

||||

|

||||

function launching_redis {

|

||||

conf_dir="${D4_HOME}/configs/"

|

||||

redis_dir="${D4_HOME}/redis/src/"

|

||||

|

|

@ -65,6 +69,8 @@ function launching_d4_server {

|

|||

|

||||

screen -S "Server_D4" -X screen -t "Server_D4" bash -c "cd ${D4_HOME}; ./server.py -v 10; read x"

|

||||

sleep 0.1

|

||||

screen -S "Server_D4" -X screen -t "sensors_manager" bash -c "cd ${D4_HOME}; ./sensors_manager.py; read x"

|

||||

sleep 0.1

|

||||

}

|

||||

|

||||

function launching_workers {

|

||||

|

|

@ -76,6 +82,8 @@ function launching_workers {

|

|||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "2_workers" bash -c "cd ${D4_HOME}/workers/workers_2; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "3_workers" bash -c "cd ${D4_HOME}/workers/workers_3; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "4_workers" bash -c "cd ${D4_HOME}/workers/workers_4; ./workers_manager.py; read x"

|

||||

sleep 0.1

|

||||

screen -S "Workers_D4" -X screen -t "8_workers" bash -c "cd ${D4_HOME}/workers/workers_8; ./workers_manager.py; read x"

|

||||

|

|

@ -84,22 +92,22 @@ function launching_workers {

|

|||

|

||||

function shutting_down_redis {

|

||||

redis_dir=${D4_HOME}/redis/src/

|

||||

bash -c $redis_dir'redis-cli -p 6379 SHUTDOWN'

|

||||

bash -c $redis_dir'redis-cli -p '$redis_stream' SHUTDOWN'

|

||||

sleep 0.1

|

||||

bash -c $redis_dir'redis-cli -p 6380 SHUTDOWN'

|

||||

bash -c $redis_dir'redis-cli -p '$redis_metadata' SHUTDOWN'

|

||||

sleep 0.1

|

||||

}

|

||||

|

||||

function checking_redis {

|

||||

flag_redis=0

|

||||

redis_dir=${D4_HOME}/redis/src/

|

||||

bash -c $redis_dir'redis-cli -p 6379 PING | grep "PONG" &> /dev/null'

|

||||

bash -c $redis_dir'redis-cli -p '$redis_stream' PING | grep "PONG" &> /dev/null'

|

||||

if [ ! $? == 0 ]; then

|

||||

echo -e $RED"\t$redis_stream not ready"$DEFAULT

|

||||

flag_redis=1

|

||||

fi

|

||||

sleep 0.1

|

||||

bash -c $redis_dir'redis-cli -p 6380 PING | grep "PONG" &> /dev/null'

|

||||

bash -c $redis_dir'redis-cli -p '$redis_metadata' PING | grep "PONG" &> /dev/null'

|

||||

if [ ! $? == 0 ]; then

|

||||

echo -e $RED"\t$redis_metadata not ready"$DEFAULT

|

||||

flag_redis=1

|

||||

|

|

@ -109,6 +117,18 @@ function checking_redis {

|

|||

return $flag_redis;

|

||||

}

|

||||

|

||||

function wait_until_redis_is_ready {

|

||||

redis_not_ready=true

|

||||

while $redis_not_ready; do

|

||||

if checking_redis; then

|

||||

redis_not_ready=false;

|

||||

else

|

||||

sleep 1

|

||||

fi

|

||||

done

|

||||

echo -e $YELLOW"\t* Redis Launched"$DEFAULT

|

||||

}

|

||||

|

||||

function launch_redis {

|

||||

if [[ ! $isredis ]]; then

|

||||

launching_redis;
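Review note: `wait_until_redis_is_ready` polls `checking_redis` once per second and never gives up. A minimal Python sketch of the same polling pattern follows; the function name `wait_until_ready` and the optional `max_attempts` limit are assumptions added for illustration and are not present in the shell script.

```python
import time


def wait_until_ready(check, interval=1.0, max_attempts=None, sleep=time.sleep):
    """Call check() until it returns True and return the number of attempts.

    max_attempts is an addition for this sketch; the shell version loops
    forever. With max_attempts=None this function does the same.
    """
    attempts = 0
    while True:
        attempts += 1
        if check():
            return attempts
        if max_attempts is not None and attempts >= max_attempts:
            raise TimeoutError("service not ready after {} attempts".format(attempts))
        sleep(interval)
```

In practice `check` would wrap a Redis `PING` on each configured port. Bounding the number of attempts keeps a start-up script from hanging silently when Redis fails to launch.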

|

||||

|

|

@ -280,6 +300,9 @@ while [ "$1" != "" ]; do

|

|||

-k | --killAll ) helptext;

|

||||

killall;

|

||||

;;

|

||||

-lrv | --launchRedisVerify ) launch_redis;

|

||||

wait_until_redis_is_ready;

|

||||

;;

|

||||

-h | --help ) helptext;

|

||||

exit

|

||||

;;

|

||||

|

|

|

|||

|

|

@ -15,11 +15,24 @@ sensor registrations, management of decoding protocols and dispatching to adequa

|

|||

### Installation

|

||||

|

||||

###### Install D4 server

|

||||

|

||||

Clone the repository and install necessary packages. Installation requires *sudo* permissions.

|

||||

|

||||

~~~~

|

||||

git clone https://github.com/D4-project/d4-core.git

|

||||

cd d4-core

|

||||

cd server

|

||||

./install_server.sh

|

||||

~~~~

|

||||

Create or add a pem in [d4-core/server](https://github.com/D4-project/d4-core/tree/master/server) :

|

||||

|

||||

When the installation is finished, scroll back to where `+ ./create_default_user.py` is displayed. The next lines contain the default generated user and should resemble the snippet below. Take a temporary note of the password; you are required to **change the password** on first login.

|

||||

~~~~

|

||||

new user created: admin@admin.test

|

||||

password: <redacted>

|

||||

token: <redacted>

|

||||

~~~~

|

||||

|

||||

Then create or add a pem in [d4-core/server](https://github.com/D4-project/d4-core/tree/master/server) :

|

||||

~~~~

|

||||

cd gen_cert

|

||||

./gen_root.sh

|

||||

|

|

@ -27,7 +40,6 @@ cd gen_cert

|

|||

cd ..

|

||||

~~~~

|

||||

|

||||

|

||||

###### Launch D4 server

|

||||

~~~~

|

||||

./LAUNCH.sh -l

|

||||

|

|

@ -35,6 +47,14 @@ cd ..

|

|||

|

||||

The web interface is accessible via `http://127.0.0.1:7000/`

|

||||

|

||||

If you cannot access the web interface on localhost (for example because the system is running on a remote host), stop the server, change the listening host IP and restart the server. In the example below, it is changed to `0.0.0.0` (all interfaces). Make sure that the IP is not unintentionally publicly exposed.

|

||||

|

||||

~~~~

|

||||

./LAUNCH.sh -k

|

||||

sed -i '/\[Flask_Server\]/{:a;N;/host = 127\.0\.0\.1/!ba;s/host = 127\.0\.0\.1/host = 0.0.0.0/}' configs/server.conf

|

||||

./LAUNCH.sh -l

|

||||

~~~~
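The `sed` expression above edits only the `host` entry inside the `[Flask_Server]` section. The same change can be sketched with Python's `configparser`, assuming `configs/server.conf` is a plain INI file as the section header suggests; the helper name `set_flask_host` is hypothetical. Unlike `sed`, `configparser` rewrites the whole file and drops comments, so keep a backup if the file is annotated.

```python
import configparser


def set_flask_host(config_path, host="0.0.0.0", section="Flask_Server"):
    """Set the listening host in the given section and return the old value.

    Hypothetical helper; assumes an INI-style server.conf.
    """
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep option names exactly as written
    with open(config_path) as handle:
        parser.read_file(handle)
    previous = parser.get(section, "host")  # raises if section or key is missing
    parser.set(section, "host", host)
    with open(config_path, "w") as handle:
        parser.write(handle)
    return previous
```

As with the shell version, stop the server before the change and restart it afterwards so the new host takes effect.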

|

||||

|

||||

### Updating web assets

|

||||

To update javascript libs run:

|

||||

~~~~

|

||||

|

|

@ -42,18 +62,36 @@ cd web

|

|||

./update_web.sh

|

||||

~~~~

|

||||

|

||||

### API

|

||||

|

||||

[API Documentation](https://github.com/D4-project/d4-core/tree/master/server/documentation/README.md)

|

||||

|

||||

### Notes

|

||||

|

||||

- All server logs are located in ``d4-core/server/logs/``

|

||||

- Close D4 Server: ``./LAUNCH.sh -k``

|

||||

|

||||

### Screenshots of D4 core server management

|

||||

### D4 core server

|

||||

|

||||

#### Dashboard:

|

||||

|

||||

|

||||

#### Connected Sensors:

|

||||

|

||||

|

||||

|

||||

#### Sensors Status:

|

||||

|

||||

|

||||

|

||||

|

||||

#### Server Management:

|

||||

|

||||

|

||||

|

||||

#### analyzer Queues:

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Troubleshooting

|

||||

|

||||

|

|

@ -66,3 +104,7 @@ Run the following command as root:

|

|||

~~~~

|

||||

aa-complain /usr/sbin/tcpdump

|

||||

~~~~

|

||||

|

||||

###### WARNING - Not registered UUID=UUID4, connection closed

|

||||

|

||||

This happens after you have registered a new sensor but have not yet approved the registration. To approve the sensor, open **Server Management** in the web interface and click **Pending Sensors**.

|

||||

|

|

@ -0,0 +1,75 @@

|

|||

#!/usr/bin/env python3

|

||||

|

||||

import os

|

||||

import sys

|

||||

import time

|

||||

import redis

|

||||

import socket

|

||||

import argparse

|

||||

|

||||

import logging

|

||||

import logging.handlers

|

||||

|

||||

log_level = {'DEBUG': 10, 'INFO': 20, 'WARNING': 30, 'ERROR': 40, 'CRITICAL': 50}

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser(description='Export d4 data to a syslog server')

|

||||

parser.add_argument('-t', '--type', help='d4 type or extended type' , type=str, dest='type', required=True)

|

||||

parser.add_argument('-u', '--uuid', help='queue uuid' , type=str, dest='uuid', required=True)

|

||||

parser.add_argument('-i', '--ip',help='server ip' , type=str, default='127.0.0.1', dest='target_ip')

|

||||

parser.add_argument('-p', '--port',help='server port' ,type=int, default=514, dest='target_port')

|

||||

parser.add_argument('-l', '--log_level', help='log level: DEBUG, INFO, WARNING, ERROR, CRITICAL', type=str, default='INFO', dest='req_level')

|

||||

parser.add_argument('-n', '--newline', help='add new lines', action="store_true")

|

||||

parser.add_argument('-ri', '--redis_ip',help='redis host' , type=str, default='127.0.0.1', dest='host_redis')

|

||||

parser.add_argument('-rp', '--redis_port',help='redis port' , type=int, default=6380, dest='port_redis')

|

||||

args = parser.parse_args()

|

||||

|

||||

if not args.uuid or not args.type or not args.target_port:

|

||||

parser.print_help()

|

||||

sys.exit(0)

|

||||

|

||||

host_redis=args.host_redis

|

||||

port_redis=args.port_redis

|

||||

newLines = args.newline

|

||||

req_level = args.req_level

|

||||

|

||||

if req_level not in log_level:

|

||||

print('ERROR: incorrect log level')

|

||||

sys.exit(1)

|

||||

|

||||

redis_d4= redis.StrictRedis(

|

||||

host=host_redis,

|

||||

port=port_redis,

|

||||

db=2)

|

||||

try:

|

||||

redis_d4.ping()

|

||||

except redis.exceptions.ConnectionError:

|

||||

print('Error: Redis server {}:{}, ConnectionError'.format(host_redis, port_redis))

|

||||

sys.exit(1)

|

||||

|

||||

d4_uuid = args.uuid

|

||||

d4_type = args.type

|

||||

data_queue = 'analyzer:{}:{}'.format(d4_type, d4_uuid)

|

||||

|

||||

target_ip = args.target_ip

|

||||

target_port = args.target_port

|

||||

addr = (target_ip, target_port)

|

||||

|

||||

syslog_logger = logging.getLogger('D4-SYSLOGOUT')

|

||||

syslog_logger.setLevel(logging.DEBUG)

|

||||

client_socket = logging.handlers.SysLogHandler(address = addr)

|

||||

syslog_logger.addHandler(client_socket)

|

||||

|

||||

while True:

|

||||

|

||||

d4_data = redis_d4.rpop(data_queue)

|

||||

if d4_data is None:

|

||||

time.sleep(1)

|

||||

continue

|

||||

|

||||

if newLines:

|

||||

d4_data = d4_data + b'\n'

|

||||

|

||||

syslog_logger.log(log_level[req_level], d4_data.decode())

|

||||

|

||||

client_socket.close()
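Review note: the exporter loop above runs `while True`, so the final `client_socket.close()` is never reached. A minimal sketch of the same pop-and-forward loop with an explicit stop condition follows. The names `drain_queue`, `ListQueue` and `max_idle_polls` are hypothetical and introduced only for this illustration; `ListQueue` is an in-memory stand-in for the Redis client so the sketch runs without a server.

```python
import time


def drain_queue(queue, key, send, add_newline=False,
                idle_sleep=1.0, max_idle_polls=None, sleep=time.sleep):
    """Pop items from queue[key] and pass them to send(); return the count.

    Stops after max_idle_polls consecutive empty polls (None = run forever,
    which matches the exporter scripts).
    """
    sent = 0
    idle = 0
    while max_idle_polls is None or idle < max_idle_polls:
        item = queue.rpop(key)
        if item is None:
            idle += 1
            sleep(idle_sleep)
            continue
        idle = 0
        if add_newline:
            item += b"\n"
        send(item)
        sent += 1
    return sent


class ListQueue:
    """In-memory stand-in exposing only rpop(), for the demo."""

    def __init__(self, items_by_key):
        self._items = {key: list(values) for key, values in items_by_key.items()}

    def rpop(self, key):
        values = self._items.get(key)
        return values.pop() if values else None
```

With a real client, `queue` would be the `redis.StrictRedis` instance and `send` the socket or logger call. Wrapping the call in `try`/`finally` would then let the socket be closed on exit or interruption.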

|

||||

|

|

@ -0,0 +1,86 @@

|

|||

#!/usr/bin/env python3

|

||||

|

||||

import os

|

||||

import sys

|

||||

|

||||

import redis

|

||||

import time

|

||||

import datetime

|

||||

|

||||

import argparse

|

||||

import logging

|

||||

import logging.handlers

|

||||

|

||||

|

||||

import socket

|

||||

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser(description='Export d4 data to a TCP server')

|

||||

parser.add_argument('-t', '--type', help='d4 type or extended type' , type=str, dest='type', required=True)

|

||||

parser.add_argument('-u', '--uuid', help='queue uuid' , type=str, dest='uuid', required=True)

|

||||

parser.add_argument('-i', '--ip',help='server ip' , type=str, default='127.0.0.1', dest='target_ip')

|

||||

parser.add_argument('-p', '--port',help='server port' , type=int, dest='target_port', required=True)

|

||||

parser.add_argument('-k', '--Keepalive', help='Keepalive in seconds', type=int, default=15, dest='ka_sec')

|

||||

parser.add_argument('-n', '--newline', help='add new lines', action="store_true")

|

||||

parser.add_argument('-ri', '--redis_ip',help='redis ip' , type=str, default='127.0.0.1', dest='host_redis')

|

||||

parser.add_argument('-rp', '--redis_port',help='redis port' , type=int, default=6380, dest='port_redis')

|

||||

args = parser.parse_args()

|

||||

|

||||

if not args.uuid or not args.type or not args.target_port:

|

||||

parser.print_help()

|

||||

sys.exit(0)

|

||||

|

||||

host_redis=args.host_redis

|

||||

port_redis=args.port_redis

|

||||

newLines = args.newline

|

||||

|

||||

redis_d4= redis.StrictRedis(

|

||||

host=host_redis,

|

||||

port=port_redis,

|

||||

db=2)

|

||||

try:

|

||||

redis_d4.ping()

|

||||

except redis.exceptions.ConnectionError:

|

||||

print('Error: Redis server {}:{}, ConnectionError'.format(host_redis, port_redis))

|

||||

sys.exit(1)

|

||||

|

||||

d4_uuid = args.uuid

|

||||

d4_type = args.type

|

||||

data_queue = 'analyzer:{}:{}'.format(d4_type, d4_uuid)

|

||||

|

||||

target_ip = args.target_ip

|

||||

target_port = args.target_port

|

||||

addr = (target_ip, target_port)

|

||||

|

||||

# default keep alive: 15

|

||||

ka_sec = args.ka_sec

|

||||

|

||||

# Create a TCP socket

|

||||

client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

|

||||

|

||||

# TCP Keepalive

|

||||

client_socket.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)

|

||||

client_socket.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPCNT, 1)

|

||||

client_socket.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPIDLE, ka_sec)

|

||||

client_socket.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPINTVL, ka_sec)

|

||||

|

||||

# TCP connect

|

||||

client_socket.connect(addr)

|

||||

|

||||

newLines=True

|

||||

while True:

|

||||

|

||||

d4_data = redis_d4.rpop(data_queue)

|

||||

if d4_data is None:

|

||||

time.sleep(1)

|

||||

continue

|

||||

|

||||

if newLines:

|

||||

d4_data = d4_data + b'\n'

|

||||

|

||||

print(d4_data)

|

||||

client_socket.sendall(d4_data)

|

||||

|

||||

client_socket.shutdown(socket.SHUT_RDWR)
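Review note: `TCP_KEEPCNT`, `TCP_KEEPIDLE` and `TCP_KEEPINTVL` are not exposed by Python's `socket` module on every platform, so the direct `setsockopt` calls above can raise `AttributeError` outside Linux. A minimal sketch of a more portable variant follows; the helper name `enable_keepalive` is hypothetical, and the defaults mirror the script (one probe, 15 seconds).

```python
import socket


def enable_keepalive(sock, idle_seconds=15, probes=1):
    """Enable TCP keepalive and apply tuning options where available.

    Returns the names of the options that were actually set.
    """
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    applied = ["SO_KEEPALIVE"]
    tuning = (
        ("TCP_KEEPCNT", probes),          # failed probes before dropping
        ("TCP_KEEPIDLE", idle_seconds),   # idle time before the first probe
        ("TCP_KEEPINTVL", idle_seconds),  # interval between probes
    )
    for name, value in tuning:
        option = getattr(socket, name, None)  # None if the platform lacks it
        if option is not None:
            sock.setsockopt(socket.IPPROTO_TCP, option, value)
            applied.append(name)
    return applied
```

Calling `enable_keepalive(client_socket, ka_sec)` before `connect` would replace the four `setsockopt` lines while degrading gracefully on platforms without the tuning constants.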

|

||||

|

|

@ -0,0 +1,101 @@

|

|||

#!/usr/bin/env python3

|

||||

|

||||

import os

|

||||

import sys

|

||||

|

||||

import redis

|

||||

import time

|

||||

import datetime

|

||||

|

||||

import argparse

|

||||

import logging

|

||||

import logging.handlers

|

||||

|

||||

|

||||

import socket

|

||||

import ssl

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser(description='Export d4 data to a TLS server')

|

||||

parser.add_argument('-t', '--type', help='d4 type or extended type', type=str, dest='type', required=True)

|

||||

parser.add_argument('-u', '--uuid', help='queue uuid', type=str, dest='uuid', required=True)

|

||||

parser.add_argument('-i', '--ip',help='server ip', type=str, default='127.0.0.1', dest='target_ip')

|

||||

parser.add_argument('-p', '--port',help='server port', type=int, dest='target_port', required=True)

|

||||

parser.add_argument('-k', '--Keepalive', help='Keepalive in seconds', type=int, default=15, dest='ka_sec')

|

||||

parser.add_argument('-n', '--newline', help='add new lines', action="store_true")

|

||||

parser.add_argument('-ri', '--redis_ip', help='redis ip', type=str, default='127.0.0.1', dest='host_redis')

|

||||

parser.add_argument('-rp', '--redis_port', help='redis port', type=int, default=6380, dest='port_redis')

|

||||

parser.add_argument('-v', '--verify_certificate', help='verify server certificate', type=str, default='True', dest='verify_certificate')

|

||||

parser.add_argument('-c', '--ca_certs', help='cert filename' , type=str, default=None, dest='ca_certs')

|

||||

args = parser.parse_args()

|

||||

|

||||

if not args.uuid or not args.type or not args.target_port:

|

||||

parser.print_help()

|

||||

sys.exit(0)

|

||||

|

||||

host_redis=args.host_redis

|

||||

port_redis=args.port_redis

|

||||

newLines=args.newline

|

||||

verify_certificate=args.verify_certificate

|

||||

ca_certs=args.ca_certs

|

||||

|

||||

redis_d4= redis.StrictRedis(

|

||||

host=host_redis,

|

||||

port=port_redis,

|

||||

db=2)

|

||||

try:

|

||||

redis_d4.ping()

|

||||

except redis.exceptions.ConnectionError:

|

||||

print('Error: Redis server {}:{}, ConnectionError'.format(host_redis, port_redis))

|

||||

sys.exit(1)

|

||||

|

||||

d4_uuid = args.uuid

|

||||

d4_type = args.type

|

||||

data_queue = 'analyzer:{}:{}'.format(d4_type, d4_uuid)

|

||||

|

||||

target_ip = args.target_ip

|

||||

target_port = args.target_port

|

||||

addr = (target_ip, target_port)

|

||||

|

||||

# default keep alive: 15

|

||||

ka_sec = args.ka_sec

|

||||

|

||||

# Create a TCP socket

|

||||

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

|

||||

|

||||

# TCP Keepalive

|

||||

s.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)

|

||||

s.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPCNT, 1)

|

||||

s.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPIDLE, ka_sec)

|

||||

s.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPINTVL, ka_sec)

|

||||

|

||||

# SSL

|

||||

if verify_certificate in ['False', 'false', 'f']:

|

||||

cert_reqs_option = ssl.CERT_NONE

|

||||

else:

|

||||

cert_reqs_option = ssl.CERT_REQUIRED

|

||||

|

||||

if not ca_certs:  # keep a user-supplied CA file; only normalise an empty value to None

|

||||

ca_certs = None

|

||||

|

||||

client_socket = ssl.wrap_socket(s, cert_reqs=cert_reqs_option, ca_certs=ca_certs, ssl_version=ssl.PROTOCOL_TLS)

|

||||

|

||||

# TCP connect

|

||||

client_socket.connect(addr)

|

||||

|

||||

newLines=True

|

||||

while True:

|

||||

|

||||

d4_data = redis_d4.rpop(data_queue)

|

||||

if d4_data is None:

|

||||

time.sleep(1)

|

||||

continue

|

||||

|

||||

if newLines:

|

||||

d4_data = d4_data + b'\n'

|

||||

|

||||

print(d4_data)

|

||||

client_socket.sendall(d4_data)

|

||||

|

||||

client_socket.shutdown(socket.SHUT_RDWR)
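Review note: the module-level `ssl.wrap_socket` used above was deprecated in Python 3.7 and removed in Python 3.12, so this script will not run on current interpreters. A minimal sketch of the `SSLContext`-based replacement follows; the helper name `make_tls_context` is hypothetical, and its two parameters correspond to the `--verify_certificate` and `--ca_certs` options.

```python
import ssl


def make_tls_context(verify=True, ca_file=None):
    """Return a client-side SSLContext.

    With verify=True the server certificate and hostname are checked against
    ca_file, or against the system trust store when ca_file is None.
    """
    context = ssl.create_default_context(cafile=ca_file)
    if not verify:
        # check_hostname must be disabled before verify_mode can be CERT_NONE.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context
```

The plain socket would then be wrapped with `context.wrap_socket(s, server_hostname=target_ip)` before calling `connect`. Disabling verification should stay limited to test setups with self-signed certificates.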

|

||||

|

|

@ -0,0 +1,73 @@

|

|||

#!/usr/bin/env python3

|

||||

|

||||

import os

|

||||

import sys

|

||||

|

||||

import redis

|

||||

import time

|

||||

import datetime

|

||||

|

||||

import argparse

|

||||

import logging

|

||||

import logging.handlers

|

||||

|

||||

|

||||

import socket

|

||||

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser(description='Export d4 data to a UDP server')

|

||||

parser.add_argument('-t', '--type', help='d4 type or extended type' , type=str, dest='type', required=True)

|

||||

parser.add_argument('-u', '--uuid', help='queue uuid' , type=str, dest='uuid', required=True)

|

||||

parser.add_argument('-i', '--ip',help='server ip' , type=str, default='127.0.0.1', dest='target_ip')

|

||||

parser.add_argument('-p', '--port',help='server port' , type=int, dest='target_port', required=True)

|

||||

parser.add_argument('-n', '--newline', help='add new lines', action="store_true")

|

||||

parser.add_argument('-ri', '--redis_ip',help='redis host' , type=str, default='127.0.0.1', dest='host_redis')

|

||||

parser.add_argument('-rp', '--redis_port',help='redis port' , type=int, default=6380, dest='port_redis')

|

||||

args = parser.parse_args()

|

||||

|

||||

if not args.uuid or not args.type or not args.target_port:

|

||||

parser.print_help()

|

||||

sys.exit(0)

|

||||

|

||||

host_redis=args.host_redis

|

||||

port_redis=args.port_redis

|

||||

newLines = args.newline

|

||||

|

||||

redis_d4= redis.StrictRedis(

|

||||

host=host_redis,

|

||||

port=port_redis,

|

||||

db=2)

|

||||

try:

|

||||

redis_d4.ping()

|

||||

except redis.exceptions.ConnectionError:

|

||||

print('Error: Redis server {}:{}, ConnectionError'.format(host_redis, port_redis))

|

||||

sys.exit(1)

|

||||

|

||||

d4_uuid = args.uuid

|

||||

d4_type = args.type

|

||||

data_queue = 'analyzer:{}:{}'.format(d4_type, d4_uuid)

|

||||

|

||||

target_ip = args.target_ip

|

||||

target_port = args.target_port

|

||||

addr = (target_ip, target_port)

|

||||

|

||||

#Create a UDP socket

|

||||

client_socket = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

|

||||

|

||||

newLines=True

|

||||

while True:

|

||||

|

||||

d4_data = redis_d4.rpop(data_queue)

|

||||

if d4_data is None:

|

||||

time.sleep(1)

|

||||

continue

|

||||

|

||||

if newLines:

|

||||

d4_data = d4_data + b'\n'

|

||||

|

||||

print(d4_data)

|

||||

client_socket.sendto(d4_data, addr)

|

||||

|

||||

client_socket.close()

|

||||

|

|

@ -0,0 +1,80 @@

|

|||

#!/usr/bin/env python3

|

||||

|

||||

import os

|

||||

import sys

|

||||

|

||||

import redis

|

||||

import time

|

||||

import datetime

|

||||

|

||||

import argparse

|

||||

import logging

|

||||

import logging.handlers

|

||||

|

||||

|

||||

import socket

|

||||

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser(description='Export d4 data to a Unix socket')

|

||||

parser.add_argument('-t', '--type', help='d4 type or extended type' , type=str, dest='type', required=True)

|

||||

parser.add_argument('-u', '--uuid', help='queue uuid' , type=str, dest='uuid', required=True)

|

||||

parser.add_argument('-s', '--socket',help='socket file' , type=str, dest='socket_file', required=True)

|

||||

parser.add_argument('-n', '--newline', help='add new lines', action="store_true")

|

||||

parser.add_argument('-ri', '--redis_ip',help='redis host' , type=str, default='127.0.0.1', dest='host_redis')

|

||||

parser.add_argument('-rp', '--redis_port',help='redis port' , type=int, default=6380, dest='port_redis')

|

||||

args = parser.parse_args()

|

||||

|

||||

if not args.uuid or not args.type or not args.socket_file:

|

||||

parser.print_help()

|

||||

sys.exit(0)

|

||||

|

||||

host_redis=args.host_redis

|

||||

port_redis=args.port_redis

|

||||

newLines = args.newline

|

||||

|

||||

redis_d4= redis.StrictRedis(

|

||||

host=host_redis,

|

||||

port=port_redis,

|

||||

db=2)

|

||||

try:

|

||||

redis_d4.ping()

|

||||

except redis.exceptions.ConnectionError:

|

||||

print('Error: Redis server {}:{}, ConnectionError'.format(host_redis, port_redis))

|

||||

sys.exit(1)

|

||||

|

||||

d4_uuid = args.uuid

|

||||

d4_type = args.type

|

||||

data_queue = 'analyzer:{}:{}'.format(d4_type, d4_uuid)

|

||||

|

||||

|

||||

socket_file = args.socket_file

|

||||

print("UNIX SOCKET: Connecting...")

|

||||

if os.path.exists(socket_file):

|

||||

client = socket.socket(socket.AF_UNIX, socket.SOCK_DGRAM)

|

||||

client.connect(socket_file)

|

||||

print("Connected")

|

||||

else:

|

||||

print("Couldn't Connect!")

|

||||

print("ERROR: socket file not found")

|

||||

print("Done")

|

||||

|

||||

|

||||

|

||||

newLines=False

|

||||

while True:

|

||||

|

||||

d4_data = redis_d4.rpop(data_queue)

|

||||

if d4_data is None:

|

||||

time.sleep(1)

|

||||

continue

|

||||

|

||||

if newLines:

|

||||

d4_data = d4_data + b'\n'

|

||||

|

||||

print(d4_data)

|

||||

|

||||

client.send(d4_data)

|

||||

|

||||

client.close()

|

||||

|

|

@ -0,0 +1,81 @@

|

|||

#!/usr/bin/env python3

import os
import sys

import redis
import time
import datetime

import argparse
import logging
import logging.handlers

if __name__ == "__main__":
    parser = argparse.ArgumentParser(description='Export d4 data to stdout')
    parser.add_argument('-t', '--type', help='d4 type', type=int, dest='type', required=True)
    parser.add_argument('-u', '--uuid', help='queue uuid', type=str, dest='uuid', required=True)
    parser.add_argument('-f', '--files', help='read data from files. Append file to stdin', action="store_true")
    parser.add_argument('-n', '--newline', help='add new lines', action="store_true")
    parser.add_argument('-i', '--ip', help='redis host', type=str, default='127.0.0.1', dest='host_redis')
    parser.add_argument('-p', '--port', help='redis port', type=int, default=6380, dest='port_redis')
    args = parser.parse_args()

    if not args.uuid or not args.type:
        parser.print_help()
        sys.exit(0)

    host_redis = args.host_redis
    port_redis = args.port_redis
    newLines = args.newline
    read_files = args.files

    redis_d4 = redis.StrictRedis(
                    host=host_redis,
                    port=port_redis,
                    db=2)
    try:
        redis_d4.ping()
    except redis.exceptions.ConnectionError:
        print('Error: Redis server {}:{}, ConnectionError'.format(host_redis, port_redis))
        sys.exit(1)

    # logs_dir = 'logs'
    # if not os.path.isdir(logs_dir):
    #     os.makedirs(logs_dir)
    #
    # log_filename = 'logs/d4-stdout.log'
    # logger = logging.getLogger()
    # formatter = logging.Formatter('%(asctime)s - %(levelname)s - %(message)s')
    # handler_log = logging.handlers.TimedRotatingFileHandler(log_filename, when="midnight", interval=1)
    # handler_log.suffix = '%Y-%m-%d.log'
    # handler_log.setFormatter(formatter)
    # logger.addHandler(handler_log)
    # logger.setLevel(args.verbose)
    #
    # logger.info('Launching stdout Analyzer ...')

    d4_uuid = args.uuid
    d4_type = args.type

    data_queue = 'analyzer:{}:{}'.format(d4_type, d4_uuid)

    while True:
        d4_data = redis_d4.rpop(data_queue)
        if d4_data is None:
            time.sleep(1)
            continue
        if read_files:
            try:
                with open(d4_data, 'rb') as f:
                    sys.stdout.buffer.write(f.read())
                sys.exit(0)
            except FileNotFoundError:
                ## TODO: write logs file
                continue

        else:
            if newLines:
                sys.stdout.buffer.write(d4_data + b'\n')
            else:
                sys.stdout.buffer.write(d4_data)

|

||||

|

|

@ -155,7 +155,7 @@ supervised no

|

|||

#

|

||||

# Creating a pid file is best effort: if Redis is not able to create it

|

||||

# nothing bad happens, the server will start and run normally.

|

||||

pidfile /var/run/redis_6379.pid

|

||||

pidfile /var/run/redis_6380.pid

|

||||

|

||||

# Specify the server verbosity level.

|

||||

# This can be one of:

|

||||

|

|

@ -843,7 +843,7 @@ lua-time-limit 5000

|

|||

# Make sure that instances running in the same system do not have

|

||||

# overlapping cluster configuration file names.

|

||||

#

|

||||

# cluster-config-file nodes-6379.conf

|

||||

# cluster-config-file nodes-6380.conf

|

||||

|

||||

# Cluster node timeout is the amount of milliseconds a node must be unreachable

|

||||

# for it to be considered in failure state.

|

||||

|

|

@ -971,7 +971,7 @@ lua-time-limit 5000

|

|||

# Example:

|

||||

#

|

||||

# cluster-announce-ip 10.1.1.5

|

||||

# cluster-announce-port 6379

|

||||

# cluster-announce-port 6380

|

||||

# cluster-announce-bus-port 6380

|

||||

|

||||

################################## SLOW LOG ###################################

|

||||

|

|

|

|||

|

|

@ -3,3 +3,39 @@

|

|||

use_default_save_directory = yes

|

||||

save_directory = None

|

||||

|

||||

[D4_Server]

|

||||

server_port=4443

|

||||

# registration or shared-secret

|

||||

server_mode = registration

|

||||

default_hmac_key = private key to change

|

||||

analyzer_queues_max_size = 100000000

|

||||

|

||||

[Flask_Server]

|

||||

# UI port number

|

||||

host = 127.0.0.1

|

||||

port = 7000

|

||||

|

||||

[Redis_STREAM]

|

||||

host = localhost

|

||||

port = 6379

|

||||

db = 0

|

||||

|

||||

[Redis_METADATA]

|

||||

host = localhost

|

||||

port = 6380

|

||||

db = 0

|

||||

|

||||

[Redis_SERV]

|

||||

host = localhost

|

||||

port = 6380

|

||||

db = 1

|

||||

|

||||

[Redis_ANALYZER]

|

||||

host = localhost

|

||||

port = 6380

|

||||

db = 2

|

||||

|

||||

[Redis_CACHE]

|

||||

host = localhost

|

||||

port = 6380

|

||||

db = 3

|

||||

|

|

|

|||

|

|

@ -0,0 +1,156 @@

|

|||

# Should be using configs but not supported until docker 17.06+

|

||||

# https://www.d4-project.org/2019/05/28/passive-dns-tutorial.html

|

||||

|

||||

version: "3"

|

||||

services:

|

||||

redis-stream:

|

||||

image: redis

|

||||

command: redis-server --port 6379

|

||||

|

||||

redis-metadata:

|

||||

image: redis

|

||||

command: redis-server --port 6380

|

||||

|

||||

redis-analyzer:

|

||||

image: redis

|

||||

command: redis-server --port 6400

|

||||

|

||||

d4-server:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.d4-server

|

||||

image: d4-server:latest

|

||||

depends_on:

|

||||

- redis-stream

|

||||

- redis-metadata

|

||||

environment:

|

||||

- D4_REDIS_STREAM_HOST=redis-stream

|

||||

- D4_REDIS_STREAM_PORT=6379

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

ports:

|

||||

- "4443:4443"

|

||||

|

||||

d4-worker_1:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.d4-server

|

||||

image: d4-server:latest

|

||||

depends_on:

|

||||

- redis-stream

|

||||

- redis-metadata

|

||||

environment:

|

||||

- D4_REDIS_STREAM_HOST=redis-stream

|

||||

- D4_REDIS_STREAM_PORT=6379

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

entrypoint: bash -c "cd workers/workers_1; ./workers_manager.py; read x"

|

||||

volumes:

|

||||

- d4-data:/usr/src/d4-server/data

|

||||

|

||||

d4-worker_2:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.d4-server

|

||||

image: d4-server:latest

|

||||

depends_on:

|

||||

- redis-stream

|

||||

- redis-metadata

|

||||

environment:

|

||||

- D4_REDIS_STREAM_HOST=redis-stream

|

||||

- D4_REDIS_STREAM_PORT=6379

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

entrypoint: bash -c "cd workers/workers_2; ./workers_manager.py; read x"

|

||||

volumes:

|

||||

- d4-data:/usr/src/d4-server/data

|

||||

|

||||

d4-worker_4:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.d4-server

|

||||

image: d4-server:latest

|

||||

depends_on:

|

||||

- redis-stream

|

||||

- redis-metadata

|

||||

environment:

|

||||

- D4_REDIS_STREAM_HOST=redis-stream

|

||||

- D4_REDIS_STREAM_PORT=6379

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

entrypoint: bash -c "cd workers/workers_4; ./workers_manager.py; read x"

|

||||

volumes:

|

||||

- d4-data:/usr/src/d4-server/data

|

||||

|

||||

d4-worker_8:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.d4-server

|

||||

image: d4-server:latest

|

||||

depends_on:

|

||||

- redis-stream

|

||||

- redis-metadata

|

||||

environment:

|

||||

- D4_REDIS_STREAM_HOST=redis-stream

|

||||

- D4_REDIS_STREAM_PORT=6379

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

entrypoint: bash -c "cd workers/workers_8; ./workers_manager.py; read x"

|

||||

volumes:

|

||||

- d4-data:/usr/src/d4-server/data

|

||||

|

||||

d4-web:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.d4-server

|

||||

image: d4-server:latest

|

||||

depends_on:

|

||||

- redis-stream

|

||||

- redis-metadata

|

||||

environment:

|

||||

- D4_REDIS_STREAM_HOST=redis-stream

|

||||

- D4_REDIS_STREAM_PORT=6379

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

entrypoint: bash -c "cd web; ./Flask_server.py; read x"

|

||||

ports:

|

||||

- "7000:7000"

|

||||

volumes:

|

||||

- d4-data:/usr/src/d4-server/data

|

||||

|

||||

d4-analyzer-passivedns-cof:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.analyzer-d4-passivedns

|

||||

image: analyzer-d4-passivedns:latest

|

||||

depends_on:

|

||||

- redis-metadata

|

||||

- redis-analyzer

|

||||

environment:

|

||||

- D4_ANALYZER_REDIS_HOST=redis-analyzer

|

||||

- D4_ANALYZER_REDIS_PORT=6400

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

- DEBUG=true

|

||||

entrypoint: bash -c "python ./pdns-cof-server.py; read x"

|

||||

ports:

|

||||

- "8400:8400"

|

||||

|

||||

d4-analyzer-passivedns-ingestion:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: Dockerfile.analyzer-d4-passivedns

|

||||

image: analyzer-d4-passivedns:latest

|

||||

depends_on:

|

||||

- redis-metadata

|

||||

- redis-analyzer

|

||||

environment:

|

||||

- D4_ANALYZER_REDIS_HOST=redis-analyzer

|

||||

- D4_ANALYZER_REDIS_PORT=6400

|

||||

- D4_REDIS_METADATA_HOST=redis-metadata

|

||||

- D4_REDIS_METADATA_PORT=6380

|

||||

- DEBUG=true

|

||||

entrypoint: bash -c "python ./pdns-ingestion.py; read x"

|

||||

|

||||

volumes:

|

||||

d4-data:

|

||||

|

|

@ -0,0 +1,130 @@

|

|||

# D4 core

## D4 core server

The D4 core server is a complete server for handling clients (sensors): it decapsulates the [D4 protocol](https://github.com/D4-project/architecture/tree/master/format), controls
sensor registrations, manages decoding protocols and dispatches data to the appropriate decoders/analysers.

## Database map - Metadata

```
DB 0 - Stats + sensor configs
DB 1 - Users
DB 2 - Analyzer queue
DB 3 - Flask Cache
```
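
The database numbers above appear to line up with the `[Redis_*]` sections of the sample server configuration added in the same change set (`Redis_METADATA` db 0, `Redis_SERV` db 1, `Redis_ANALYZER` db 2, `Redis_CACHE` db 3, all on port 6380). The following is a minimal sketch of reading that layout with the standard library. The helper name `redis_db_map` and the inline `SAMPLE_CONF` text are assumptions made for illustration; they are not part of the D4 code base.

```python
import configparser

# Inline copy of the Redis sections from the sample config (illustration only).
SAMPLE_CONF = """
[Redis_METADATA]
host = localhost
port = 6380
db = 0

[Redis_SERV]
host = localhost
port = 6380
db = 1

[Redis_ANALYZER]
host = localhost
port = 6380
db = 2

[Redis_CACHE]
host = localhost
port = 6380
db = 3
"""


def redis_db_map(conf_text):
    """Return {section name: (host, port, db)} for every Redis_* section."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    return {
        name: (section.get('host'), section.getint('port'), section.getint('db'))
        for name, section in parser.items()
        if name.startswith('Redis_')
    }


if __name__ == "__main__":
    for name, (host, port, db) in sorted(redis_db_map(SAMPLE_CONF).items()):
        print('{:<16} {}:{} db={}'.format(name, host, port, db))
```

Keeping the mapping in one place like this makes it easier to check that a script hard-coding `db=2` is really talking to the analyzer queues.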

|

||||

|

||||

### DB 1

##### User Management:
| Hset Key | Field | Value |
| ------ | ------ | ------ |
| user:all | **user id** | **password hash** |
| | | |
| user:tokens | **token** | **user id** |
| | | |
| user_metadata:**user id** | token | **token** |
| | change_passwd | **boolean** |
| | role | **role** |

| Set Key | Value |
| ------ | ------ |
| user_role:**role** | **user id** |


| Zset Key | Field | Value |
| ------ | ------ | ------ |
| ail:all_role | **role** | **int, role priority (1=admin)** |

|

||||

|

||||

### Server
| Key | Value |
| --- | --- |
| server:hmac_default_key | **hmac_default_key** |

| Set Key | Value |
| --- | --- |
| server:accepted_type | **accepted type** |
| server:accepted_extended_type | **accepted extended type** |

###### Server Mode
| Set Key | Value |
| --- | --- |
| blacklist_ip | **blacklisted ip** |
| blacklist_ip_by_uuid | **uuidv4** |
| blacklist_uuid | **uuidv4** |

###### Connection Manager
| Set Key | Value |
| --- | --- |
| active_connection | **uuid** |
| | |
| active_connection:**type** | **uuid** |
| active_connection_extended_type:**uuid** | **extended type** |
| | |
| active_uuid_type2:**uuid** | **session uuid** |
| | |
| map:active_connection-uuid-session_uuid:**uuid** | **session uuid** |

| Set Key | Field | Value |
| --- | --- | --- |
| map:session-uuid_active_extended_type | **session_uuid** | **extended_type** |

|

||||

|

||||

### Stats
| Zset Key | Field | Value |
| --- | --- | --- |
| stat_uuid_ip:**date**:**uuid** | **IP** | **number of D4 packets** |
| | | |
| stat_uuid_type:**date**:**uuid** | **type** | **number of D4 packets** |
| | | |
| stat_type_uuid:**date**:**type** | **uuid** | **number of D4 packets** |
| | | |
| stat_ip_uuid:20190519:158.64.14.86 | **uuid** | **number of D4 packets** |
| | | |
| | | |
| daily_uuid:**date** | **uuid** | **number of D4 packets** |
| | | |
| daily_type:**date** | **type** | **number of D4 packets** |
| | | |
| daily_ip:**date** | **IP** | **number of D4 packets** |
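
The key names in the Stats table all follow the same pattern: a prefix, the date, and (for the `stat_*` keys) the entity the counter is grouped by. A minimal sketch of building those names for one received packet is shown below. The function name `stat_updates` is hypothetical, the `YYYYMMDD` date format is inferred from the `20190519` example row, and the table does not say which Redis command updates the counters, so the sketch only produces `(key, member)` pairs.

```python
from datetime import date


def stat_updates(day, sensor_uuid, d4_type, ip):
    """Return (sorted-set key, member) pairs touched by one D4 packet."""
    d = day.strftime('%Y%m%d')  # format assumed from the 20190519 example
    return [
        ('stat_uuid_ip:{}:{}'.format(d, sensor_uuid), ip),
        ('stat_uuid_type:{}:{}'.format(d, sensor_uuid), str(d4_type)),
        ('stat_type_uuid:{}:{}'.format(d, d4_type), sensor_uuid),
        ('stat_ip_uuid:{}:{}'.format(d, ip), sensor_uuid),
        ('daily_uuid:{}'.format(d), sensor_uuid),
        ('daily_type:{}'.format(d), str(d4_type)),
        ('daily_ip:{}'.format(d), ip),
    ]


if __name__ == "__main__":
    for key, member in stat_updates(date(2019, 5, 19), 'SENSOR-UUID', 1, '158.64.14.86'):
        print(key, member)
```

Each pair would then presumably be passed to a sorted-set increment, but that step is an assumption and is left out on purpose.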

|

||||

|

||||

### metadata sensors
| Hset Key | Field | Value |
| --- | --- | --- |
| metadata_uuid:**uuid** | first_seen | **epoch** |
| | last_seen | **epoch** |
| | description | **description** (optional) |
| | Error | **error message** (optional) |
| | hmac_key | **hmac_key** (optional) |
| | user_id | **user_id** (optional) |

###### Last IP
| List Key | Value |
| --- | --- |
| list_uuid_ip:**uuid** | **IP** |

### metadata types by sensors
| Hset Key | Field | Value |
| --- | --- | --- |
| metadata_uuid:**uuid** | first_seen | **epoch** |
| | last_seen | **epoch** |

| Set Key | Value |
| --- | --- |
| all_types_by_uuid:**uuid** | **type** |
| all_extended_types_by_uuid:**uuid** | **type** |

|

||||

|

||||

### analyzers
###### metadata
| Hset Key | Field | Value |
| --- | --- | --- |
| analyzer:**uuid** | last_updated | **epoch** |
| | description | **description** |
| | max_size | **queue max size** |

###### all analyzers by type
| Set Key | Value |
| --- | --- |
| analyzer:**type** | **uuid** |
| analyzer:254:**extended type** | **uuid** |
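
The exporter scripts in this change set consume a list named `analyzer:<type>:<uuid>`: they `RPOP` one item at a time and sleep for one second when the list is empty. A minimal sketch of that loop, decoupled from Redis so it can be exercised offline, is given below. `queue_name`, `drain_queue`, `FakeRedis` and the `max_idle` stop condition are hypothetical names introduced here (the real scripts loop forever), and the use of `LPUSH` on the producer side is an assumption.

```python
import time
from collections import deque


def queue_name(d4_type, queue_uuid):
    """Name of the list an analyzer polls, as built by the exporter scripts."""
    return 'analyzer:{}:{}'.format(d4_type, queue_uuid)


def drain_queue(client, name, handle, add_newline=False, max_idle=1, sleep=time.sleep):
    """RPOP items and pass them to handle(); stop after max_idle empty polls in a row."""
    idle = 0
    handled = 0
    while idle < max_idle:
        item = client.rpop(name)
        if item is None:
            idle += 1
            sleep(1)  # same back-off as the scripts: one second per empty poll
            continue
        idle = 0
        handle((item + b'\n') if add_newline else item)
        handled += 1
    return handled


class FakeRedis:
    """In-memory stand-in exposing only lpush/rpop (illustration only)."""

    def __init__(self):
        self.lists = {}

    def lpush(self, name, *values):
        queue = self.lists.setdefault(name, deque())
        for value in values:
            queue.appendleft(value)

    def rpop(self, name):
        queue = self.lists.get(name)
        return queue.pop() if queue else None
```

Any object with an `rpop(name)` method returning `bytes` or `None` can be passed as `client`, so the same function could be handed a real Redis connection pointed at DB 2.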

|

||||

|

|

@ -0,0 +1,94 @@

|

|||

# API DOCUMENTATION

## General

### Automation key

Automation is authenticated with a secret API key available in the D4 web interface, in the ``Settings`` menu under ``My Profile``. Keep that key secret: it gives access to the entire database!

Pass the key in the following header:

~~~~
Authorization: YOUR_API_KEY
~~~~
### Accept and Content-Type headers

When submitting data in a POST, PUT or DELETE operation, you need to specify the content type in which the payload is encoded. This is done by setting the following Content-Type header:

~~~~
Content-Type: application/json
~~~~

Example:

~~~~
curl --header "Authorization: YOUR_API_KEY" --header "Content-Type: application/json" https://D4_URL/
~~~~
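
The same two headers can be set from Python. The sketch below only prepares a request object with the standard library and does not send it; `build_request` is a hypothetical helper name, and `https://D4_URL/` and `YOUR_API_KEY` are the placeholders from the curl example above.

```python
import json
import urllib.request


def build_request(base_url, path, api_key, payload=None, method='GET'):
    """Prepare (but do not send) an authenticated request with a JSON body."""
    data = json.dumps(payload).encode('utf-8') if payload is not None else None
    req = urllib.request.Request(
        base_url.rstrip('/') + '/' + path.lstrip('/'),
        data=data,
        method=method,
    )
    req.add_header('Authorization', api_key)
    if data is not None:
        # Only announce a content type when there is a payload to describe.
        req.add_header('Content-Type', 'application/json')
    return req


if __name__ == "__main__":
    req = build_request('https://D4_URL/', 'api/v1/add/sensor/register',
                        'YOUR_API_KEY', {'uuid': '...'}, method='POST')
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would perform the call; that step is omitted because it needs a reachable server and a valid key.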

|

||||

|

||||

## Sensor Registration

### Register a sensor: `api/v1/add/sensor/register`<a name="add_sensor_register"></a>

#### Description
Register a sensor.

**Method** : `POST`

#### Parameters
- `uuid`
  - sensor uuid
  - *uuid4*
  - mandatory

- `hmac_key`
  - sensor secret key
  - *binary*
  - mandatory

- `description`
  - sensor description
  - *str*

- `mail`
  - user mail
  - *str*

#### JSON response
- `uuid`
  - sensor uuid
  - *uuid4*

#### Example
```
curl https://127.0.0.1:7000/api/v1/add/sensor/register --header "Authorization: iHc1_ChZxj1aXmiFiF1mkxxQkzawwriEaZpPqyTQj" -H "Content-Type: application/json" --data @input.json -X POST
```

#### input.json Example
```json
{
  "uuid": "ff7ba400-e76c-4053-982d-feec42bdef38",
  "hmac_key": "...HMAC_KEY..."
}
```
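
Before calling the endpoint it can help to check the payload locally. A minimal sketch follows, assuming the server accepts exactly the four parameters listed above; `is_uuid4` and `make_register_payload` are hypothetical helper names, and the strictness of the uuid check is a choice made here, not a statement about what the server enforces.

```python
import json
import uuid


def is_uuid4(value):
    """True if value is the canonical text form of a version 4 UUID."""
    try:
        parsed = uuid.UUID(value, version=4)
    except (ValueError, AttributeError, TypeError):
        return False
    # UUID(..., version=4) rewrites the version bits, so compare with the input.
    return str(parsed) == value.lower()


def make_register_payload(sensor_uuid, hmac_key, description=None, mail=None):
    """Build the JSON body for the register call, or return None if input is unusable."""
    if not is_uuid4(sensor_uuid) or not hmac_key:
        return None
    payload = {'uuid': sensor_uuid, 'hmac_key': hmac_key}
    if description is not None:
        payload['description'] = description
    if mail is not None:
        payload['mail'] = mail
    return payload


if __name__ == "__main__":
    body = make_register_payload('ff7ba400-e76c-4053-982d-feec42bdef38', '...HMAC_KEY...')
    print(json.dumps(body, indent=2))
```

Optional fields are left out of the body entirely when they are not supplied, which keeps the request identical to the `input.json` example for the minimal case.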

|

||||

|

||||

#### Expected Success Response
**HTTP Status Code** : `200`

```json
{
  "uuid": "ff7ba400-e76c-4053-982d-feec42bdef38"
}
```

#### Expected Fail Response

**HTTP Status Code** : `400`
```json
{"status": "error", "reason": "Mandatory parameter(s) not provided"}
{"status": "error", "reason": "Invalid uuid"}
```

**HTTP Status Code** : `409`
```json
{"status": "error", "reason": "Sensor already registred"}
```
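
A client can branch on the status codes documented above. The sketch below is one possible way to do so, under the assumption that error bodies always carry a `reason` field as in the examples; `parse_register_response` is a hypothetical name.

```python
import json


def parse_register_response(status_code, body):
    """Return (True, uuid) on HTTP 200, otherwise (False, reason)."""
    try:
        data = json.loads(body) if body else {}
    except ValueError:
        return (False, 'response body is not valid JSON')
    if status_code == 200 and 'uuid' in data:
        return (True, data['uuid'])
    # 400 and 409 are the documented failures; anything else is reported as-is.
    return (False, data.get('reason', 'unexpected HTTP status {}'.format(status_code)))


if __name__ == "__main__":
    print(parse_register_response(200, '{"uuid": "ff7ba400-e76c-4053-982d-feec42bdef38"}'))
    print(parse_register_response(400, '{"status": "error", "reason": "Invalid uuid"}'))
```

Returning a tuple instead of raising keeps the calling code simple when registering many sensors in a loop.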

|

||||

|

|

@ -7,3 +7,6 @@ openssl req -sha256 -new -key server.key -out server.csr -config san.cnf

|

|||

openssl x509 -req -in server.csr -CA rootCA.crt -CAkey rootCA.key -CAcreateserial -out server.crt -days 500 -sha256 -extfile ext3.cnf

|

||||

# Concat in pem

|

||||

cat server.crt server.key > ../server.pem

|

||||

# Copy certs for Flask https

|

||||

cp server.key ../web/server.key

|

||||

cp server.crt ../web/server.crt

|

||||

|

|

|

|||

|

|

@ -12,6 +12,10 @@ if [ -z "$VIRTUAL_ENV" ]; then

|

|||

fi

|

||||

python3 -m pip install -r requirement.txt

|

||||

|

||||

pushd configs/

|

||||

cp server.conf.sample server.conf

|

||||

popd

|

||||

|

||||

pushd web/

|

||||

./update_web.sh

|

||||

popd

|

||||

|

|

@ -25,3 +29,17 @@ pushd redis/

|

|||

git checkout 5.0

|

||||

make

|

||||

popd

|

||||

|

||||

# LAUNCH

|

||||

bash LAUNCH.sh -l &

|

||||

wait

|

||||

echo ""

|

||||

|

||||

# create default users

|

||||

pushd web/

|

||||

./create_default_user.py

|

||||

popd

|

||||

|

||||

bash LAUNCH.sh -k &

|

||||

wait

|

||||

echo ""

|

||||

|

|

|

|||

|

|

@ -0,0 +1,370 @@

|

|||

#!/usr/bin/env python3
# -*-coding:UTF-8 -*

import os
import sys
import datetime
import time
import uuid
import redis

sys.path.append(os.path.join(os.environ['D4_HOME'], 'lib/'))
import ConfigLoader
import d4_type

### Config ###
config_loader = ConfigLoader.ConfigLoader()
r_serv_metadata = config_loader.get_redis_conn("Redis_METADATA")
r_serv_analyzer = config_loader.get_redis_conn("Redis_ANALYZER")
LIST_DEFAULT_SIZE = config_loader.get_config_int('D4_Server', 'analyzer_queues_max_size')
config_loader = None
### ###

def is_valid_uuid_v4(uuid_v4):
    if uuid_v4:
        uuid_v4 = uuid_v4.replace('-', '')
    else:
        return False

    try:
        uuid_test = uuid.UUID(hex=uuid_v4, version=4)
        return uuid_test.hex == uuid_v4
    # malformed hex string or non-string input
    except (ValueError, AttributeError, TypeError):
        return False

def sanitize_uuid(uuid_v4, not_exist=False):
    # replace an invalid uuid by a freshly generated one
    if not is_valid_uuid_v4(uuid_v4):
        uuid_v4 = str(uuid.uuid4())
    if not_exist:
        if exist_queue(uuid_v4):
            uuid_v4 = str(uuid.uuid4())
    return uuid_v4

def sanitize_queue_type(format_type):
    try:
        format_type = int(format_type)
    except (ValueError, TypeError):
        format_type = 1
    # type 2 is stored as extended type 254
    if format_type == 2:
        format_type = 254
    return format_type

def exist_queue(queue_uuid):
    return r_serv_metadata.exists('analyzer:{}'.format(queue_uuid))

def get_all_queues(r_list=None):
    res = r_serv_metadata.smembers('all_analyzer_queues')
    if r_list:
        return list(res)
    return res

def get_all_queues_format_type(r_list=None):
    res = r_serv_metadata.smembers('all:analyzer:format_type')
    if r_list:
        return list(res)
    return res

def get_all_queues_extended_type(r_list=None):
    res = r_serv_metadata.smembers('all:analyzer:extended_type')
    if r_list:
        return list(res)
    return res

|

||||

|

||||

# GLOBAL

|

||||

def get_all_queues_uuid_by_type(format_type, r_list=None):

|

||||

res = r_serv_metadata.smembers('all:analyzer:by:format_type:{}'.format(format_type))

|